- Главная

- Разное

- Дизайн

- Бизнес и предпринимательство

- Аналитика

- Образование

- Развлечения

- Красота и здоровье

- Финансы

- Государство

- Путешествия

- Спорт

- Недвижимость

- Армия

- Графика

- Культурология

- Еда и кулинария

- Лингвистика

- Английский язык

- Астрономия

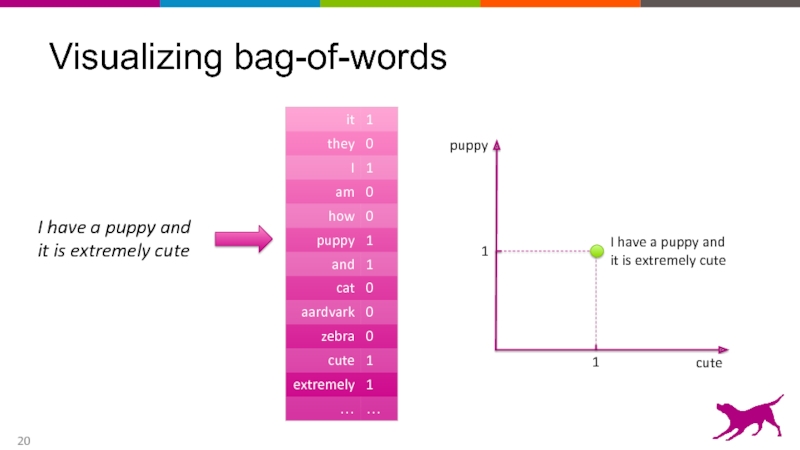

- Алгебра

- Биология

- География

- Детские презентации

- Информатика

- История

- Литература

- Маркетинг

- Математика

- Медицина

- Менеджмент

- Музыка

- МХК

- Немецкий язык

- ОБЖ

- Обществознание

- Окружающий мир

- Педагогика

- Русский язык

- Технология

- Физика

- Философия

- Химия

- Шаблоны, картинки для презентаций

- Экология

- Экономика

- Юриспруденция

Understanding Feature Space in Machine Learning (presentation)

Contents

- 1. Understanding Feature Space in Machine Learning

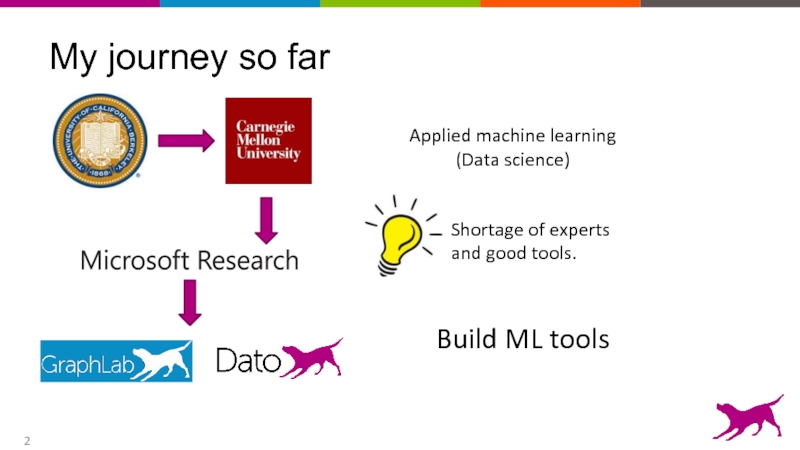

- 2. My journey so far: applied machine learning (data science); build ML tools

- 3. Why machine learning? Model data. Make predictions. Build intelligent applications.

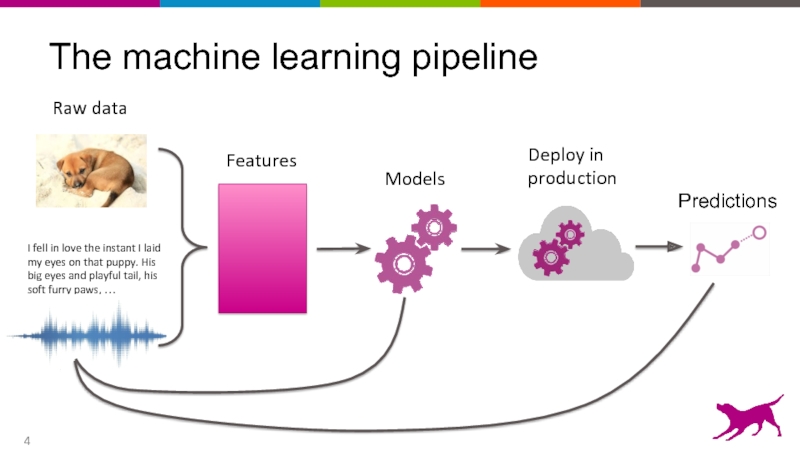

- 4. The machine learning pipeline

- 5. Feature = numeric representation of raw data

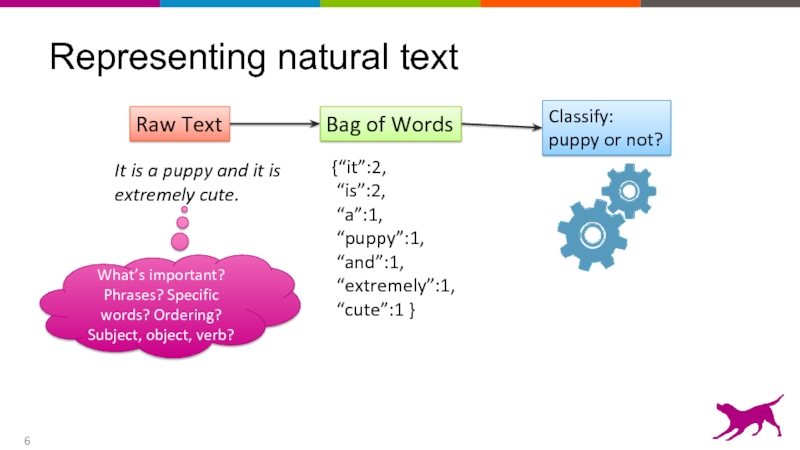

- 6. Representing natural text: “It is a puppy and it is extremely cute.”

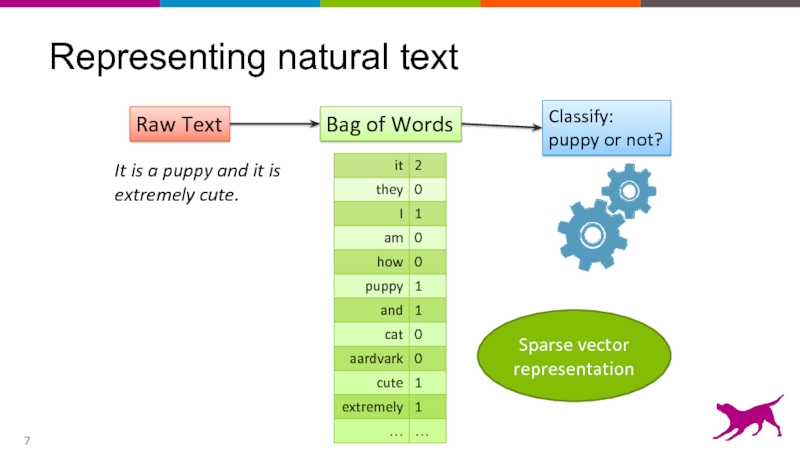

- 7. Representing natural text: “It is a puppy and it is extremely cute.”

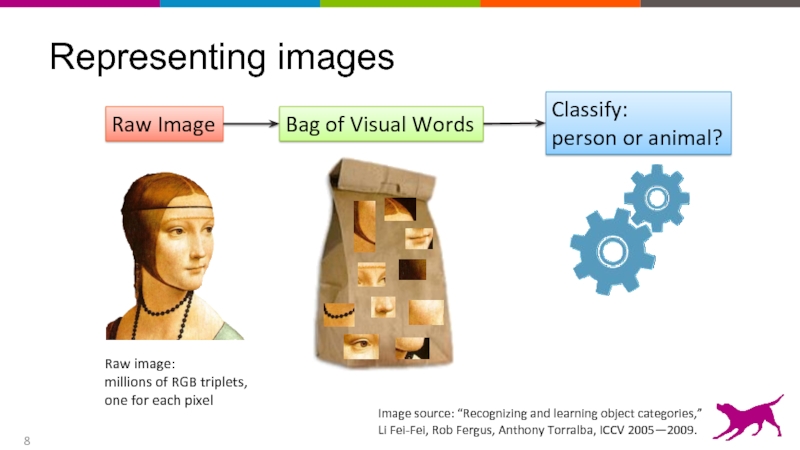

- 8. Representing images

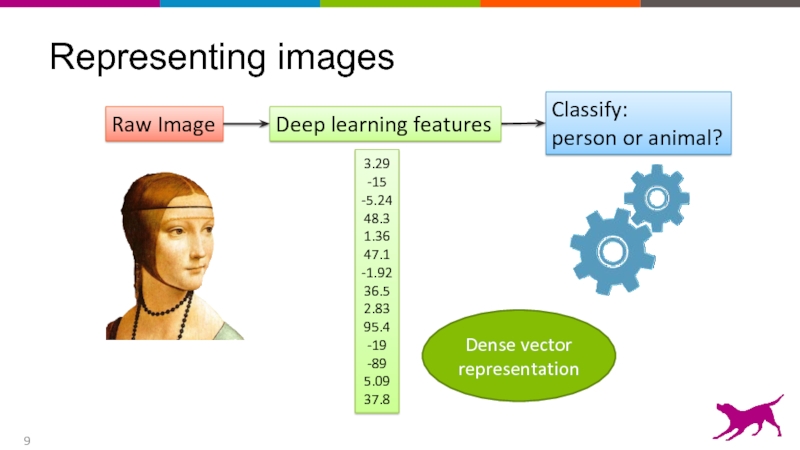

- 9. Representing images: raw image vs. deep learning features

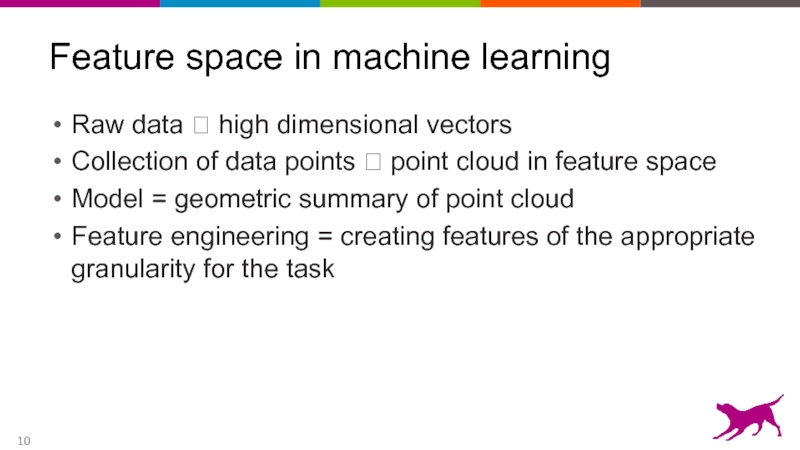

- 10. Feature space in machine learning

- 11. Crudely speaking, mathematicians fall into two categories:

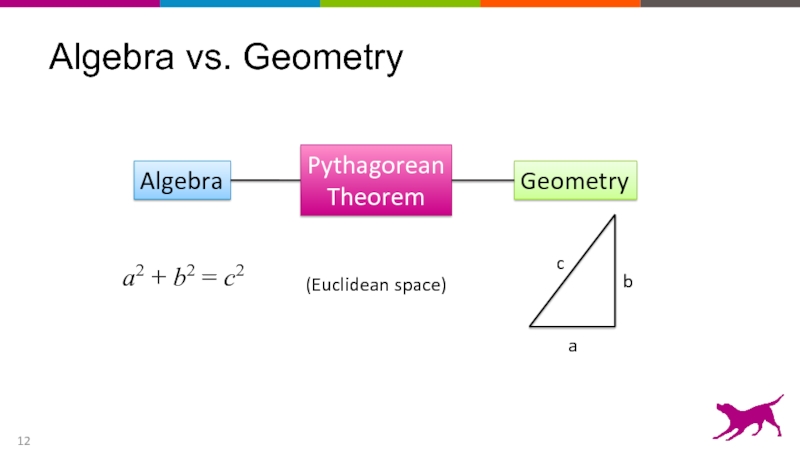

- 12. Algebra vs. Geometry: $a^2 + b^2 = c^2$ (algebra) vs. a right triangle with sides $a$, $b$, $c$ (geometry, Euclidean space)

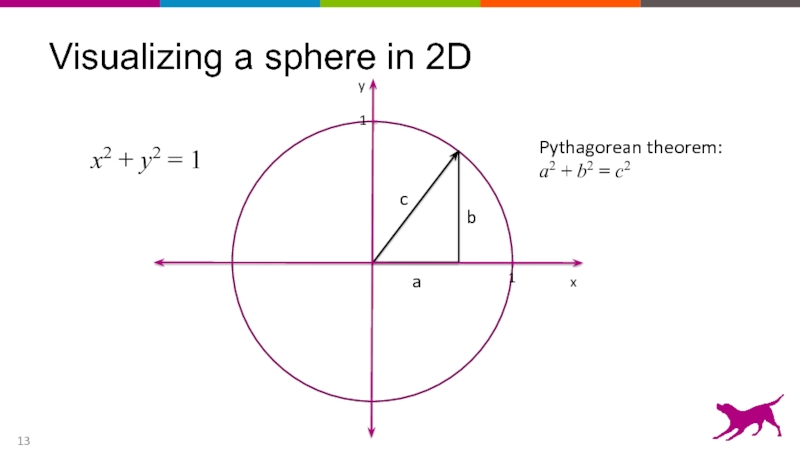

- 13. Visualizing a sphere in 2D: $x^2 + y^2 = 1$

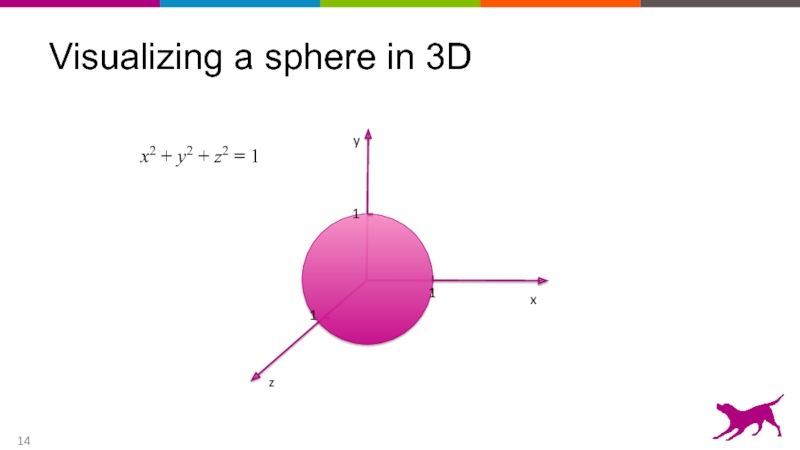

- 14. Visualizing a sphere in 3D: $x^2 + \dots$

- 15. Visualizing a sphere in 4D: $x^2 + \dots$

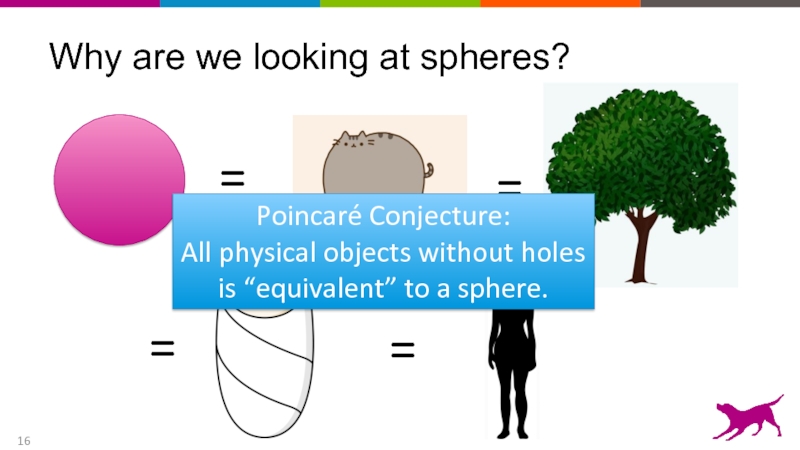

- 16. Why are we looking at spheres?

- 17. The power of higher dimensions

- 18. Visualizing Feature Space

- 19. The challenge of high-dimensional geometry

- 20. Visualizing bag-of-words: “I have a puppy and it is extremely cute”

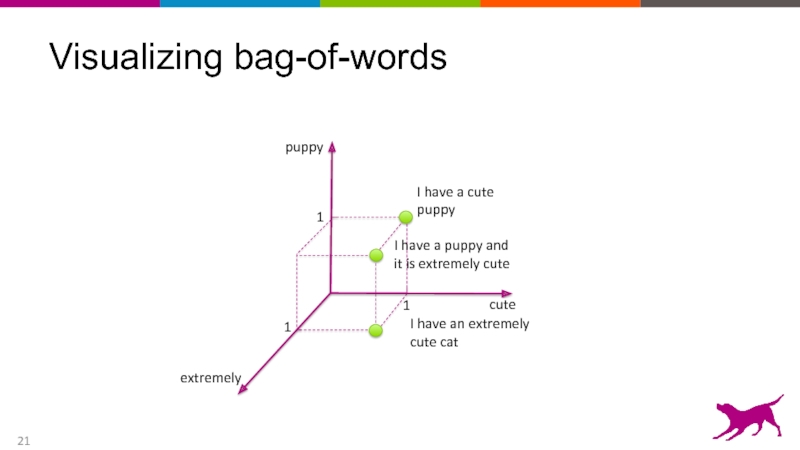

- 21. Visualizing bag-of-words: axes *puppy*, *cute*, *extremely*, each with count 1

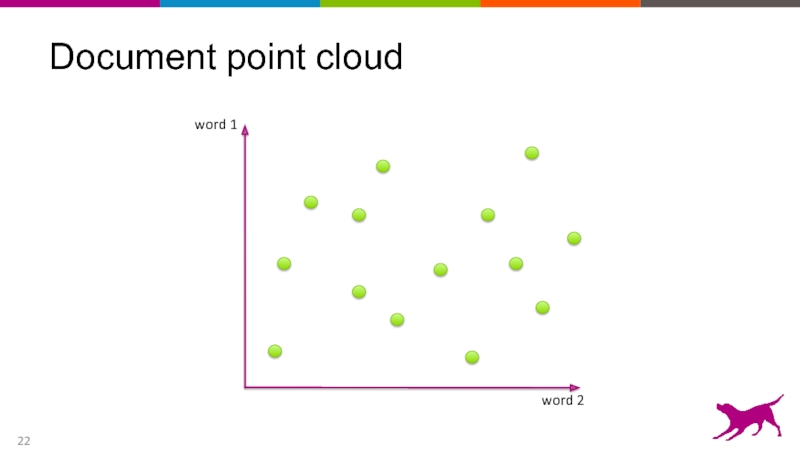

- 22. Document point cloud

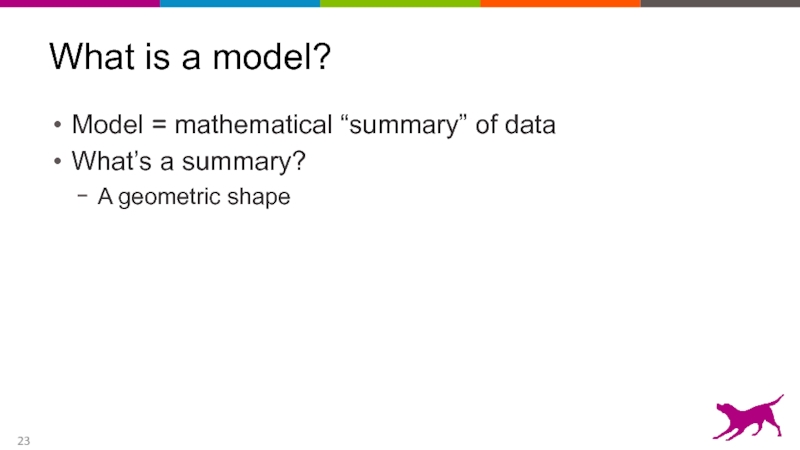

- 23. What is a model? Model = mathematical …

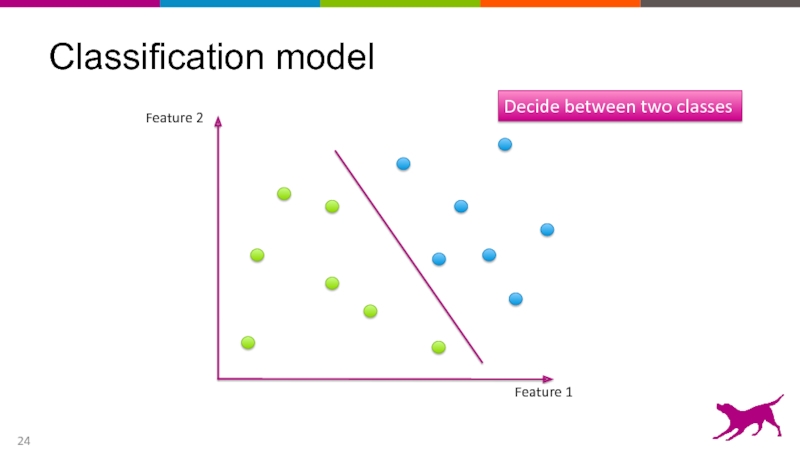

- 24. Classification model

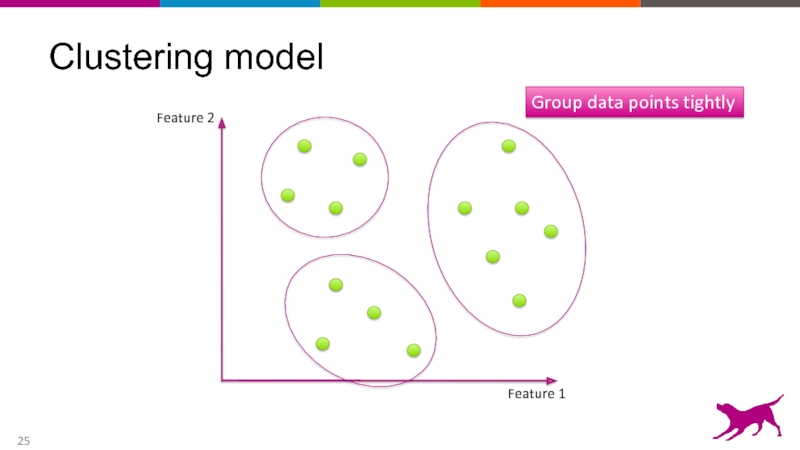

- 25. Clustering model

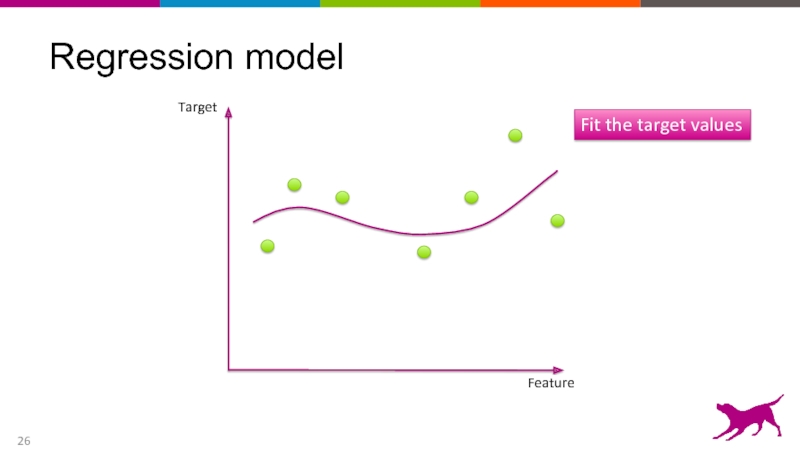

- 26. Regression model

- 27. Visualizing Feature Engineering

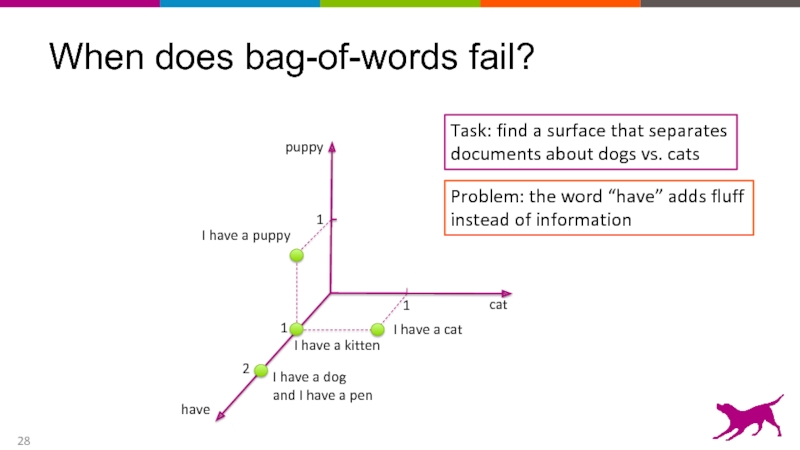

- 28. When does bag-of-words fail?

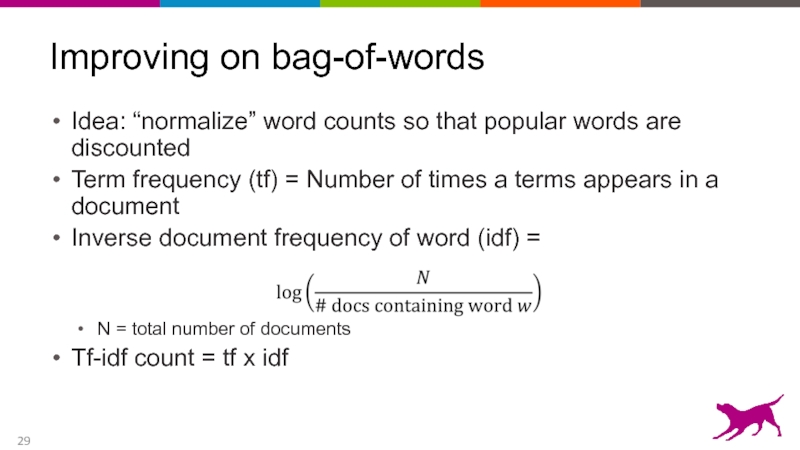

- 29. Improving on bag-of-words: “normalize” word counts

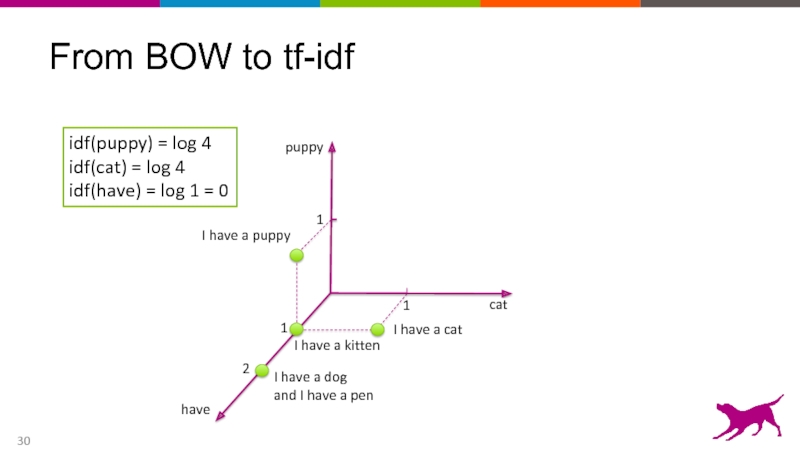

- 30. From BOW to tf-idf

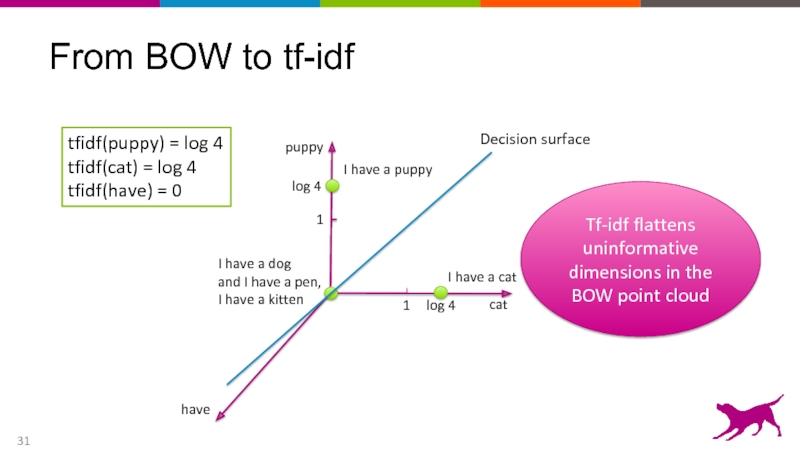

- 31. From BOW to tf-idf

- 32. Entry points of feature engineering

- 33. That’s not all, folks!

Slide 4: The machine learning pipeline

I fell in love the instant I laid

(Diagram: raw data → features)
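The pipeline turns raw data into features, and a model then consumes those features. Below is a minimal Python sketch of that flow. It assumes a toy word-count feature extractor and a hand-picked linear scorer. The function names, vocabulary, and weights are all hypothetical and do not come from the talk:

```python
def extract_features(raw_text, vocabulary):
    """Raw data -> features: count how often each vocabulary word occurs."""
    tokens = raw_text.lower().replace(".", "").split()
    return [tokens.count(word) for word in vocabulary]


def linear_model(features, weights, bias):
    """Features -> prediction: a hypothetical linear scorer (True = 'puppy')."""
    score = sum(w * x for w, x in zip(features, weights)) + bias
    return score > 0


vocabulary = ["puppy", "cute", "cat"]
weights = [1.0, 0.5, -1.0]  # hypothetical, hand-picked weights
bias = -0.5

raw = "It is a puppy and it is extremely cute."
features = extract_features(raw, vocabulary)
print(features)                                # [1, 1, 0]
print(linear_model(features, weights, bias))   # True
```

The model never sees the raw string, only the vector `[1, 1, 0]`. That is why the choice of features limits what any downstream model can learn.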

Slide 6: Representing natural text

Raw text: “It is a puppy and it is extremely cute.”

What’s …

Classify: puppy or not?

Slide 7: Representing natural text

Raw text: “It is a puppy and it is extremely cute.”

Classify: puppy or not?

Raw text → sparse vector representation
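A sparse bag-of-words vector stores only the words that actually occur. Every other vocabulary entry is an implicit zero. Below is a minimal sketch using a `{index: count}` dictionary. The tokenizer and the nine-word vocabulary are hypothetical simplifications; a real vocabulary holds tens of thousands of words, so most entries are zero:

```python
from collections import Counter


def tokenize(text):
    """Lowercase and strip basic punctuation; a deliberately simple tokenizer."""
    for ch in ".,!?":
        text = text.replace(ch, "")
    return text.lower().split()


def sparse_bow(text, vocabulary):
    """Return {vocabulary index: count} for vocabulary words present in text.

    Words outside the vocabulary are ignored; absent words are implicit zeros.
    """
    index = {word: i for i, word in enumerate(vocabulary)}
    counts = Counter(tokenize(text))
    return {index[w]: c for w, c in counts.items() if w in index}


# Hypothetical vocabulary, sorted alphabetically.
vocabulary = ["a", "and", "cat", "cute", "extremely", "is", "it", "puppy", "the"]
vec = sparse_bow("It is a puppy and it is extremely cute.", vocabulary)
print(sorted(vec.items()))
# [(0, 1), (1, 1), (3, 1), (4, 1), (5, 2), (6, 2), (7, 1)]
```

The entries for *cat* (index 2) and *the* (index 8) are never stored.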

Slide 8: Representing images

Image source: “Recognizing and learning object categories,” Li Fei-Fei, Rob …

Raw image: millions of RGB triplets, one for each pixel.
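Treating the raw image as a feature vector means concatenating every pixel's (r, g, b) triplet. Each pixel therefore adds three dimensions. Below is a minimal sketch on a hypothetical 2 × 2 image stored as nested lists:

```python
def flatten_rgb(image):
    """Flatten a rows x cols grid of (r, g, b) triplets into one feature vector.

    The result has 3 * rows * cols entries, ordered row by row, then pixel by
    pixel within a row, then channel (r, g, b).
    """
    return [channel for row in image for pixel in row for channel in pixel]


# A hypothetical 2 x 2 image; a real photo has millions of pixels.
tiny_image = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (255, 255, 255)],
]
vector = flatten_rgb(tiny_image)
print(len(vector))   # 12
print(vector[:6])    # [255, 0, 0, 0, 255, 0]

# Dimension of the raw feature space for a 1000 x 1000 photo:
print(3 * 1000 * 1000)  # 3000000
```

A one-megapixel photo therefore lives in a three-million-dimensional raw feature space.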

Slide 9: Representing images

Raw image → deep learning features (example values shown on the slide): 3.29, -15, -5.24, 48.3, 1.36, 47.1, -1.92, 36.5, 2.83, 95.4, -19, -89, 5.09, 37.8

Dense vector representation

Slide 10: Feature space in machine learning

Raw data → high-dimensional vectors

Collection of

Model = geometric summary of point cloud

Feature engineering = creating features of the appropriate granularity for the task
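A collection of feature vectors forms a point cloud, and a model summarizes that cloud geometrically. The sketch below uses the simplest summaries there are: the centroid (mean point) and the average distance to it. These are far cruder than a classifier or clustering model and are chosen only for illustration. The data points are hypothetical:

```python
import math


def centroid(points):
    """Mean of a list of equal-length vectors: the simplest geometric summary."""
    n = len(points)
    return [sum(coords) / n for coords in zip(*points)]


def mean_distance_to(points, center):
    """Average Euclidean distance from each point to center (a spread measure)."""
    return sum(math.dist(p, center) for p in points) / len(points)


# Hypothetical 3-D feature vectors forming a small point cloud.
cloud = [
    [1.0, 0.0, 2.0],
    [3.0, 2.0, 0.0],
    [2.0, 1.0, 1.0],
    [2.0, 1.0, 1.0],
]
center = centroid(cloud)
print(center)                                    # [2.0, 1.0, 1.0]
print(round(mean_distance_to(cloud, center), 4))  # 0.866
```

`math.dist` requires Python 3.8 or later.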

Slide 11: Crudely speaking, mathematicians fall into two categories: the algebraists, who find …

Slide 16: Why are we looking at spheres?


Poincaré Conjecture: all physical objects without holes is …

Slide 17: The power of higher dimensions

A sphere in 4D can model the

Point clouds = approximate geometric shapes

High dimensional features can model many things
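The sphere slides extend $x^2 + y^2 = 1$ to more dimensions. The natural generalization is the unit sphere $x_1^2 + x_2^2 + \dots + x_n^2 = 1$. A point cloud can approximate such a shape even when we cannot picture it. The sketch below is an illustration, not taken from the talk. It samples points uniformly on the 4-D unit sphere by normalizing vectors of independent Gaussians, a standard technique. The function name and seed are hypothetical:

```python
import math
import random


def sample_unit_sphere(dim, n, seed=0):
    """Draw n points uniformly on the unit sphere in `dim` dimensions.

    Normalizing a vector of independent standard normals gives a direction
    that is uniformly distributed over the sphere.
    """
    rng = random.Random(seed)
    points = []
    for _ in range(n):
        v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        norm = math.sqrt(sum(x * x for x in v))
        points.append([x / norm for x in v])
    return points


cloud = sample_unit_sphere(dim=4, n=1000)
# Every sampled point satisfies x1^2 + x2^2 + x3^2 + x4^2 = 1 (up to rounding).
max_error = max(abs(sum(x * x for x in p) - 1.0) for p in cloud)
print(len(cloud), max_error < 1e-12)  # 1000 True
```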

Slide 19: The challenge of high-dimensional geometry

Feature space can have hundreds to

In high dimensions, our geometric imagination is limited

Algebra comes to our aid
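Algebra helps because geometric notions carry over unchanged as formulas. Distance generalizes Pythagoras to $\sqrt{\sum_i (u_i - v_i)^2}$, and angle follows from the dot product, $\cos\theta = u \cdot v / (\lVert u \rVert \lVert v \rVert)$. Below is a minimal sketch in 10,000 dimensions, with hypothetical vectors:

```python
import math


def euclidean_distance(u, v):
    """Pythagoras generalized: sqrt of the summed squared coordinate differences."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def angle_degrees(u, v):
    """Angle between u and v from the dot product: cos(theta) = u.v / (|u| |v|)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    cos_theta = max(-1.0, min(1.0, dot / (norm_u * norm_v)))  # guard rounding
    return math.degrees(math.acos(cos_theta))


dim = 10_000  # far beyond what we can picture
u = [0.0] * dim
v = [0.0] * dim
u[0], u[1] = 3.0, 4.0      # hypothetical coordinates
v[0], v[9_999] = 3.0, 4.0

print(euclidean_distance(u, v))       # sqrt(32)
print(round(angle_degrees(u, v), 2))  # angle whose cosine is 9/25
```

Neither function cares whether `dim` is 2 or 10,000.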

Slide 28: When does bag-of-words fail?

(Figure: documents plotted as bag-of-words vectors on axes *puppy*, *cat*, *have*, with word counts of 1 and 2.)

Task: find a surface that separates documents about …

Problem: the word “have” adds fluff instead of information.
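"Finding a surface that separates documents" can be made concrete with the classic perceptron, which searches for a hyperplane $w \cdot x + b = 0$. This is a swapped-in technique chosen for illustration; the talk does not say which classifier it uses. The BOW vectors and labels below are hypothetical:

```python
def train_perceptron(vectors, labels, epochs=20):
    """Classic perceptron: find w, b with sign(w.x + b) matching labels (+1/-1).

    Stops early once a full pass makes no mistakes; converges only if the
    classes are linearly separable.
    """
    w = [0.0] * len(vectors[0])
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, y in zip(vectors, labels):
            score = sum(wi * xi for wi, xi in zip(w, x)) + b
            if y * score <= 0:  # misclassified, or exactly on the surface
                w = [wi + y * xi for wi, xi in zip(w, x)]
                b += y
                mistakes += 1
        if mistakes == 0:
            break
    return w, b


# Hypothetical BOW vectors over the axes (puppy, cat, have).
vectors = [[1, 0, 1], [2, 0, 1], [0, 1, 1], [0, 2, 1]]
labels = [+1, +1, -1, -1]  # +1 = about puppies, -1 = about cats
w, b = train_perceptron(vectors, labels)
print(w, b)  # [1.0, -1.0, 0.0] 0.0
```

In this toy run the learned weight on *have* ends at 0. Every document contains the word, so it does not help separate the classes. The perceptron does not guarantee this in general.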

Slide 29: Improving on bag-of-words

Idea: “normalize” word counts so that popular words are

Term frequency (tf) = number of times a term appears in a document

Inverse document frequency of word $w$: $\text{idf}(w) = \log \dfrac{N}{\text{number of documents containing } w}$

where $N$ = total number of documents

Tf-idf count = tf × idf
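A worked example of the idf formula, assuming a hypothetical collection of $N = 4$ documents. The counts are chosen to match the $\log 4$ values on slide 31:

$$
\begin{aligned}
\text{idf}(w) &= \log \frac{N}{\text{number of documents containing } w} \\
\text{word in 1 of the 4 documents:} \quad \text{idf} &= \log \tfrac{4}{1} = \log 4 \\
\text{word in all 4 documents:} \quad \text{idf} &= \log \tfrac{4}{4} = \log 1 = 0
\end{aligned}
$$

A word that occurs once in a document (tf = 1) gets tf-idf $\log 4$ in the first case and $0$ in the second. A word that appears everywhere is weighted down to nothing.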

Slide 31: From BOW to tf-idf

(Figure: the same documents replotted with tf-idf weights on axes *puppy*, *cat*, *have*.)

tfidf(puppy) = log 4

tfidf(cat) = log 4

tfidf(have) = …

Tf-idf flattens uninformative dimensions in the BOW point cloud
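The flattening effect can be checked directly. The minimal sketch below uses the slide 29 definitions (tf = raw count, idf = log(N / document frequency), natural log; the base only rescales). It runs them on a hypothetical four-document corpus in which *puppy* and *cat* each appear once and *have* appears in every document. Library implementations often smooth idf differently, so their numbers would not match this exactly:

```python
import math
from collections import Counter


def tokenize(text):
    return text.lower().split()


def tfidf_vectors(docs, vocabulary):
    """Bag-of-words counts and tf-idf weights over a fixed vocabulary.

    tf(w, d) = count of w in d
    idf(w)   = log(N / number of documents containing w)
    tfidf    = tf * idf
    """
    counts = [Counter(tokenize(d)) for d in docs]
    n_docs = len(docs)
    idf = {}
    for w in vocabulary:
        df = sum(1 for c in counts if c[w] > 0)
        # Assumption: a word that never occurs gets idf 0 (avoids dividing by 0).
        idf[w] = math.log(n_docs / df) if df else 0.0
    bow = [[c[w] for w in vocabulary] for c in counts]
    tfidf = [[c[w] * idf[w] for w in vocabulary] for c in counts]
    return bow, tfidf


docs = ["I have a puppy", "I have a cat", "I have a hat", "I have a hamster"]
vocabulary = ["puppy", "cat", "have"]
bow, tfidf = tfidf_vectors(docs, vocabulary)
for doc, b, t in zip(docs, bow, tfidf):
    print(f"{doc!r:20} BOW={b}  tf-idf={[round(x, 3) for x in t]}")
```

In BOW space every document sits at *have* = 1. After tf-idf the whole cloud collapses onto *have* = 0, while *puppy* and *cat* keep weight $\log 4$.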

Slide 32: Entry points of feature engineering

Start from data and task

What’s the best

Start from modeling method

What kind of features does k-means assume?

What does linear regression assume about the data?
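On the k-means question: k-means assigns each point to the nearest centroid by Euclidean distance, so it implicitly assumes that straight-line distance in feature space reflects similarity. Below is a minimal sketch of Lloyd's algorithm, the standard k-means procedure. The first-k-points initialization and the data are simplifying assumptions:

```python
import math


def kmeans(points, k, iterations=20):
    """Lloyd's algorithm: alternate nearest-centroid assignment and mean update.

    Initialization uses the first k points (a simplification; practical
    implementations typically use random or k-means++ seeding).
    """
    centroids = [list(p) for p in points[:k]]
    clusters = []
    for _ in range(iterations):
        # Assignment step: each point joins the centroid nearest in Euclidean distance.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda j: math.dist(p, centroids[j]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster.
        new_centroids = []
        for j, members in enumerate(clusters):
            if members:
                new_centroids.append([sum(c) / len(members) for c in zip(*members)])
            else:
                new_centroids.append(centroids[j])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break
        centroids = new_centroids
    return centroids, clusters


points = [[0.0, 0.0], [10.0, 10.0], [0.0, 1.0], [1.0, 0.0], [10.0, 11.0], [11.0, 10.0]]
centroids, clusters = kmeans(points, k=2)
print(centroids)
```

Because only Euclidean distance matters, a feature measured on a much larger scale than the others will dominate the clustering. This is one reason feature scaling matters before running k-means.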

Slide 33: That’s not all, folks!

There’s a lot more to feature engineering:

Feature normalization

Feature

“Regularizing” models

Learning the right features
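The slide only names feature normalization. One common form is standardization, which rescales each feature to mean 0 and standard deviation 1. Below is a minimal sketch of that technique; the talk does not say which normalization it has in mind. The two-feature data is hypothetical:

```python
import math


def standardize_columns(rows):
    """Rescale each feature (column) to mean 0 and standard deviation 1.

    Uses the population standard deviation; constant columns map to 0 to
    avoid dividing by zero.
    """
    n = len(rows)
    columns = list(zip(*rows))
    means = [sum(col) / n for col in columns]
    stds = [math.sqrt(sum((x - m) ** 2 for x in col) / n) for col, m in zip(columns, means)]
    return [
        [(x - m) / s if s > 0 else 0.0 for x, m, s in zip(row, means, stds)]
        for row in rows
    ]


# Hypothetical features on very different scales: (word count, document length in bytes).
rows = [[1.0, 1000.0], [2.0, 3000.0], [3.0, 5000.0]]
for row in standardize_columns(rows):
    print([round(x, 3) for x in row])
```

After standardization both columns lie on the same scale. A distance-based method such as k-means then no longer lets the byte-length feature dominate.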

Dato is hiring! jobs@dato.com

alicez@dato.com @RainyData