- Главная

- Разное

- Дизайн

- Бизнес и предпринимательство

- Аналитика

- Образование

- Развлечения

- Красота и здоровье

- Финансы

- Государство

- Путешествия

- Спорт

- Недвижимость

- Армия

- Графика

- Культурология

- Еда и кулинария

- Лингвистика

- Английский язык

- Астрономия

- Алгебра

- Биология

- География

- Детские презентации

- Информатика

- История

- Литература

- Маркетинг

- Математика

- Медицина

- Менеджмент

- Музыка

- МХК

- Немецкий язык

- ОБЖ

- Обществознание

- Окружающий мир

- Педагогика

- Русский язык

- Технология

- Физика

- Философия

- Химия

- Шаблоны, картинки для презентаций

- Экология

- Экономика

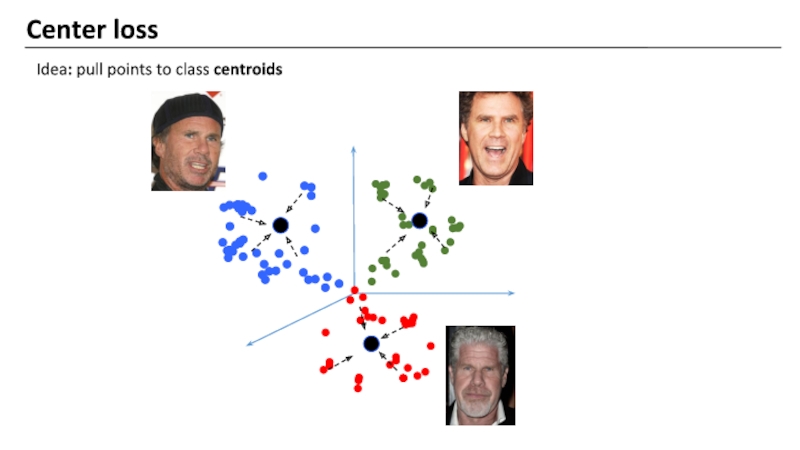

- Юриспруденция

Face Recognition: From Scratch to Hatch (presentation)

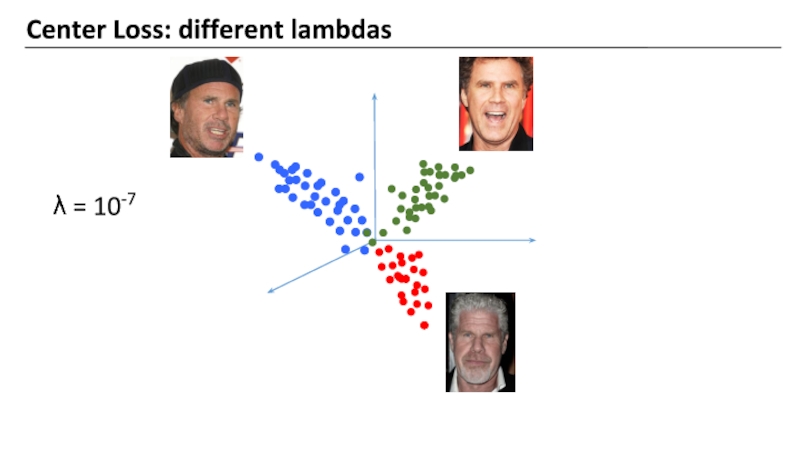

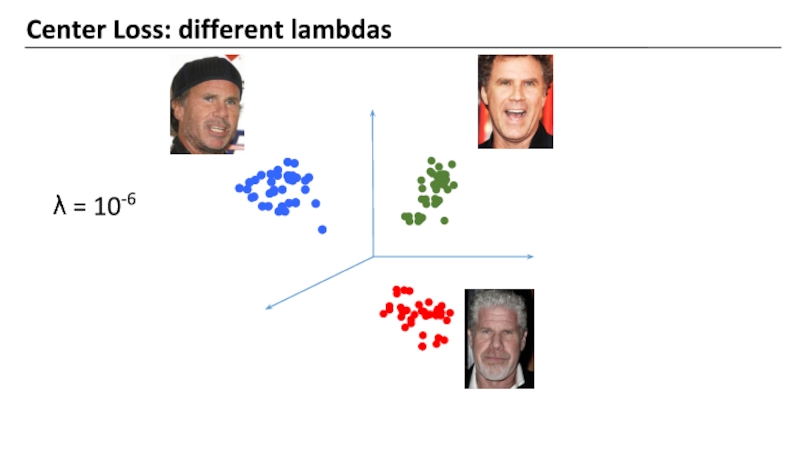

Contents

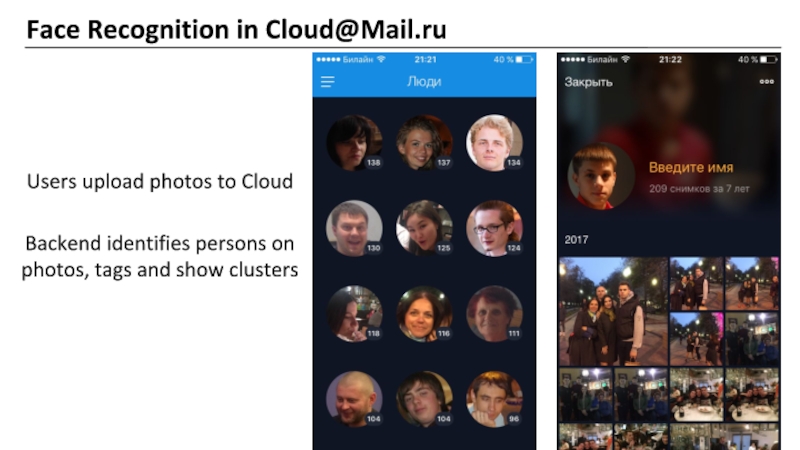

- 2. Face Recognition in Cloud@Mail.ru Users upload

- 3. Social networks

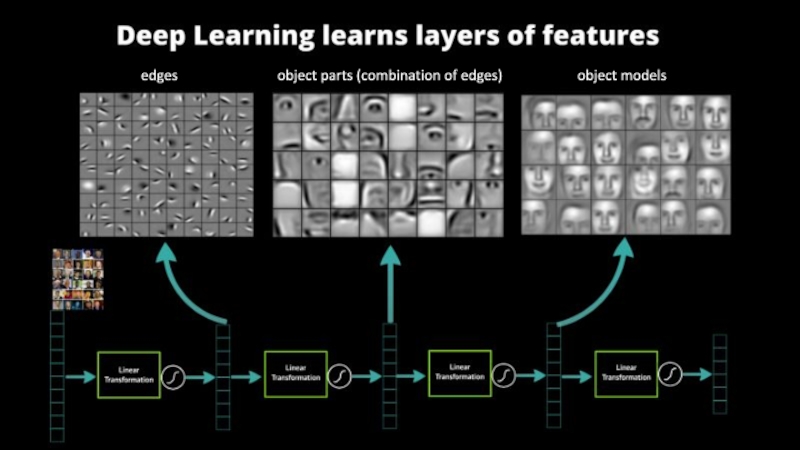

- 5. edges object parts (combination of edges) object models

- 7. Face detection

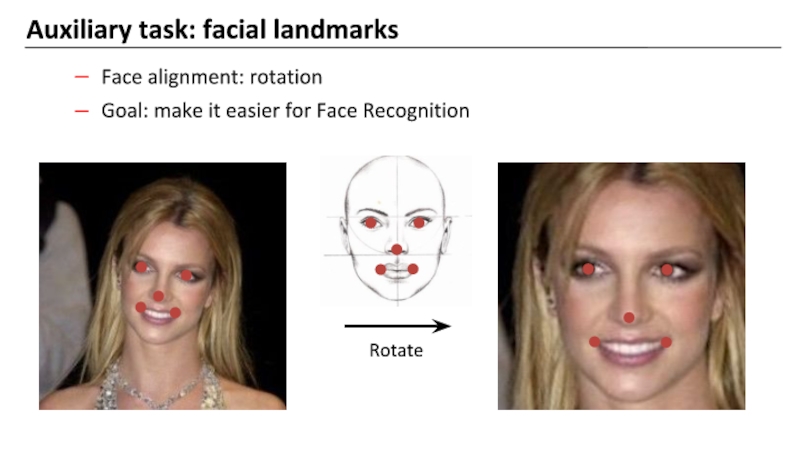

- 8. Auxiliary task: facial landmarks Face alignment: rotation Goal: make it easier for Face Recognition

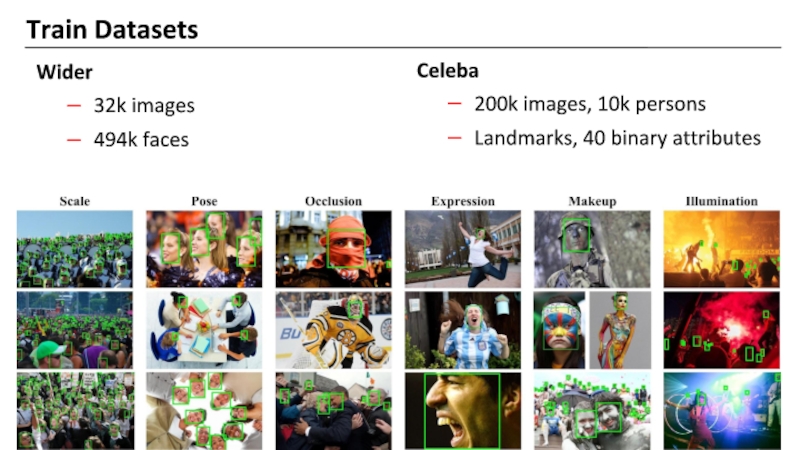

- 9. Train Datasets Wider 32k images 494k faces

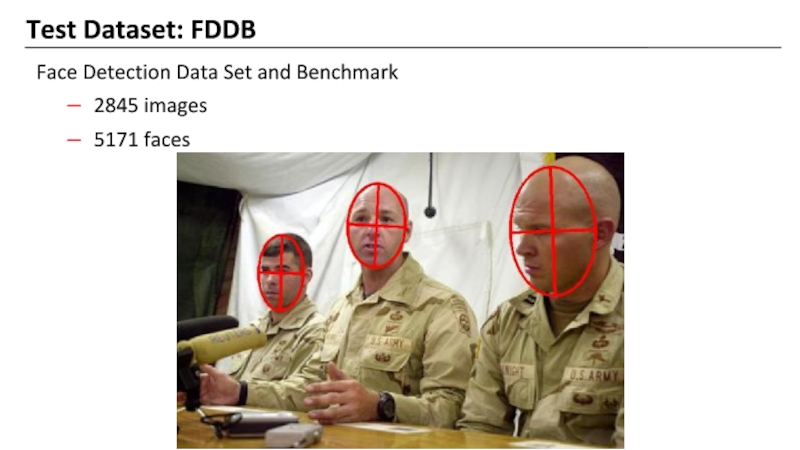

- 10. Test Dataset: FDDB Face Detection Data Set and Benchmark 2845 images 5171 faces

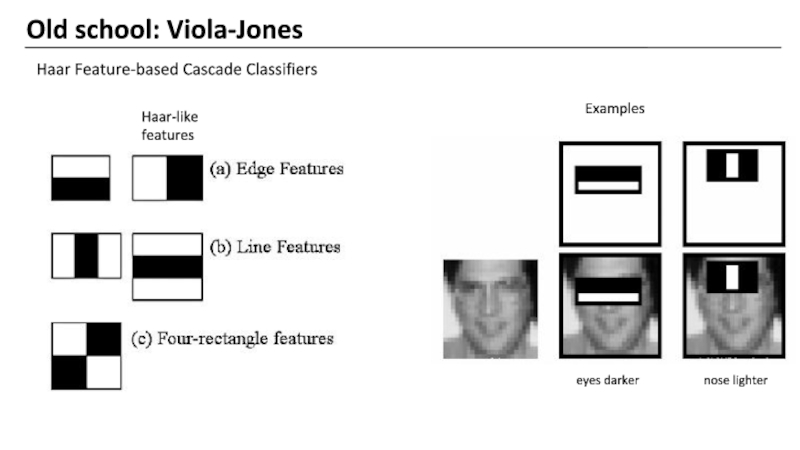

- 11. Old school: Viola-Jones Haar Feature-based Cascade Classifiers

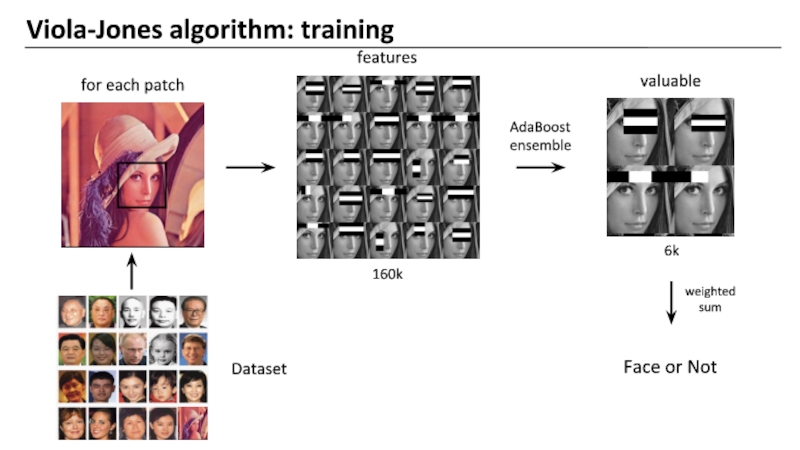

- 12. Viola-Jones algorithm: training Face or Not

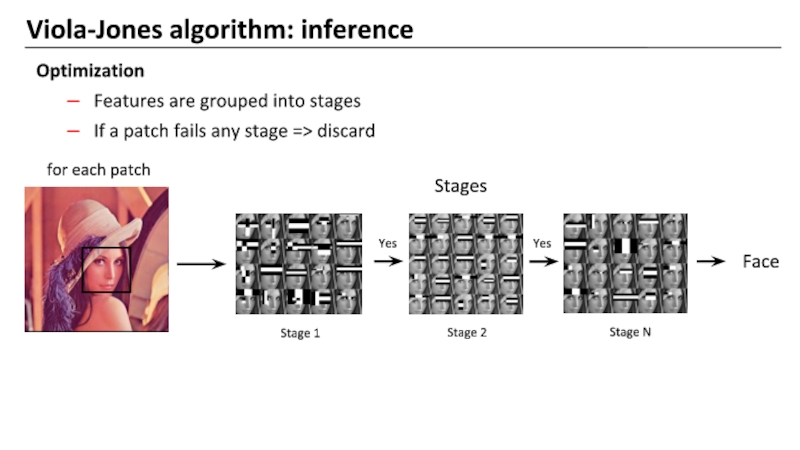

- 13. Viola-Jones algorithm: inference Stages Face Yes Yes

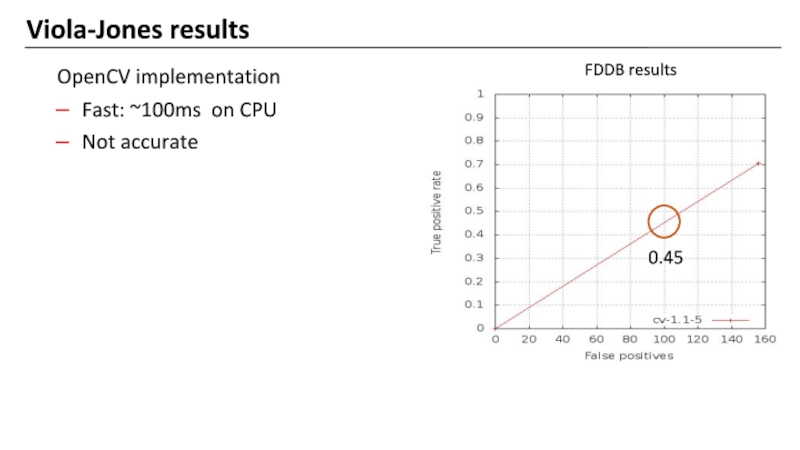

- 14. Viola-Jones results OpenCV implementation Fast: ~100ms on CPU Not accurate

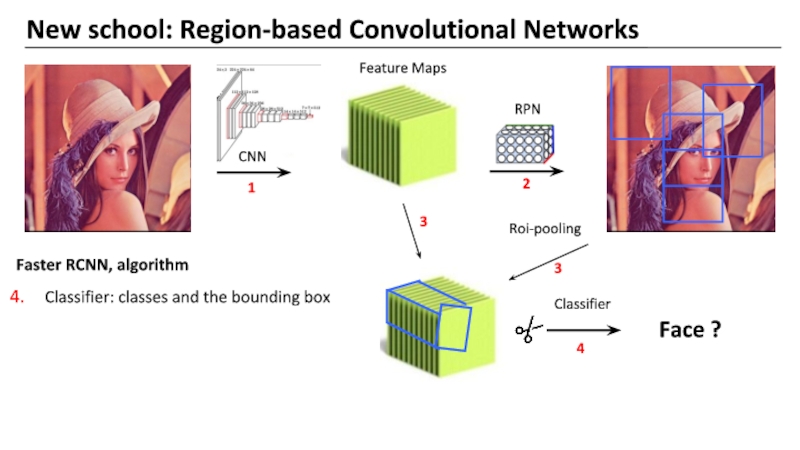

- 15. Pre-trained network: extracting features New school: Region-based

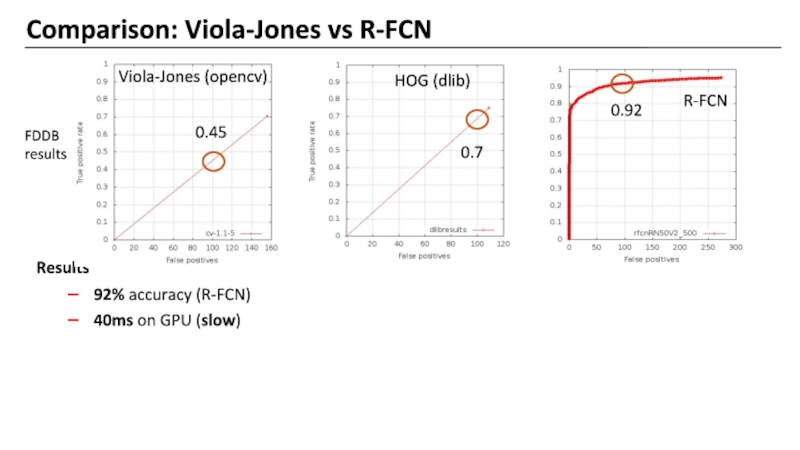

- 16. Comparison: Viola-Jones vs R-FCN Results 92% accuracy (R-FCN) FDDB results 40ms on GPU (slow)

- 17. Face detection: how fast We need faster

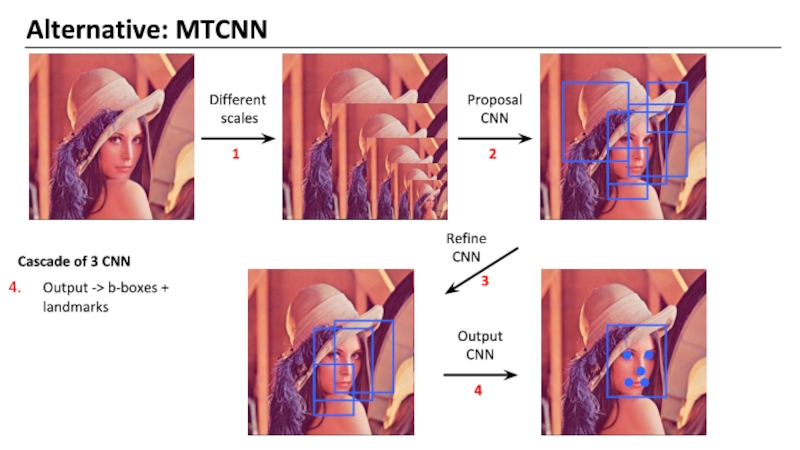

- 18. Alternative: MTCNN Cascade of 3 CNN Resize

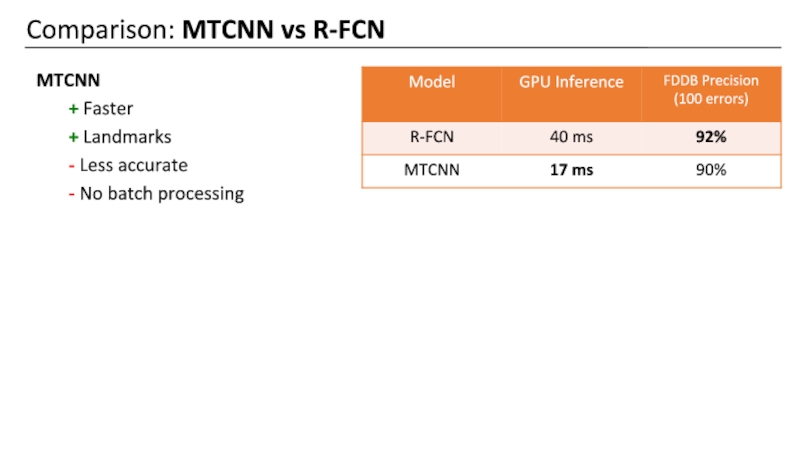

- 19. Comparison: MTCNN vs R-FCN MTCNN + Faster

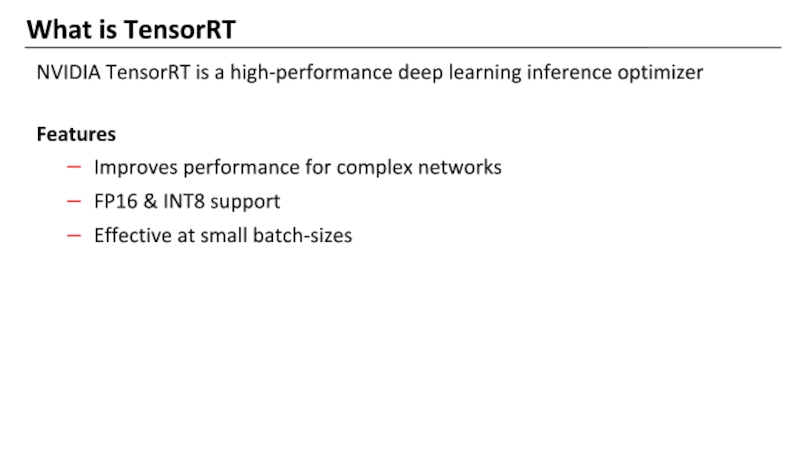

- 21. What is TensorRT NVIDIA TensorRT is a

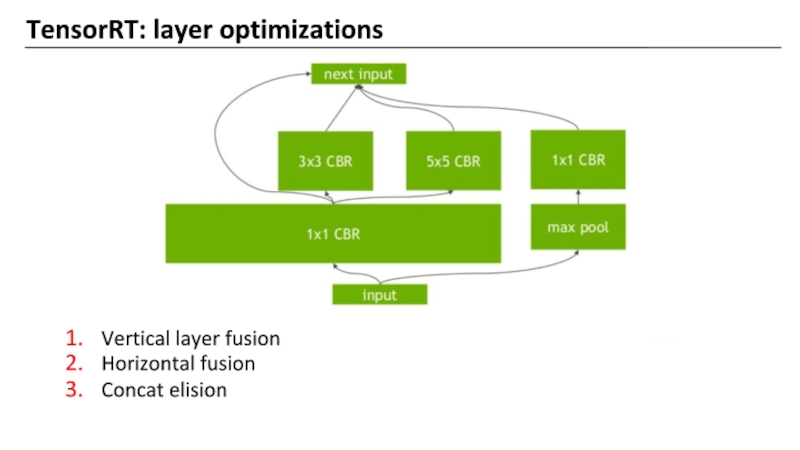

- 22. TensorRT: layer optimizations Horizontal fusion Concat elision Vertical layer fusion

- 23. TensorRT: downsides Caffe + TensorFlow supported Fixed input/batch size Basic layers support

- 24. Batch processing Problem Image size is fixed,

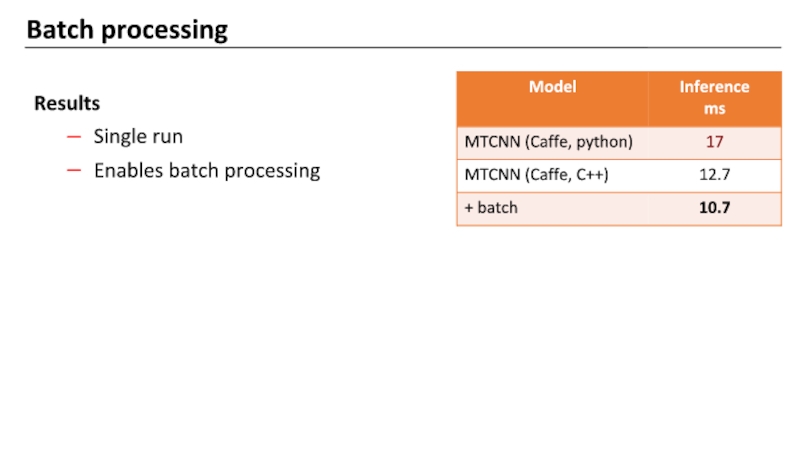

- 25. Batch processing Results Single run Enables batch processing

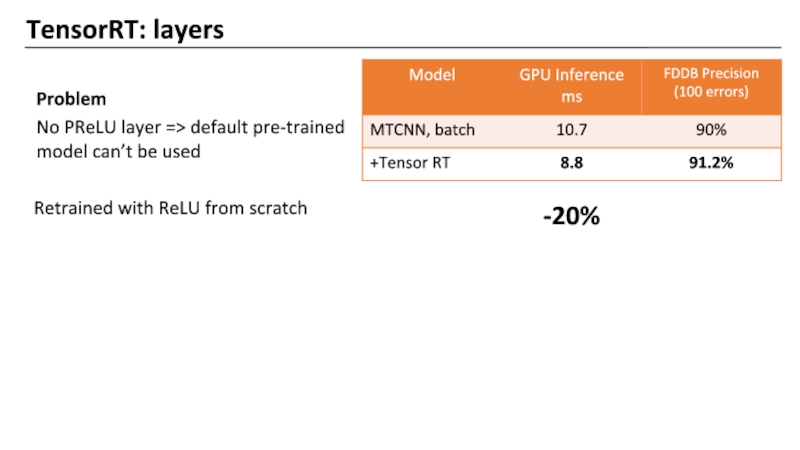

- 26. TensorRT: layers Problem No PReLU layer

- 27. Face detection: inference Target:

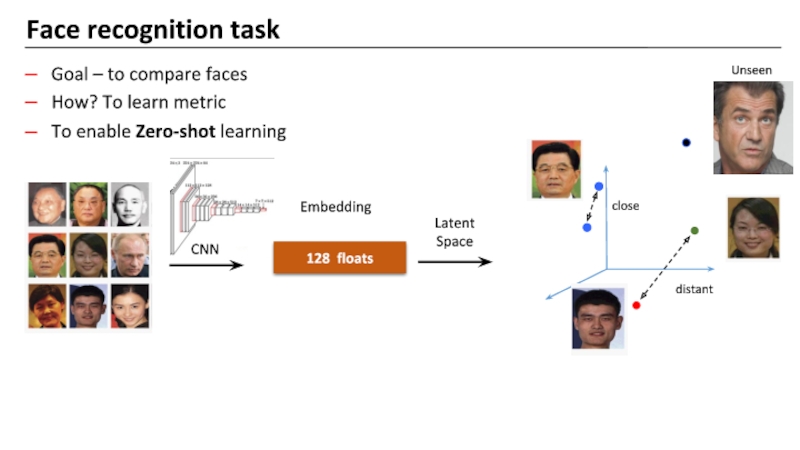

- 29. Face recognition task Goal – to compare

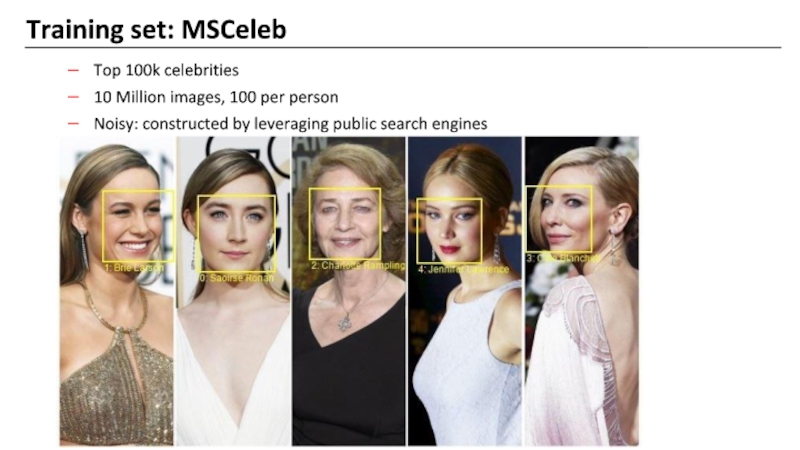

- 30. Training set: MSCeleb Top 100k celebrities 10

- 31. Small test dataset: LFW Labeled Faces in

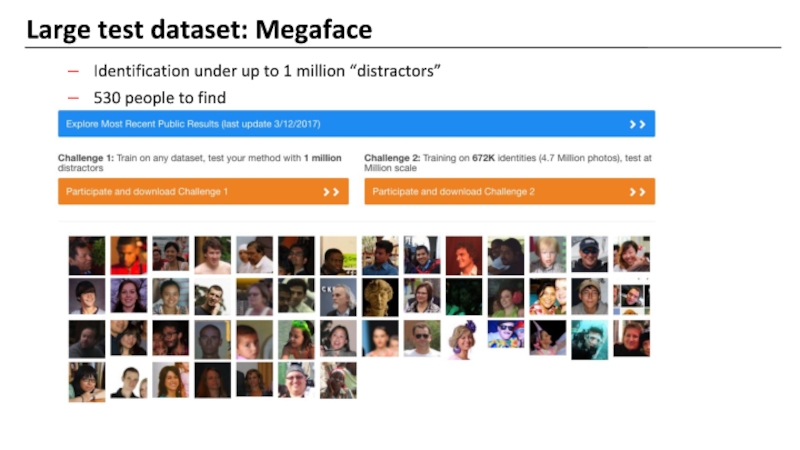

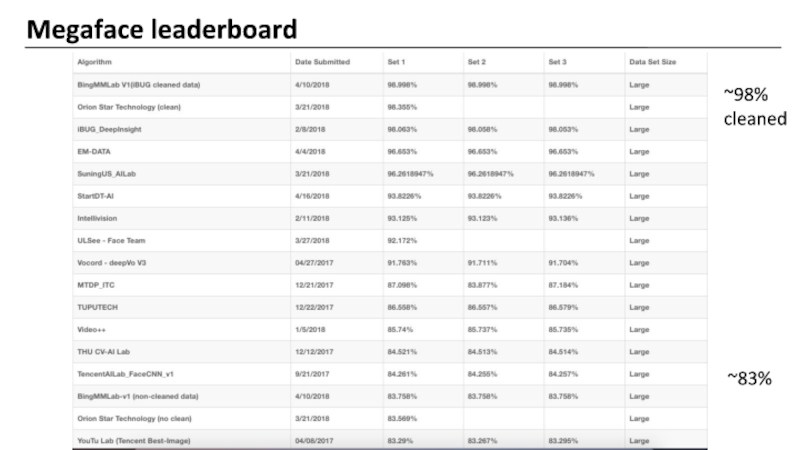

- 32. Large test dataset: Megaface Identification under up

- 33. Megaface leaderboard ~83% ~98% cleaned

- 34. Metric Learning

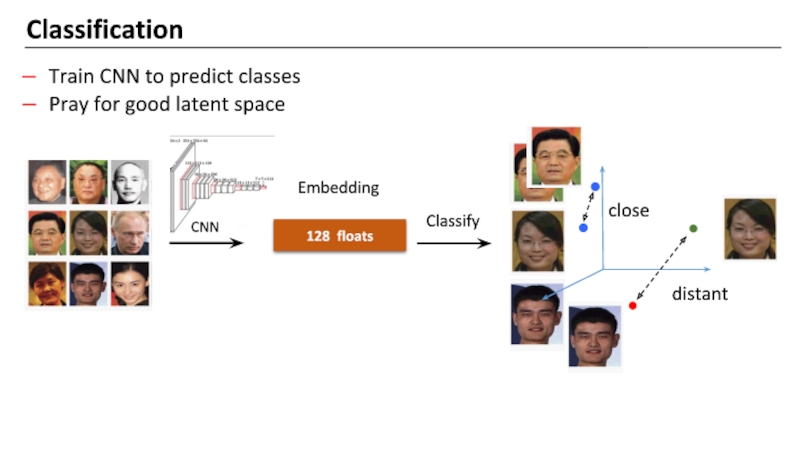

- 35. Classification Train CNN to predict classes Pray for good latent space

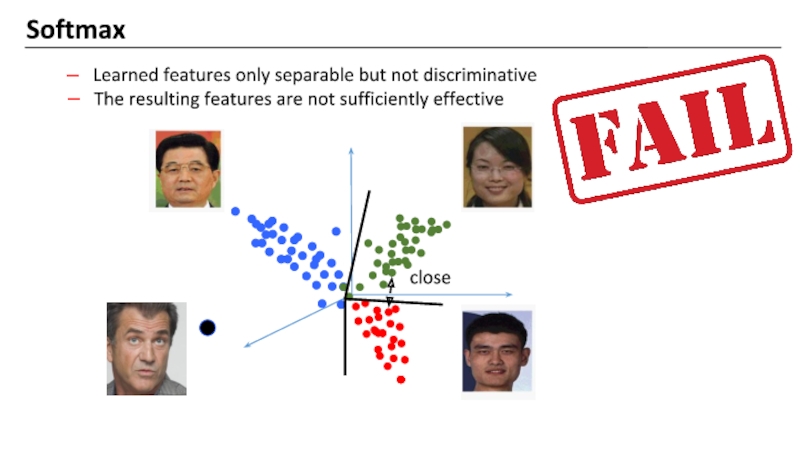

- 36. Softmax Learned features are only separable, not discriminative. The resulting features are not sufficiently effective

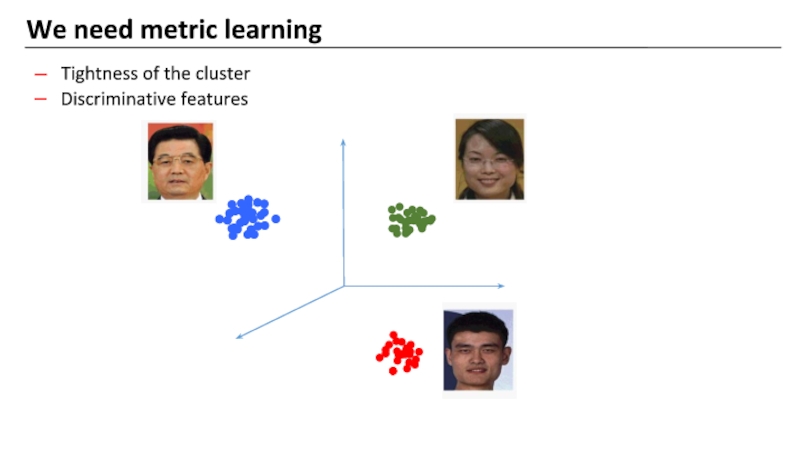

- 37. We need metric learning Tightness of the cluster Discriminative features

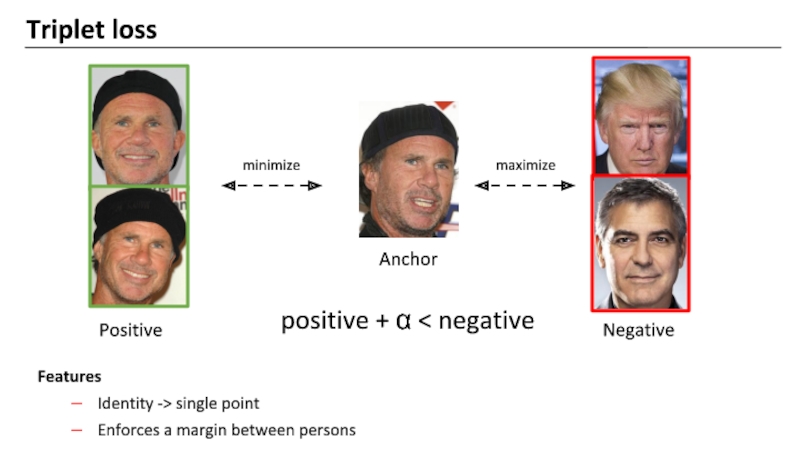

- 38. Triplet loss Features Identity -> single point

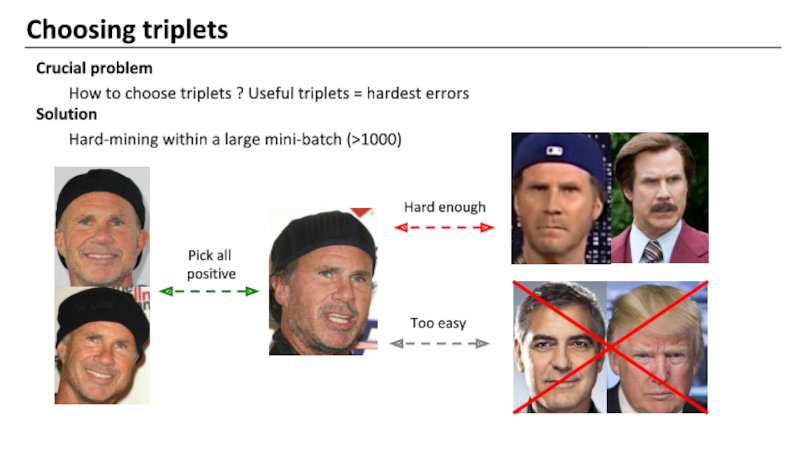

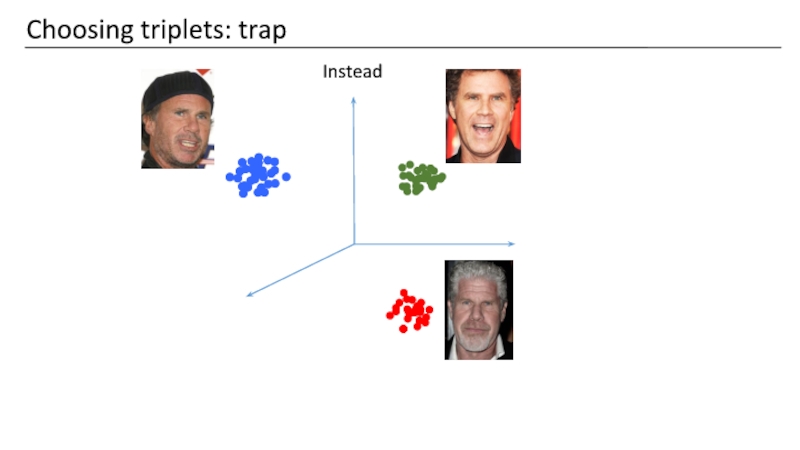

- 39. Choosing triplets Crucial problem How to choose

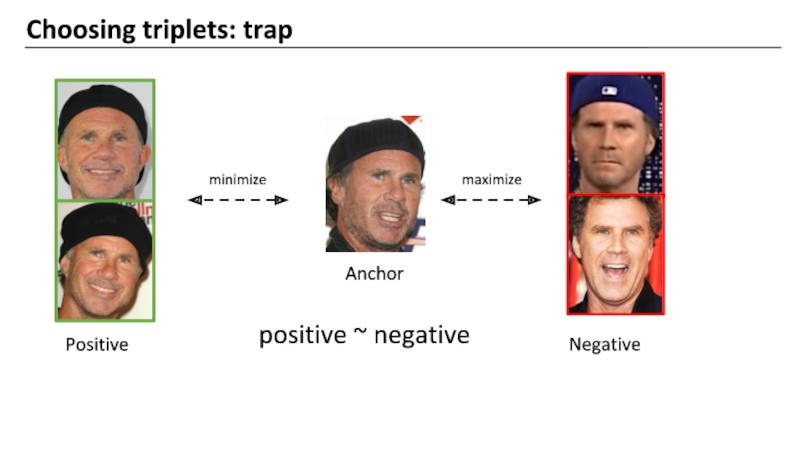

- 40. Choosing triplets: trap

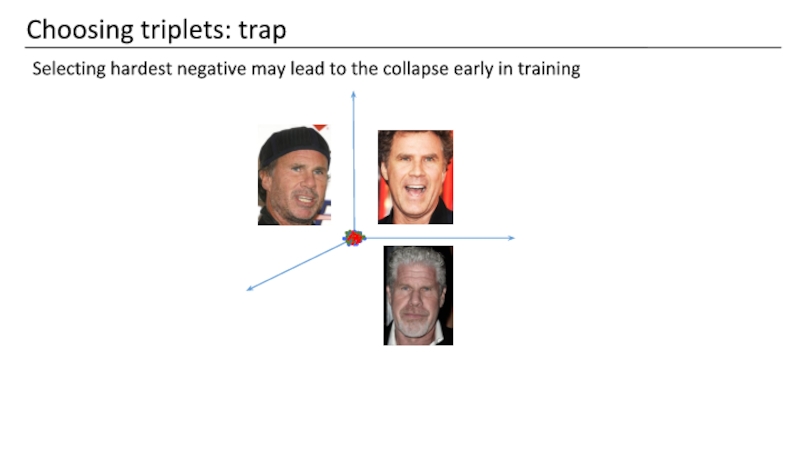

- 41. Choosing triplets: trap positive ~ negative

- 42. Choosing triplets: trap Instead

- 43. Choosing triplets: trap Selecting the hardest negatives may lead to collapse early in training

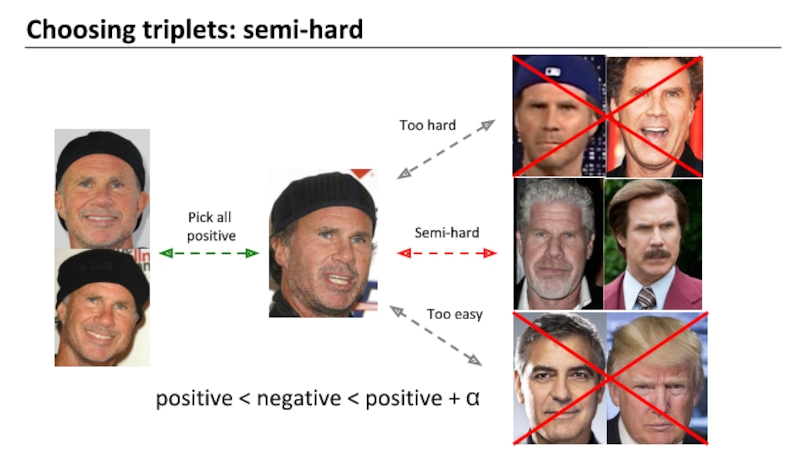

- 44. Choosing triplets: semi-hard positive < negative < positive + α

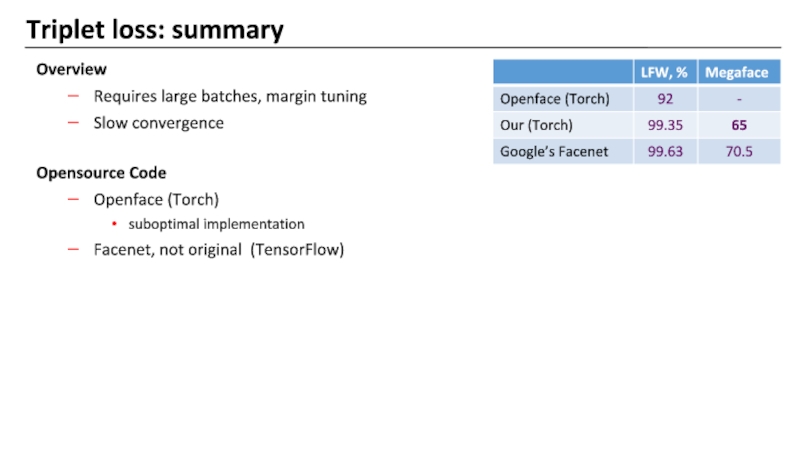

- 45. Triplet loss: summary Overview Requires large batches,

- 46. Center loss Idea: pull points to class

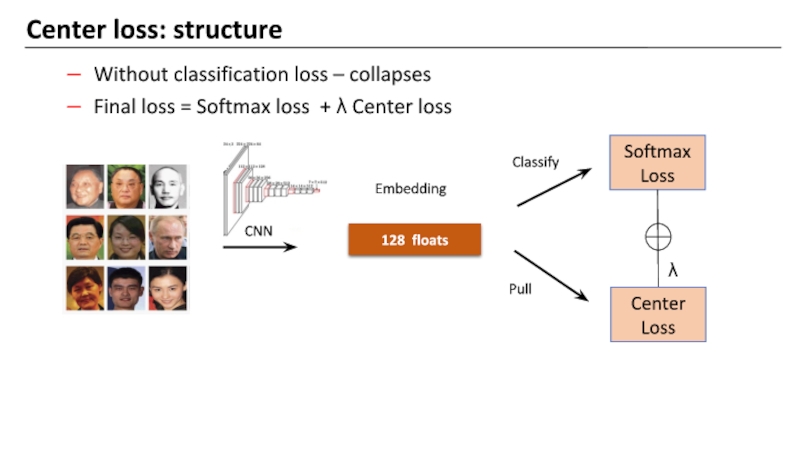

- 47. Center loss: structure Without classification loss –

- 48. Center Loss: different lambdas λ = 10⁻⁷

- 49. Center Loss: different lambdas λ = 10⁻⁶

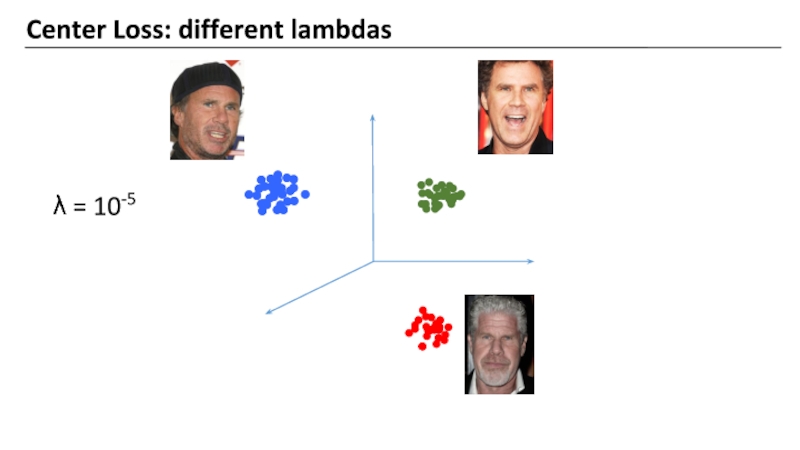

- 50. Center Loss: different lambdas λ = 10⁻⁵

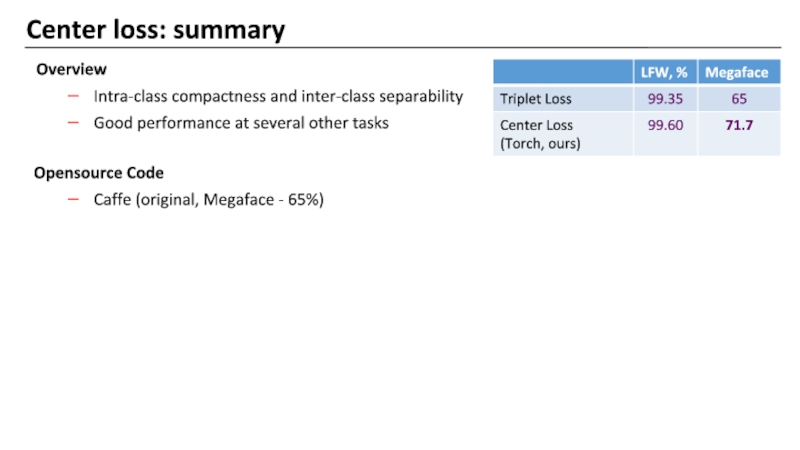

- 51. Center loss: summary Overview Intra-class compactness and

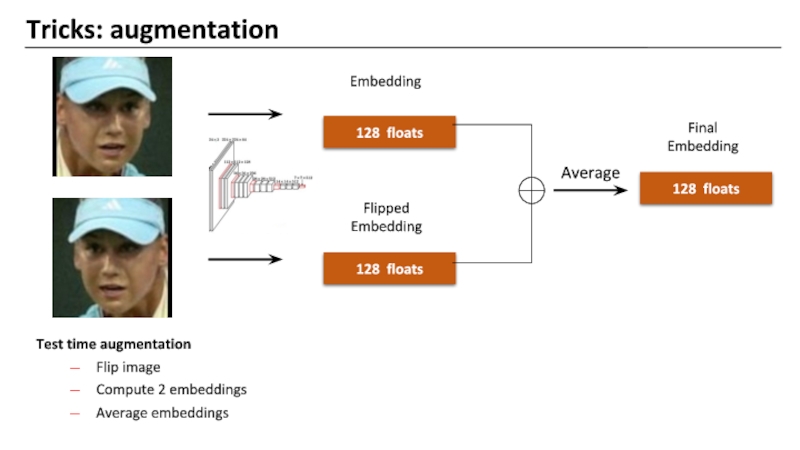

- 52. Tricks: augmentation Test time augmentation Flip image Average embeddings Compute 2 embeddings

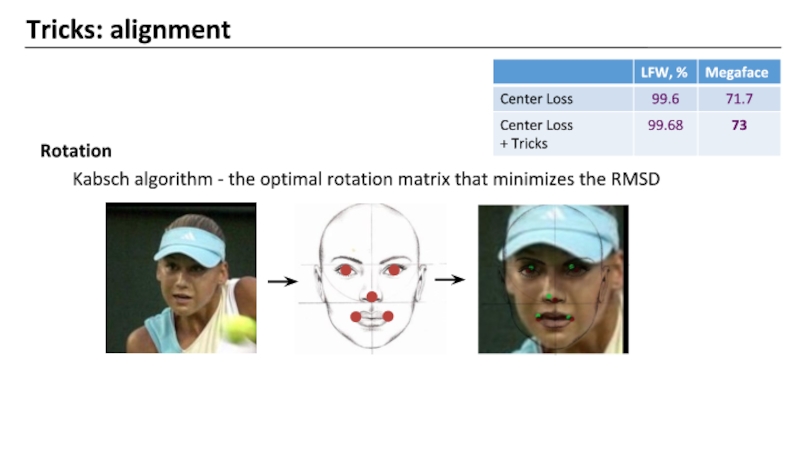

- 53. Tricks: alignment Rotation Kabsch algorithm - the optimal rotation matrix that minimizes the RMSD

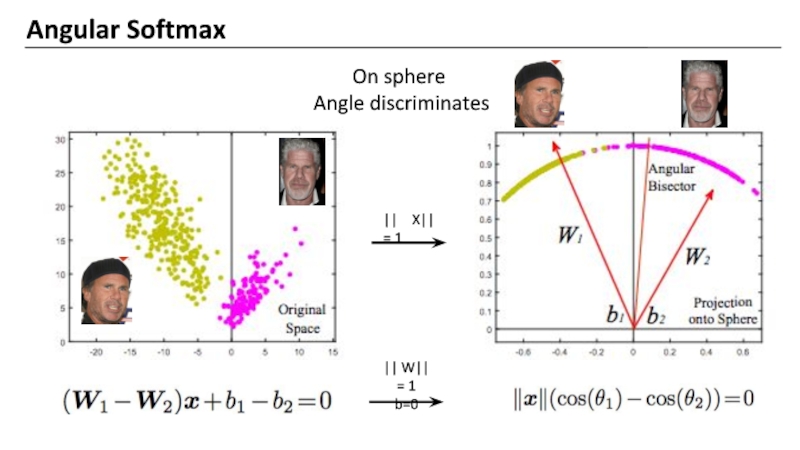

- 54. Angular Softmax On sphere Angle discriminates

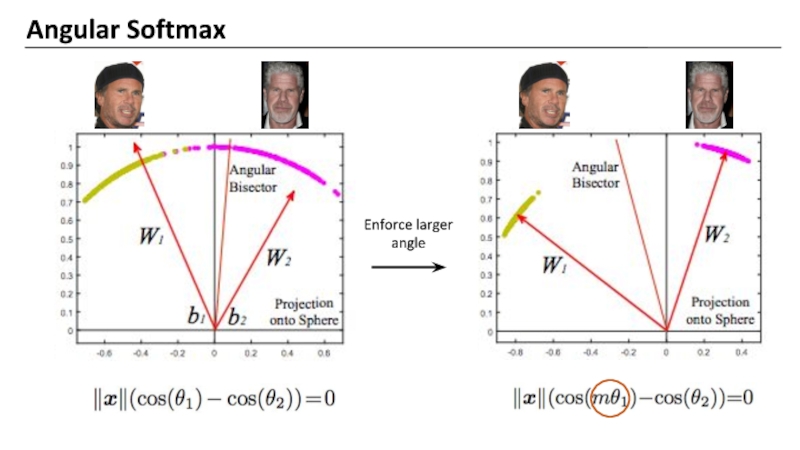

- 55. Angular Softmax

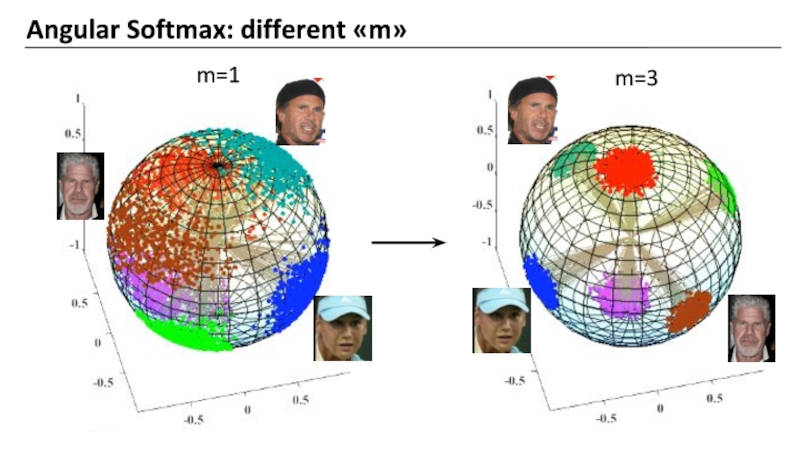

- 56. Angular Softmax: different «m»

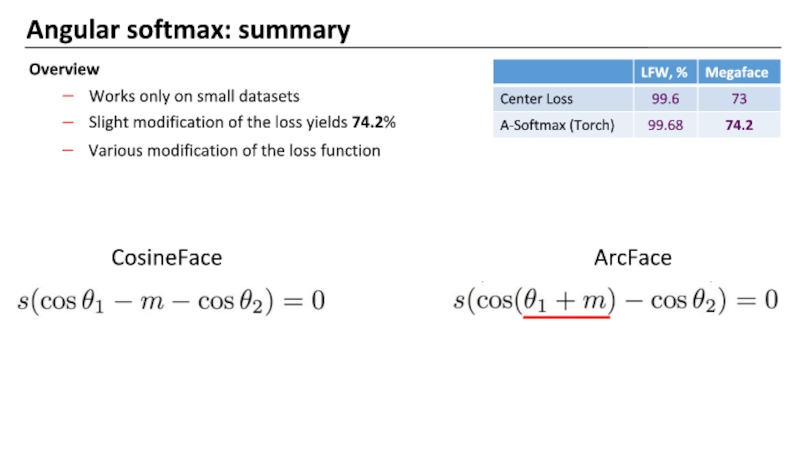

- 57. Angular softmax: summary Overview Works only on

- 58. Metric learning: summary Softmax < Triplet <

- 59. Fighting errors

- 60. Errors after MSCeleb: children

- 61. Errors after MSCeleb: Asian Problem

- 62. How to fix these errors? It’s

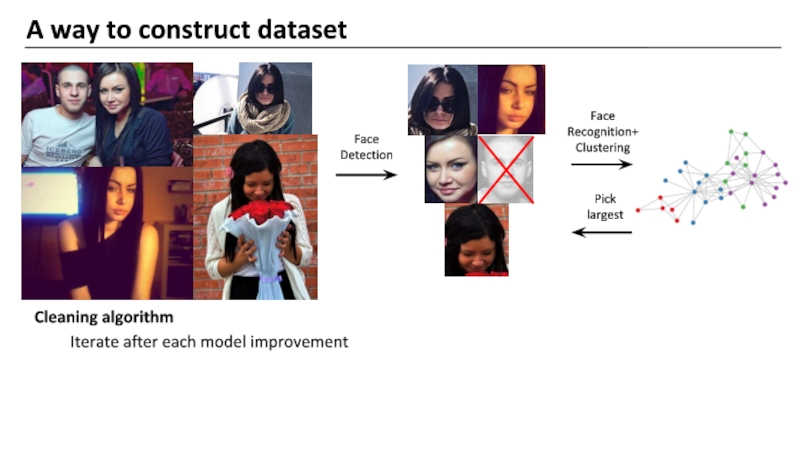

- 63. A way to construct dataset Cleaning

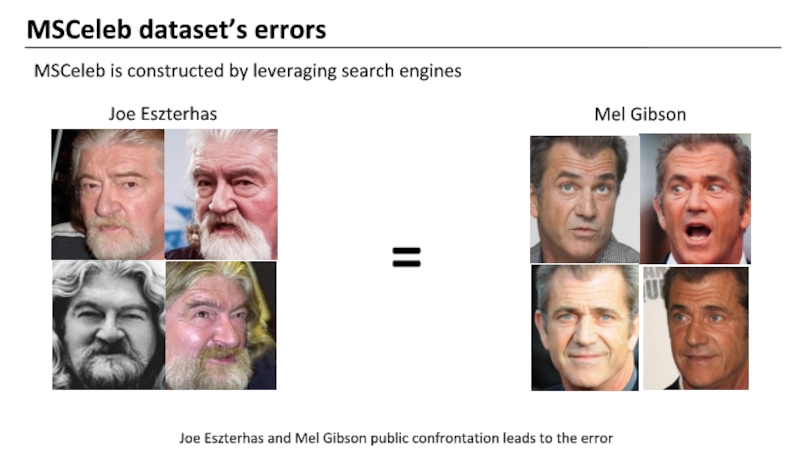

- 64. MSCeleb dataset’s errors MSCeleb is constructed by

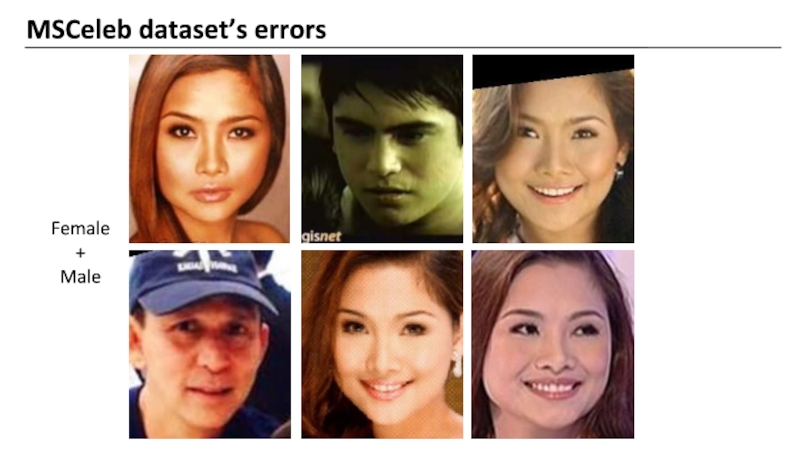

- 65. MSCeleb dataset’s errors Female + Male

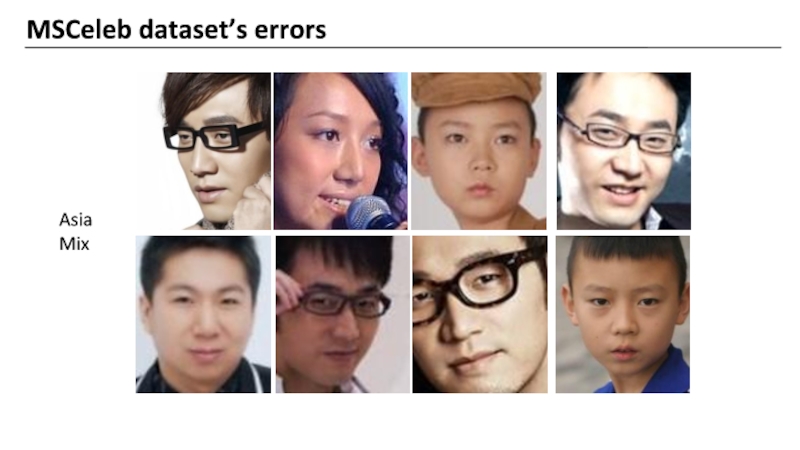

- 66. MSCeleb dataset’s errors Asia Mix

- 67. MSCeleb dataset’s errors Dataset has been shrunk

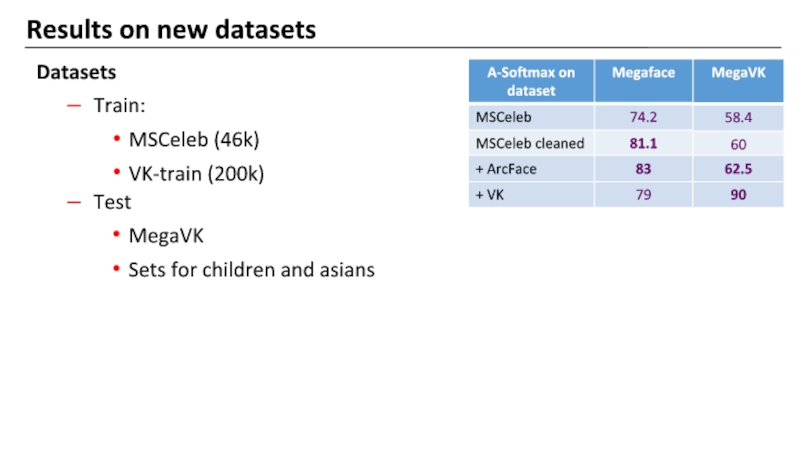

- 68. Results on new datasets Datasets Train: MSCeleb

- 69. How to handle big dataset It seems

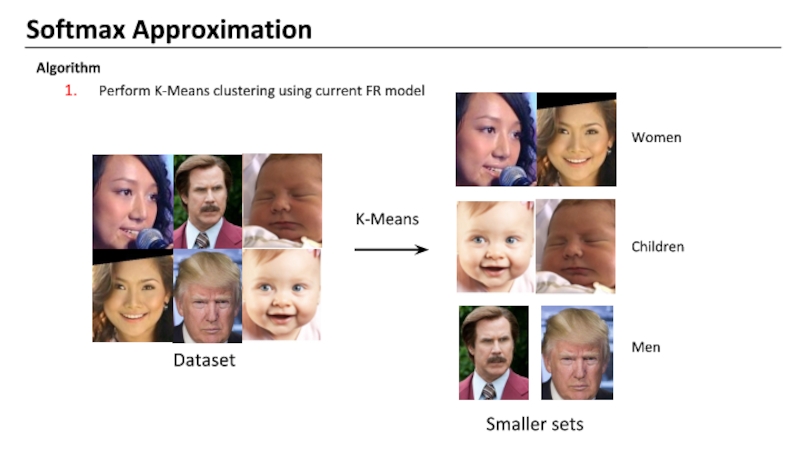

- 70. Softmax Approximation Algorithm Perform K-Means clustering using current FR model

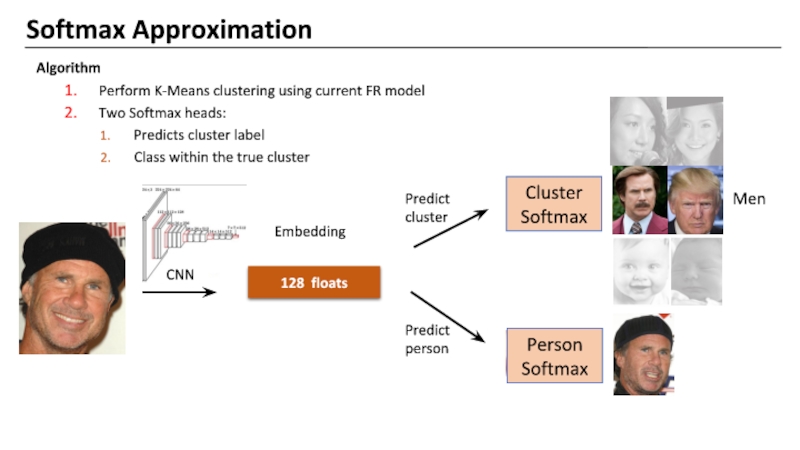

- 71. Softmax Approximation Algorithm Perform K-Means clustering using

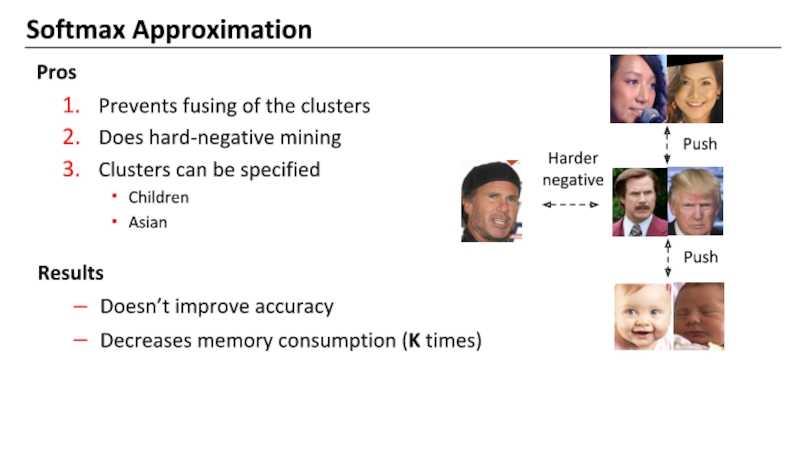

- 72. Softmax Approximation Pros Prevents fusing of the

- 73. Fighting errors on production

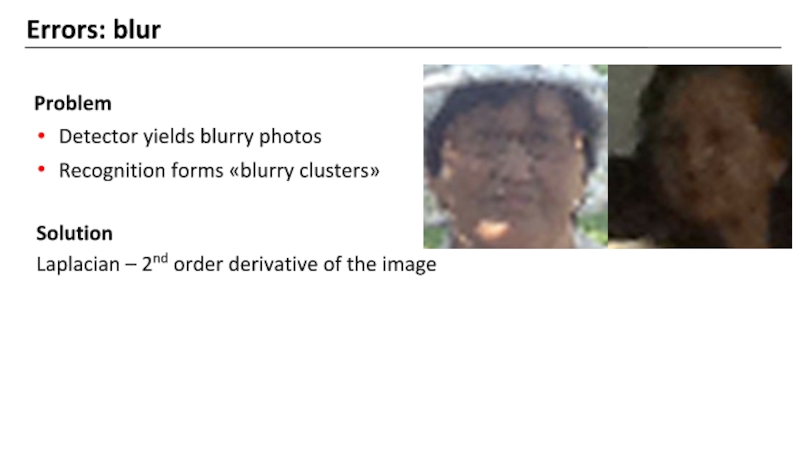

- 74. Errors: blur Problem Detector yields blurry

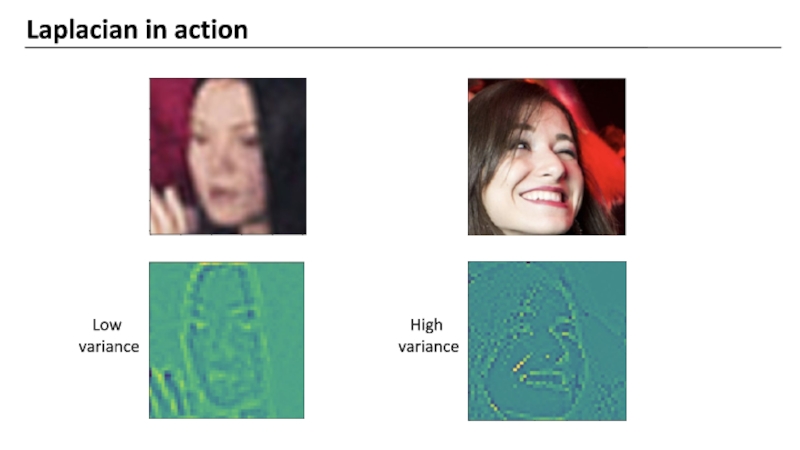

- 75. Laplacian in action Low variance High variance

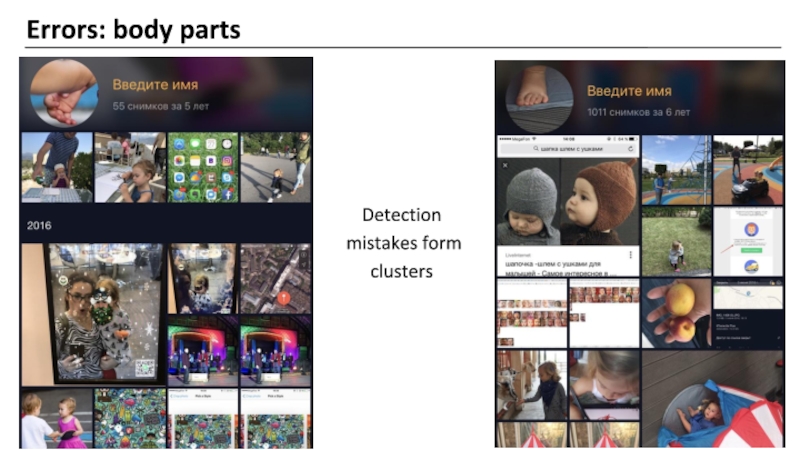

- 76. Errors: body parts Detection mistakes form clusters

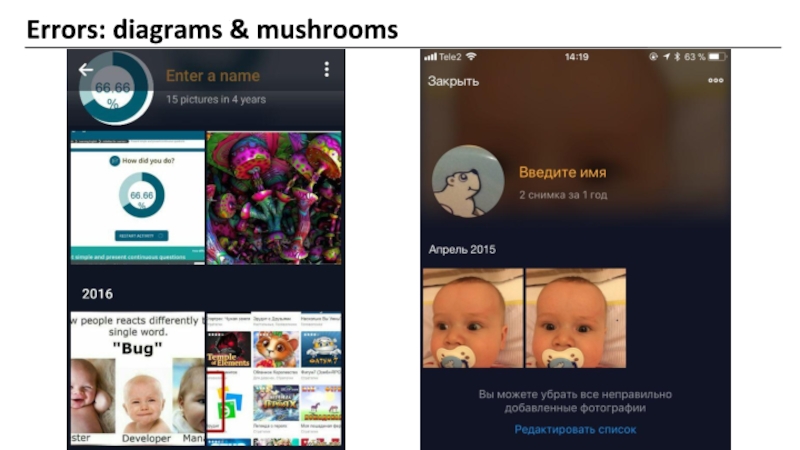

- 77. Errors: diagrams & mushrooms

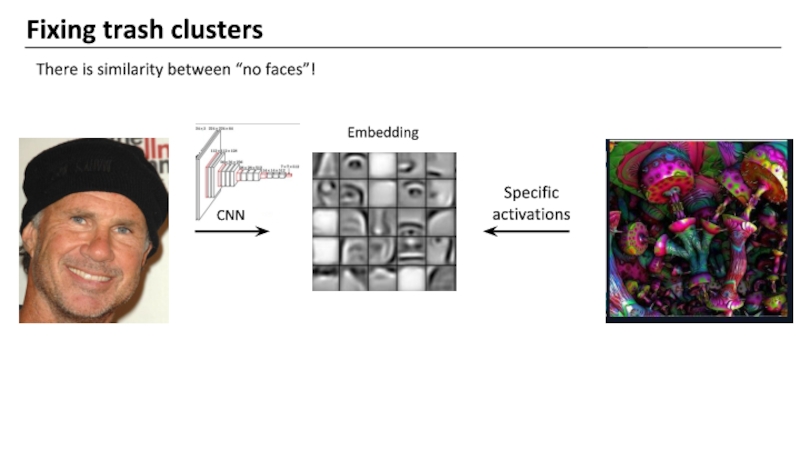

- 78. Fixing trash clusters There is similarity between “no faces”!

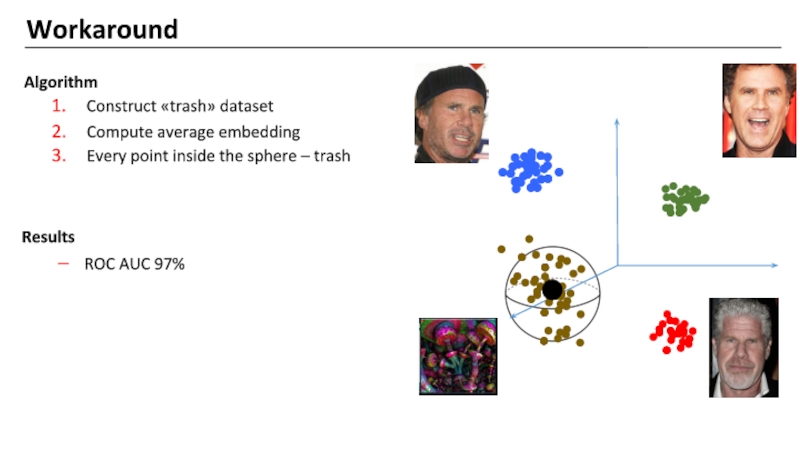

- 79. Workaround Algorithm Construct «trash» dataset Compute

- 80. Spectacular results

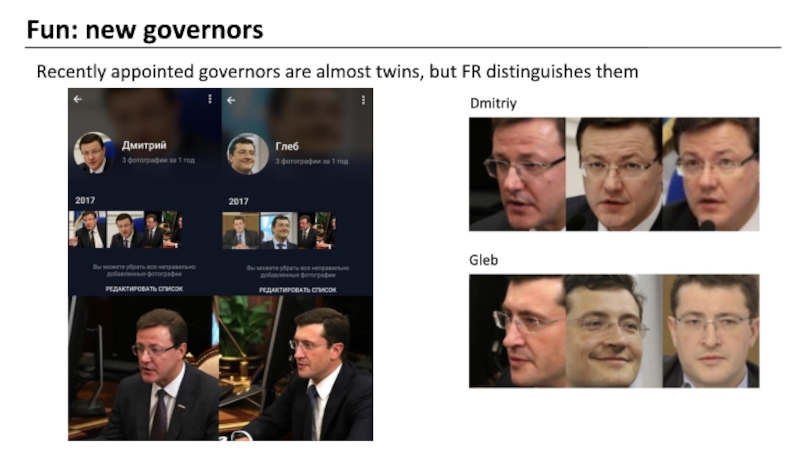

- 81. Fun: new governors Recently appointed governors are almost twins, but FR distinguishes them

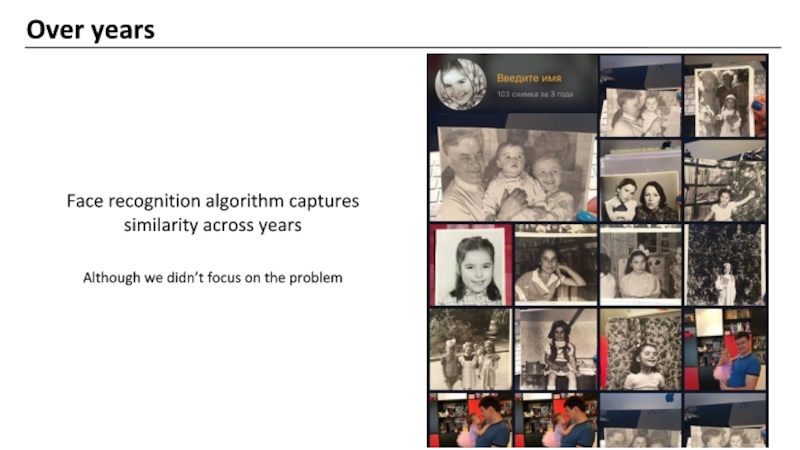

- 82. Over the years Face recognition algorithm captures similarity

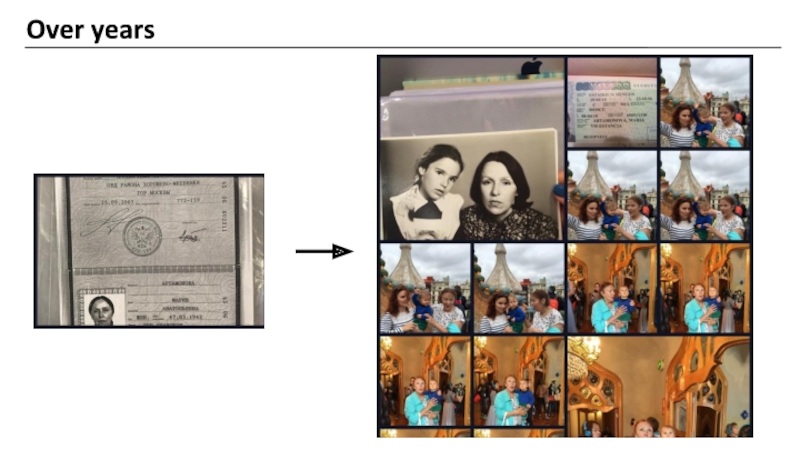

- 83. Over the years

- 84. Summary Use TensorRT to speed up

- 86. Auxiliary

- 87. Best avatar Problem How to pick an

- 88. Predicting awesomeness: how to approach Social networks

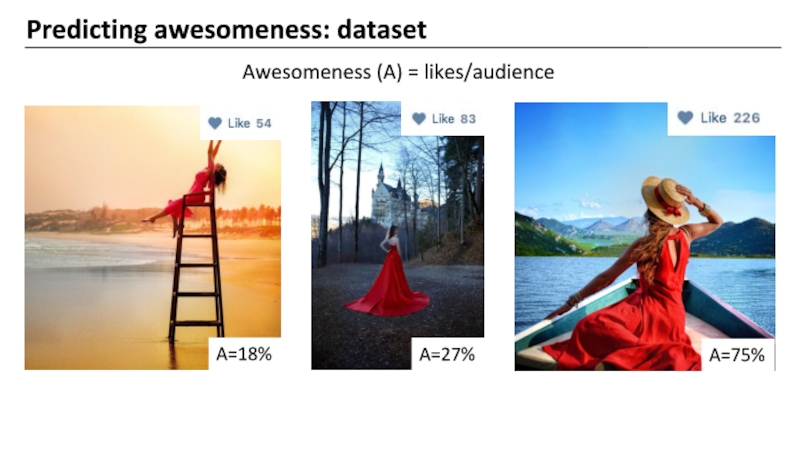

- 89. Predicting awesomeness: dataset Awesomeness (A) = likes/audience A=18% A=27% A=75%

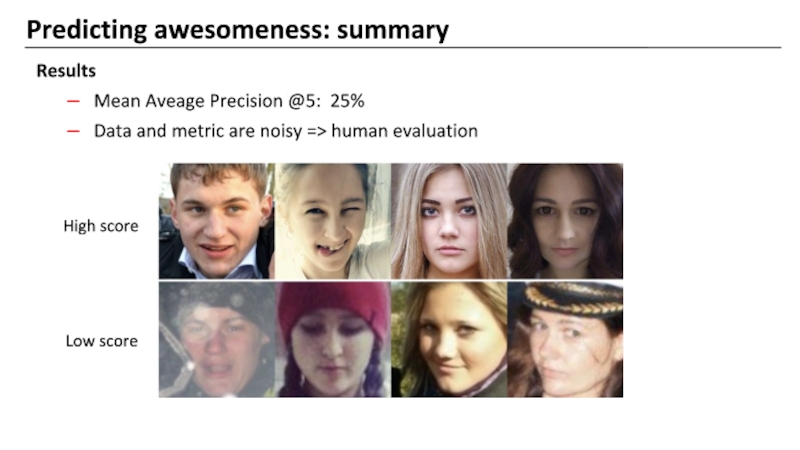

- 90. Results Mean Average Precision @5: 25% Data

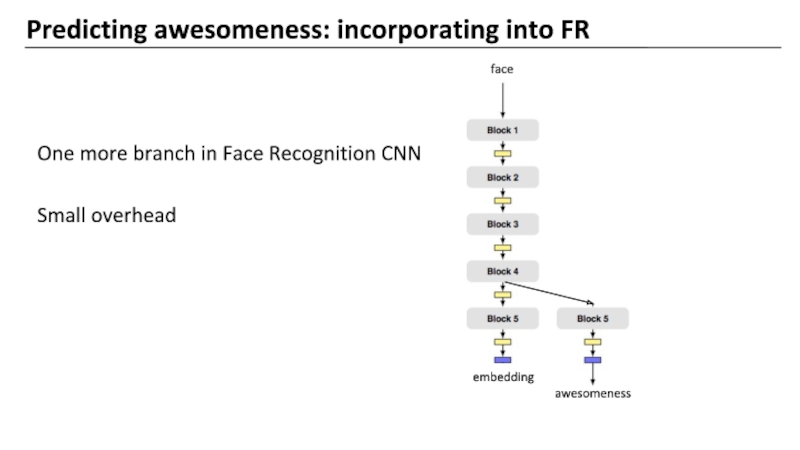

- 91. Predicting awesomeness: incorporating into FR One more

Slide 2: Face Recognition in Cloud@Mail.ru

Users upload photos to Cloud

Backend identifies

Slide 8: Auxiliary task: facial landmarks

Face alignment: rotation

Goal: make it easier for Face

Slide 9: Train Datasets

Wider

32k images

494k faces

Celeba

200k images, 10k persons

Landmarks, 40 binary attributes

Slide 13: Viola-Jones algorithm: inference

Stages

[Diagram: Stage 1 → Stage 2 → … → Stage N → Face; a window moves on to the next stage only on «Yes»]

Optimization

Features are grouped into stages

If a
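The cascade logic above fits in a few lines of Python. This is a minimal sketch, not the OpenCV implementation. It assumes each stage is a list of weak classifiers plus a stage threshold. The toy lambda classifiers are hypothetical stand-ins for real thresholded Haar features.

```python
from typing import Callable, List, Sequence, Tuple

# A weak classifier maps a window (here a flat list of values) to a vote;
# in Viola-Jones it would be a thresholded Haar feature (toy stand-ins below).
WeakClassifier = Callable[[Sequence[float]], float]
# A stage groups weak classifiers with the threshold their summed vote must reach.
Stage = Tuple[List[WeakClassifier], float]


def cascade_predict(window: Sequence[float], stages: List[Stage]) -> bool:
    """Return True only if the window passes every stage of the cascade."""
    for weak_classifiers, threshold in stages:
        score = sum(clf(window) for clf in weak_classifiers)
        if score < threshold:
            return False  # early rejection: later stages are never evaluated
    return True


# Toy cascade: stage 1 is cheap (one feature), stage 2 is stricter (two features).
stages: List[Stage] = [
    ([lambda w: 1.0 if w[0] > 0.5 else 0.0], 1.0),
    ([lambda w: 1.0 if w[1] > 0.5 else 0.0,
      lambda w: 1.0 if w[2] > 0.5 else 0.0], 2.0),
]

print(cascade_predict([0.9, 0.8, 0.7], stages))  # True: passes both stages
print(cascade_predict([0.1, 0.8, 0.7], stages))  # False: rejected at stage 1
```

Most windows in an image contain no face, so most of them are rejected by the first cheap stage. That early rejection is where the cascade gets its speed.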

Slide 15: Pre-trained network: extracting features

New school: Region-based Convolutional Networks

Faster RCNN, algorithm

Face ?

Region proposal network

RoI-pooling: extract corresponding tensor

Classifier: classes and the bounding box
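RoI pooling turns a region of arbitrary size into a fixed-size tensor that the classifier can consume. Below is a minimal max-pooling sketch. It assumes a single-channel feature map stored as nested lists and an RoI already projected to feature-map coordinates. It also assumes Caffe-style floor/ceil bin boundaries. The name `roi_max_pool` is hypothetical.

```python
import math
from typing import List, Tuple


def roi_max_pool(feature: List[List[float]], roi: Tuple[int, int, int, int],
                 out_h: int, out_w: int) -> List[List[float]]:
    """Max-pool roi = (x0, y0, x1, y1), with x1/y1 exclusive, into out_h x out_w bins."""
    x0, y0, x1, y1 = roi
    bin_h = (y1 - y0) / out_h
    bin_w = (x1 - x0) / out_w
    pooled = []
    for i in range(out_h):
        # Floor the bin start and ceil the bin end (clamped to the RoI) so no bin is empty.
        rows = range(y0 + math.floor(i * bin_h), min(y1, y0 + math.ceil((i + 1) * bin_h)))
        out_row = []
        for j in range(out_w):
            cols = range(x0 + math.floor(j * bin_w), min(x1, x0 + math.ceil((j + 1) * bin_w)))
            out_row.append(max(feature[y][x] for y in rows for x in cols))
        pooled.append(out_row)
    return pooled


# 4x4 feature map holding the value 4*y + x at row y, column x.
fmap = [[4 * y + x for x in range(4)] for y in range(4)]
print(roi_max_pool(fmap, (0, 0, 4, 4), 2, 2))  # [[5, 7], [13, 15]]
```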

Slide 18: Alternative: MTCNN

Cascade of 3 CNN

Resize to different scales

Proposal -> candidates +

Refine -> calibration

Output -> b-boxes + landmarks
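The "resize to different scales" step lets a fixed-size first-stage network find faces of many sizes. The sketch below shows one way to compute the pyramid scales. It assumes the defaults used by common open-source MTCNN ports: minimum face size 20 px, scale factor 0.709, and a 12 px first-stage input. The presentation does not state its own values.

```python
from typing import List


def pyramid_scales(height: int, width: int, min_face: int = 20,
                   factor: float = 0.709, net_input: int = 12) -> List[float]:
    """Resize factors so the first-stage net can see faces of min_face px and larger.

    Defaults are assumed from common open-source MTCNN ports, not taken from the slides.
    """
    scale = net_input / min_face           # a min_face-sized face becomes net_input px
    min_side = min(height, width) * scale  # shorter side of the first pyramid level
    scales = []
    while min_side >= net_input:           # stop once the level is smaller than the net input
        scales.append(scale)
        scale *= factor
        min_side *= factor
    return scales


for s in pyramid_scales(120, 160):
    print(f"scale {s:.3f} -> {round(120 * s)}x{round(160 * s)}")
```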

Slide 21: What is TensorRT

NVIDIA TensorRT is a high-performance deep learning inference optimizer

Features

Improves

FP16 & INT8 support

Effective at small batch-sizes
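To show what INT8 support means arithmetically, here is a minimal symmetric per-tensor quantizer. It uses plain max calibration as a simplifying assumption. TensorRT's own calibrators (such as its entropy calibrator) pick the scale differently, so treat this only as an illustration of the number format.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor INT8 quantization; max calibration is an assumption here."""
    amax = max(abs(v) for v in values)
    scale = amax / 127 if amax > 0 else 1.0  # float value of one integer step
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map INT8 codes back to approximate float values."""
    return [x * scale for x in q]


acts = [-1.0, -0.25, 0.0, 0.3, 1.0]
q, scale = quantize_int8(acts)
print(q)                     # integers in [-127, 127]
print(dequantize(q, scale))  # each value within scale / 2 of the original
```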

Slide 24: Batch processing

Problem

Image size is fixed, but

MTCNN works at different scales

Solution

Pyramid on

Slide 26: TensorRT: layers

Problem

No PReLU layer => default pre-trained model can’t be used

Retrained

-20%
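The slides report retraining the model because TensorRT lacked a PReLU layer. For reference, PReLU can also be rewritten with ReLU and elementwise operations, since PReLU(x) = ReLU(x) − a·ReLU(−x). This is an alternative, not what the presentation did. It assumes the target engine offers ReLU, scaling and subtraction. The sketch only checks the identity on scalars.

```python
def relu(x: float) -> float:
    return max(0.0, x)


def prelu(x: float, a: float) -> float:
    """PReLU with slope a for negative inputs (a is learned per channel in practice)."""
    return x if x > 0 else a * x


def prelu_from_relu(x: float, a: float) -> float:
    """The same function built only from ReLU, scaling and subtraction (alternative, not the slides' fix)."""
    return relu(x) - a * relu(-x)


for x in (-2.0, -0.5, 0.0, 1.5):
    print(x, prelu(x, 0.25), prelu_from_relu(x, 0.25))
```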

Slide 27: Face detection: inference

Target: < 10 ms

Result: 8.8 ms

Ingredients

MTCNN

Batch processing

TensorRT

Slide 29: Face recognition task

Goal – to compare faces

How? Learn a metric

To enable
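Once the network maps faces to embeddings, comparing two faces reduces to comparing two vectors. Below is a minimal verification sketch. It assumes cosine similarity and a hypothetical threshold of 0.5. A real threshold would typically be tuned on validation pairs.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def same_person(a: Sequence[float], b: Sequence[float], threshold: float = 0.5) -> bool:
    """Hypothetical decision rule: same identity if the embeddings are similar enough."""
    return cosine_similarity(a, b) >= threshold


print(same_person([0.9, 0.1, 0.2], [0.8, 0.2, 0.1]))   # True
print(same_person([0.9, 0.1, 0.2], [0.1, 0.9, -0.3]))  # False
```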

Slide 30: Training set: MSCeleb

Top 100k celebrities

10 million images, 100 per person

Noisy: constructed

Slide 31: Small test dataset: LFW

Labeled Faces in the Wild

13k images from

1680 persons have >= 2 photos

Slide 32: Large test dataset: Megaface

Identification under up to 1 million “distractors”

530 people
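Megaface-style identification asks whether the true match ranks first among all gallery images, distractors included. Below is a rank-1 sketch. It assumes Euclidean distance between embeddings. The toy vectors and the function names are illustrative only.

```python
import math
from typing import List, Sequence


def rank1_hit(probe: Sequence[float], gallery: List[Sequence[float]], true_index: int) -> bool:
    """True if the closest gallery embedding is the probe's true match."""
    nearest = min(range(len(gallery)), key=lambda i: math.dist(probe, gallery[i]))
    return nearest == true_index


def rank1_rate(probes: List[Sequence[float]], true_indices: List[int],
               gallery: List[Sequence[float]]) -> float:
    hits = sum(rank1_hit(p, gallery, t) for p, t in zip(probes, true_indices))
    return hits / len(probes)


# Gallery: two enrolled identities (indices 0 and 1) followed by distractors.
gallery = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7], [-1.0, 0.0]]
probes = [[0.95, 0.1], [0.6, 0.75]]
print(rank1_rate(probes, [0, 1], gallery))  # 0.5: the second probe is closer to a distractor
```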

Slide 36: Softmax

Learned features are only separable, not discriminative

The resulting features are not

Slide 38: Triplet loss

Features

Identity -> single point

Enforces a margin between persons

positive + α < negative
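Take an anchor a, a positive p and a negative n. The triplet loss penalizes any triplet where the negative is not at least α farther from the anchor than the positive: max(0, ‖a − p‖² − ‖a − n‖² + α). The sketch below uses squared Euclidean distance and α = 0.2. That α is the value from the FaceNet paper and is assumed here, since the slides give no value.

```python
from typing import Sequence


def sq_dist(u: Sequence[float], v: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(u, v))


def triplet_loss(anchor: Sequence[float], positive: Sequence[float],
                 negative: Sequence[float], alpha: float = 0.2) -> float:
    """Zero once the negative is at least alpha farther (squared L2) than the positive."""
    return max(0.0, sq_dist(anchor, positive) - sq_dist(anchor, negative) + alpha)


a, p = [0.0, 0.0], [0.1, 0.0]
print(triplet_loss(a, p, [1.0, 0.0]))  # 0.0: easy negative, already beyond the margin
print(triplet_loss(a, p, [0.2, 0.0]))  # about 0.17: negative inside the margin
```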

Slide 39: Choosing triplets

Crucial problem

How to choose triplets? Useful triplets = hardest

Solution

Hard-mining within a large mini-batch (>1000)

Slide 43: Choosing triplets: trap

Selecting the hardest negatives may lead to collapse early
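Slide 44 gives the semi-hard condition: positive < negative < positive + α. A mining step can therefore skip the hardest negatives and pick the closest negative that is still farther than the positive. Below is a sketch for a single anchor-positive pair. Two choices are assumptions of this example: returning `None` when no semi-hard negative exists, and α = 0.2.

```python
from typing import List, Optional, Sequence


def sq_dist(u: Sequence[float], v: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(u, v))


def pick_semi_hard(anchor: Sequence[float], positive: Sequence[float],
                   negatives: List[Sequence[float]], alpha: float = 0.2) -> Optional[int]:
    """Index of the closest negative with d(a,p) < d(a,n) < d(a,p) + alpha, else None."""
    d_ap = sq_dist(anchor, positive)
    candidates = [(sq_dist(anchor, n), i) for i, n in enumerate(negatives)]
    semi_hard = [(d, i) for d, i in candidates if d_ap < d < d_ap + alpha]
    return min(semi_hard)[1] if semi_hard else None  # None: skip this pair (assumption)


anchor, positive = [0.0, 0.0], [0.3, 0.0]          # d(a, p) = 0.09
negatives = [[0.1, 0.0], [0.4, 0.0], [1.0, 0.0]]   # d(a, n) = 0.01, 0.16, 1.0
print(pick_semi_hard(anchor, positive, negatives))  # 1: the hardest (index 0) is skipped
```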

Slide 45: Triplet loss: summary

Overview

Requires large batches, margin tuning

Slow convergence

Opensource Code

Openface (Torch)

suboptimal implementation

Facenet,

Slide 47: Center loss: structure

Without classification loss – collapses

[Diagram: Softmax Loss and Center Loss branches]

Final loss = Softmax loss + λ · Center loss
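Below is a minimal sketch of the center loss term and the center update from the Center Loss paper (Wen et al., ECCV 2016). The loss is L_C = ½ Σᵢ ‖xᵢ − c_{yᵢ}‖², and each center moves toward the features of its class in the current batch. Several details are assumptions of this example: the update rate `alpha`, the dict-of-centers layout and the function names. The softmax term is passed in as a plain number. `lam` plays the role of λ from slides 48–50.

```python
from typing import Dict, List, Sequence


def center_loss(features: List[Sequence[float]], labels: List[int],
                centers: Dict[int, List[float]]) -> float:
    """L_C = 1/2 * sum_i ||x_i - c_{y_i}||^2 over the batch."""
    return 0.5 * sum(
        sum((f - c) ** 2 for f, c in zip(x, centers[y]))
        for x, y in zip(features, labels)
    )


def update_centers(features: List[Sequence[float]], labels: List[int],
                   centers: Dict[int, List[float]], alpha: float = 0.5) -> None:
    """Move each class center toward its batch features, in place (alpha is assumed)."""
    for j in set(labels):
        members = [x for x, y in zip(features, labels) if y == j]
        delta = [sum(c - x[k] for x in members) / (1 + len(members))
                 for k, c in enumerate(centers[j])]
        centers[j] = [c - alpha * d for c, d in zip(centers[j], delta)]


def total_loss(softmax_loss: float, features: List[Sequence[float]], labels: List[int],
               centers: Dict[int, List[float]], lam: float) -> float:
    """Final loss = Softmax loss + lambda * Center loss."""
    return softmax_loss + lam * center_loss(features, labels, centers)


feats, labels = [[1.0, 0.0], [3.0, 0.0]], [0, 0]
centers = {0: [0.0, 0.0]}
print(center_loss(feats, labels, centers))  # 5.0
update_centers(feats, labels, centers)
print(centers[0])                           # roughly [0.667, 0.0]
print(center_loss(feats, labels, centers))  # smaller than before
```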

Slide 51: Center loss: summary

Overview

Intra-class compactness and inter-class separability

Good performance at several other

Opensource Code

Caffe (original, Megaface - 65%)

Slide 53: Tricks: alignment

Rotation

Kabsch algorithm: the optimal rotation matrix that minimizes the RMSD
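In general the Kabsch algorithm uses an SVD of the covariance matrix. For 2D landmarks it reduces to a closed-form angle: after centering both point sets, the best rotation maximizes Σ qᵢ · R(θ)pᵢ = A cos θ + B sin θ, so θ = atan2(B, A). The landmark coordinates and the target template below are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def _centered(points: List[Point]) -> List[Point]:
    cx = sum(x for x, _ in points) / len(points)
    cy = sum(y for _, y in points) / len(points)
    return [(x - cx, y - cy) for x, y in points]


def kabsch_angle_2d(src: List[Point], dst: List[Point]) -> float:
    """Angle (radians) of the rotation that best maps src onto dst in the least-squares sense."""
    p, q = _centered(src), _centered(dst)  # centering removes the translation
    # Maximize sum_i q_i . R(theta) p_i = A cos(theta) + B sin(theta)  =>  theta = atan2(B, A)
    a = sum(px * qx + py * qy for (px, py), (qx, qy) in zip(p, q))
    b = sum(px * qy - py * qx for (px, py), (qx, qy) in zip(p, q))
    return math.atan2(b, a)


# Hypothetical landmarks (two eyes, nose) and the same shape rotated by 30 degrees and shifted.
src = [(30.0, 40.0), (70.0, 40.0), (50.0, 65.0)]
t = math.radians(30)
dst = [(x * math.cos(t) - y * math.sin(t) + 5, x * math.sin(t) + y * math.cos(t) - 3)
       for x, y in src]
print(math.degrees(kabsch_angle_2d(src, dst)))  # about 30.0
```

For alignment, the detected landmarks would be the source and a fixed reference template the target. Rotating the crop by −θ then brings the face upright.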

Slide 57: Angular softmax: summary

Overview

Works only on small datasets

Slight modification of the loss

Various modifications of the loss function
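A-Softmax (SphereFace) replaces the target-class logit ‖x‖·cos θ with ‖x‖·ψ(θ). Here ψ(θ) = (−1)ᵏ cos(mθ) − 2k for θ ∈ [kπ/m, (k+1)π/m], which makes the target angle harder to satisfy as m grows. The sketch computes the logits for one sample. It assumes the class weights are already normalized, so every other logit is ‖x‖·cos θⱼ. It also assumes m = 4, the SphereFace default.

```python
import math
from typing import List


def a_softmax_psi(theta: float, m: int) -> float:
    """SphereFace psi(theta) = (-1)^k cos(m*theta) - 2k for theta in [k*pi/m, (k+1)*pi/m]."""
    k = min(int(theta * m / math.pi), m - 1)
    return (-1) ** k * math.cos(m * theta) - 2 * k


def a_softmax_logits(x_norm: float, cos_thetas: List[float], target: int,
                     m: int = 4) -> List[float]:
    """Logits for one sample; cos_thetas[j] is the cosine between x and the normalized W_j."""
    logits = []
    for j, c in enumerate(cos_thetas):
        c = max(-1.0, min(1.0, c))  # guard acos against rounding outside [-1, 1]
        if j == target:
            logits.append(x_norm * a_softmax_psi(math.acos(c), m))
        else:
            logits.append(x_norm * c)
    return logits


print(a_softmax_logits(10.0, [0.9, 0.2, -0.1], target=0))
```

With m = 1 the function reduces to the plain normalized-weight softmax, since ψ(θ) = cos θ.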

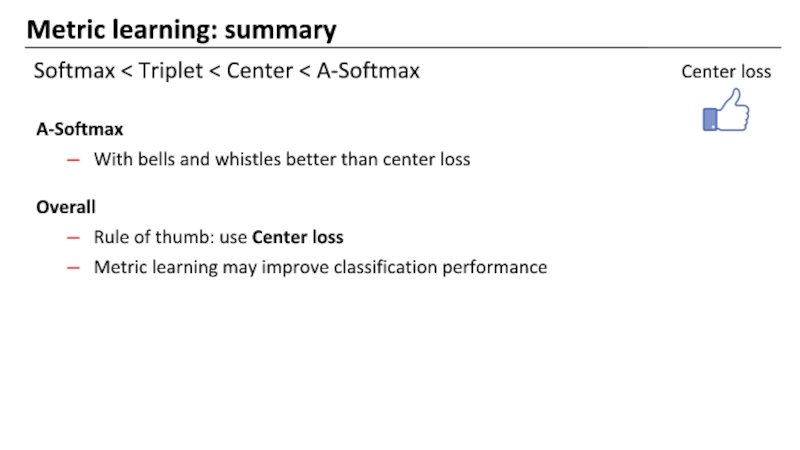

Slide 58: Metric learning: summary

Softmax < Triplet < Center < A-Softmax

A-Softmax

With bells and

Overall

Rule of thumb: use Center loss

Metric learning may improve classification performance

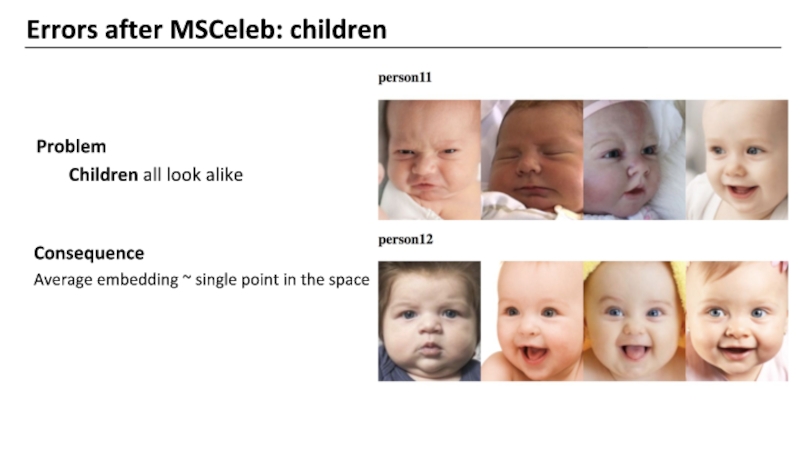

Slide 60: Errors after MSCeleb: children

Problem

Children all look alike

Consequence

Average embedding ~ single point

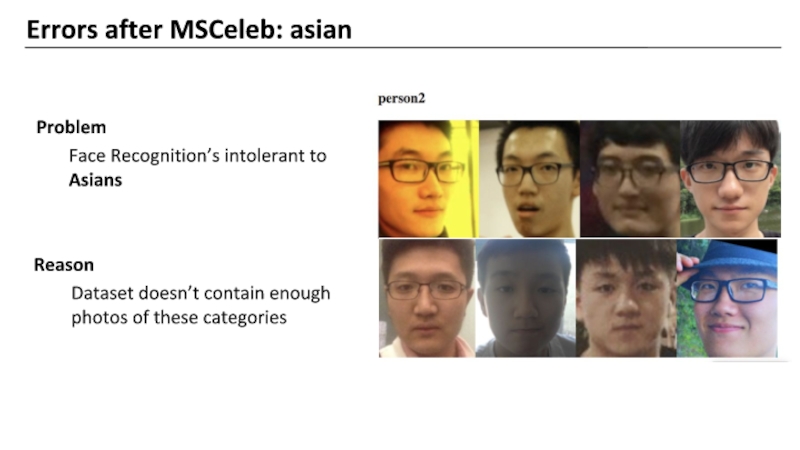

Slide 61: Errors after MSCeleb: Asian

Problem

Face recognition performs poorly on Asian faces

Reason

Dataset doesn’t contain enough

Slide 62: How to fix these errors?

It’s all about data, we need

Natural choice: social network avatars

Slide 63: A way to construct dataset

Cleaning algorithm

Face detection

Face recognition -> embeddings

Hierarchical clustering

Pick the largest cluster as a person

Iterate after each model improvement
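The "hierarchical clustering, pick the largest cluster" step can be sketched as follows. The slide does not name a linkage, so single linkage cut at a distance threshold is an assumption here. With single linkage, clusters are just the connected components of the "closer than threshold" graph, which a small union-find computes. The threshold value and the toy embeddings are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def largest_cluster(embeddings: List[Sequence[float]], threshold: float) -> List[int]:
    """Single-linkage clustering cut at `threshold` (assumed); indices of the biggest cluster."""
    parent = list(range(len(embeddings)))

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    # Merge every pair closer than the threshold: single linkage = connected components.
    for i in range(len(embeddings)):
        for j in range(i + 1, len(embeddings)):
            if math.dist(embeddings[i], embeddings[j]) < threshold:
                parent[find(i)] = find(j)

    clusters: Dict[int, List[int]] = {}
    for i in range(len(embeddings)):
        clusters.setdefault(find(i), []).append(i)
    return max(clusters.values(), key=len)


# Photos found for one identity: three consistent faces plus two wrong ones.
photos = [[0.0, 0.0], [0.1, 0.0], [0.2, 0.1], [5.0, 5.0], [9.0, 0.0]]
print(largest_cluster(photos, threshold=0.5))  # [0, 1, 2]
```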

Slide 64: MSCeleb dataset’s errors

MSCeleb is constructed by leveraging search engines

Joe Eszterhas and

Slide 67: MSCeleb dataset’s errors

Dataset has been shrunk from 100k to 46k celebrities

Random

search

Slide 68: Results on new datasets

Datasets

Train:

MSCeleb (46k)

VK-train (200k)

Test

MegaVK

Sets for children and Asians

Slide 69: How to handle big dataset

It seems we can add more data

Problems

Memory consumption (Softmax)

Computational costs

A lot of noise in gradients

Slide 71: Softmax Approximation

Algorithm

Perform K-Means clustering using current FR model

Two Softmax heads:

Predicts the cluster

Predicts the class within the true cluster
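The two heads combine into one loss: −log p(cluster | x) − log p(class | cluster, x). Below is a sketch of that loss. It assumes each identity belongs to exactly one cluster, for example via K-Means over per-identity mean embeddings from the current model. It also assumes the second softmax runs only over the identities in the true cluster. The function names are hypothetical.

```python
import math
from typing import List


def log_softmax_at(logits: List[float], index: int) -> float:
    """log(softmax(logits)[index]), computed stably."""
    mx = max(logits)
    return logits[index] - (mx + math.log(sum(math.exp(v - mx) for v in logits)))


def two_head_loss(cluster_logits: List[float], class_logits_in_cluster: List[float],
                  true_cluster: int, true_class_pos: int) -> float:
    """-log p(cluster | x) - log p(class | cluster, x).

    Assumption: each identity belongs to exactly one cluster, class_logits_in_cluster
    covers only identities of the true cluster, and true_class_pos is the
    identity's position inside that cluster.
    """
    return -(log_softmax_at(cluster_logits, true_cluster)
             + log_softmax_at(class_logits_in_cluster, true_class_pos))


# Uninformative logits over 2 clusters and 3 identities per cluster:
# the loss equals -log(1/2 * 1/3) = log 6.
print(two_head_loss([0.0, 0.0], [0.0, 0.0, 0.0], 0, 1))
```

The second softmax only competes against identities the current model already places in the same cluster. That is one plausible reading of the "does hard-negative mining" point on slide 72.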

Slide 72: Softmax Approximation

Pros

Prevents fusing of the clusters

Does hard-negative mining

Clusters can be specified

Children

Asian

Results

Doesn’t

Decreases memory consumption (K times)

Slide 74: Errors: blur

Problem

Detector yields blurry photos

Recognition forms «blurry clusters»

Solution

Laplacian – 2nd order
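Slide 75 shows low vs. high variance of the Laplacian response. Sharp crops have strong edges and so a high variance, while blurry crops have a low one. Below is a minimal sketch using the 3×3 kernel [[0,1,0],[1,−4,1],[0,1,0]] on a grayscale image stored as nested lists. The common OpenCV recipe `cv2.Laplacian(gray, cv2.CV_64F).var()` computes essentially the same quantity, apart from border handling. The threshold of 100 is hypothetical and would need tuning on real detector crops.

```python
from typing import List


def laplacian_variance(gray: List[List[float]]) -> float:
    """Variance of the 3x3 Laplacian [[0,1,0],[1,-4,1],[0,1,0]] over interior pixels."""
    responses = [
        gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1] + gray[y][x + 1] - 4 * gray[y][x]
        for y in range(1, len(gray) - 1)
        for x in range(1, len(gray[0]) - 1)
    ]
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


def is_blurry(gray: List[List[float]], threshold: float = 100.0) -> bool:
    """Hypothetical threshold; it would need tuning on real detector crops."""
    return laplacian_variance(gray) < threshold


flat = [[128.0] * 6 for _ in range(6)]                                          # no edges at all
sharp = [[255.0 if (x + y) % 2 else 0.0 for x in range(6)] for y in range(6)]  # strong edges
print(is_blurry(flat), is_blurry(sharp))  # True False
```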

Slide 79: Workaround

Algorithm

Construct «trash» dataset

Compute average embedding

Every point inside the sphere –

Results

ROC AUC 97%
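The workaround can be sketched as: average the embeddings of a «trash» dataset, then flag any detection whose embedding lands inside a sphere around that average. The slide's rule for choosing the sphere is cut off, so the fixed `radius` below is an assumption. It would presumably be tuned on held-out data. The embeddings are toy values.

```python
import math
from typing import List, Sequence


def mean_embedding(embeddings: List[Sequence[float]]) -> List[float]:
    """Average embedding of the «trash» (no-face) examples."""
    n = len(embeddings)
    return [sum(column) / n for column in zip(*embeddings)]


def is_trash(embedding: Sequence[float], trash_center: Sequence[float], radius: float) -> bool:
    """Flag a detection whose embedding falls inside the sphere (radius is an assumption)."""
    return math.dist(embedding, trash_center) <= radius


trash = [[1.0, 1.0], [1.2, 0.8], [0.8, 1.2]]    # toy embeddings of non-face detections
center = mean_embedding(trash)                  # about [1.0, 1.0]
print(is_trash([1.1, 0.9], center, radius=0.5))   # True
print(is_trash([-1.0, 0.2], center, radius=0.5))  # False
```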

Slide 82: Over the years

Face recognition algorithm captures similarity across years

Although we didn’t

Slide 84: Summary

Use TensorRT to speed up inference

Metric learning: use Center loss by

Clean your data thoroughly

Understanding CNNs helps fight errors

Slide 87: Best avatar

Problem

How to pick an avatar for a person?

Solution

Train model

Slide 90: Results

Mean Average Precision @5: 25%

Data and metric are noisy => human
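For reference, below is one common definition of MAP@5. AP@k adds up precision@i at every rank i ≤ k that holds a relevant item and divides by min(k, number of relevant items). MAP@k is the mean over queries. Definitions of AP@k vary, and the slides do not say which one was used or how "relevant" photos were defined. The photo IDs below are hypothetical.

```python
from typing import List, Sequence, Set


def average_precision_at_k(ranked: Sequence[str], relevant: Set[str], k: int = 5) -> float:
    """AP@k: sum of precision@i at each relevant rank i <= k, divided by min(k, |relevant|)."""
    hits, score = 0, 0.0
    for i, item in enumerate(ranked[:k], start=1):
        if item in relevant:
            hits += 1
            score += hits / i
    denom = min(k, len(relevant))
    return score / denom if denom else 0.0


def map_at_k(rankings: List[Sequence[str]], relevants: List[Set[str]], k: int = 5) -> float:
    """Mean of AP@k over queries (here: one query per person)."""
    return sum(average_precision_at_k(r, rel, k) for r, rel in zip(rankings, relevants)) / len(rankings)


# Hypothetical photo IDs ranked by predicted awesomeness vs. the photos users liked most.
print(map_at_k([["p1", "p2", "p3", "p4", "p5"]], [{"p1", "p3"}]))  # (1/1 + 2/3) / 2, about 0.833
```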

Predicting awesomeness: summary