
Managing Growth in Production Hadoop Deployments (presentation)

Contents

- 1. Managing growth in Production Hadoop Deployments

- 2. ALTISCALE : INFRASTRUCTURE NERDS Soam Acharya -

- 3. SO, YOU’VE PUT TOGETHER YOUR FIRST HADOOP DEPLOYMENT It’s now running production ETLs

- 4. CONGRATULATIONS!

- 5. BUT THEN ... Your data scientists get on the cluster and start building models

- 6. BUT THEN ... Your data scientists get

- 7. BUT THEN ... Your data scientists get

- 8. BUT THEN ... Your data scientists get

- 9. SOON, YOUR CLUSTER ...

- 10. AND YOU …

- 11. THE “SUCCESS DISASTER” SCENARIO Initial success Many

- 12. WHY DO CLUSTERS FAIL? Failure categories: Too much data Too many jobs Too many users

- 13. HOW TO EXTRICATE YOURSELF? Short term strategy: Get

- 14. LONGER TERM STRATEGY Can’t cover every scenario

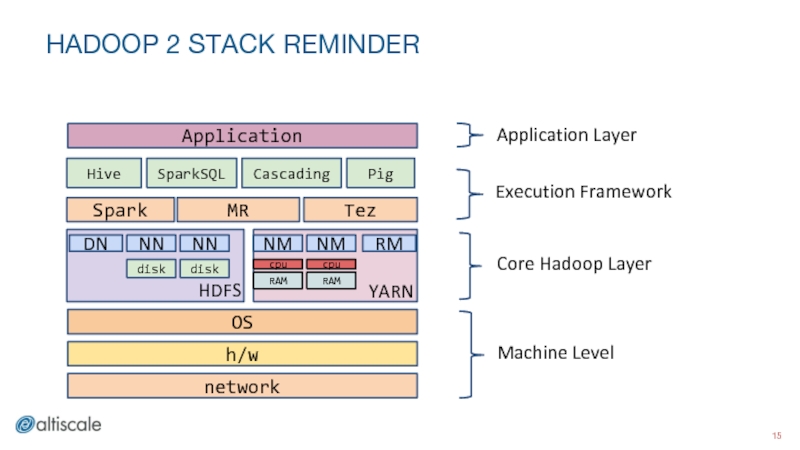

- 15. HADOOP 2 STACK REMINDER

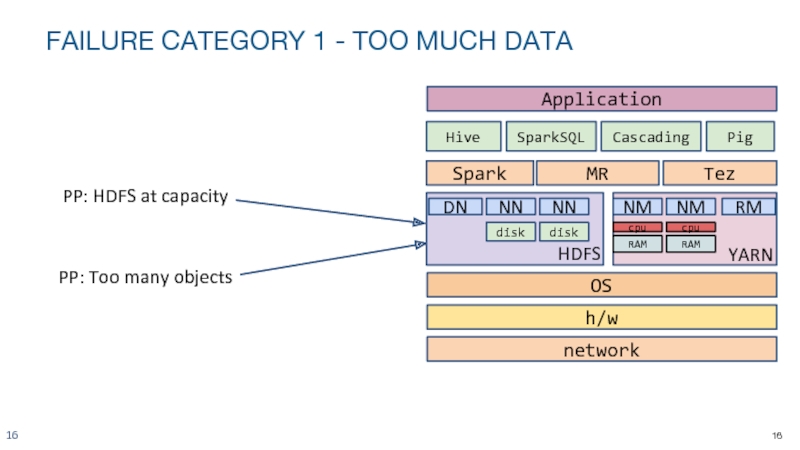

- 16. FAILURE CATEGORY 1 - TOO MUCH DATA PP: HDFS at capacity PP: Too many objects

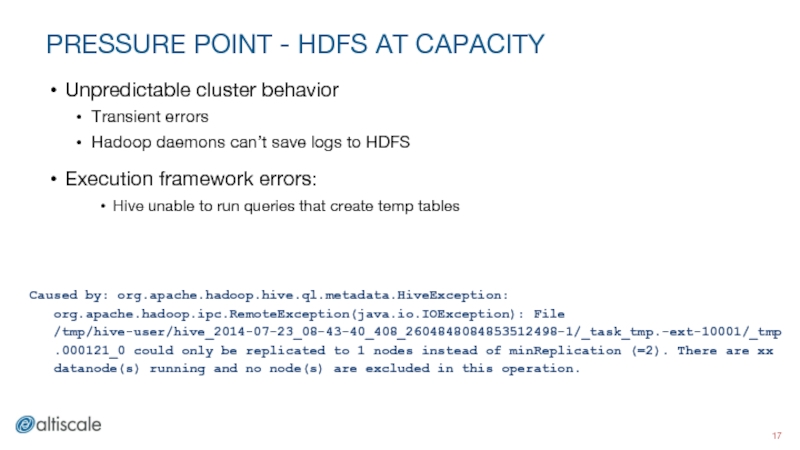

- 17. PRESSURE POINT - HDFS AT CAPACITY Unpredictable

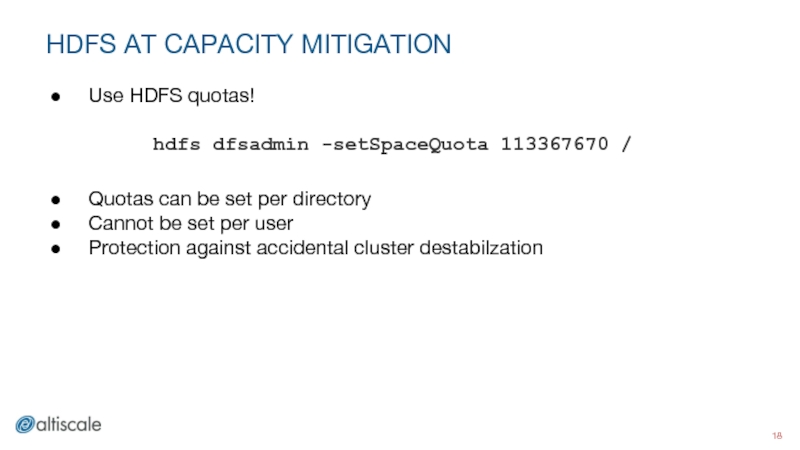

- 18. HDFS AT CAPACITY MITIGATION Use HDFS quotas!

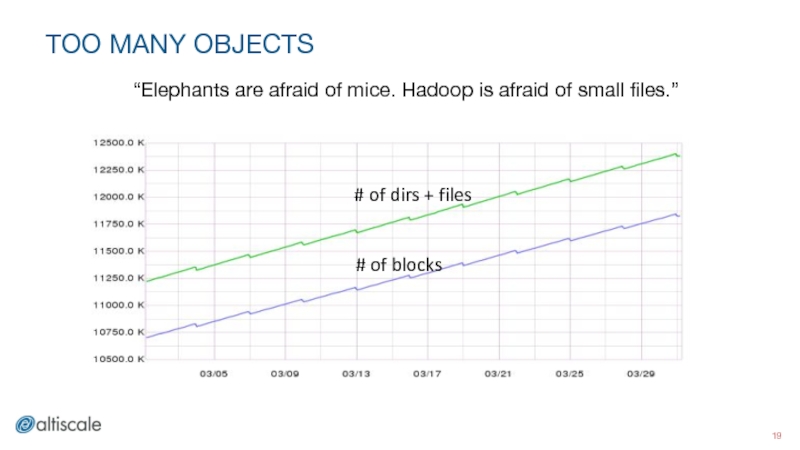

- 19. TOO MANY OBJECTS “Elephants are afraid of

- 20. TOO MANY OBJECTS Memory pressure: Namenode

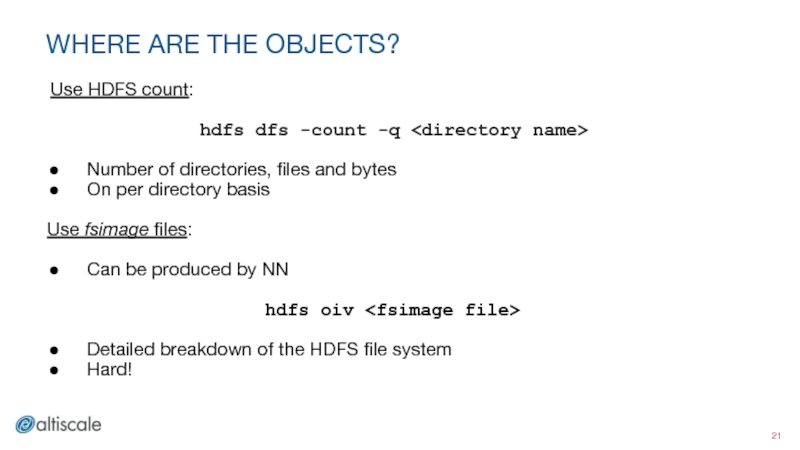

- 21. WHERE ARE THE OBJECTS? Use HDFS count:

- 22. TOO MANY OBJECTS - MITIGATION Short term:

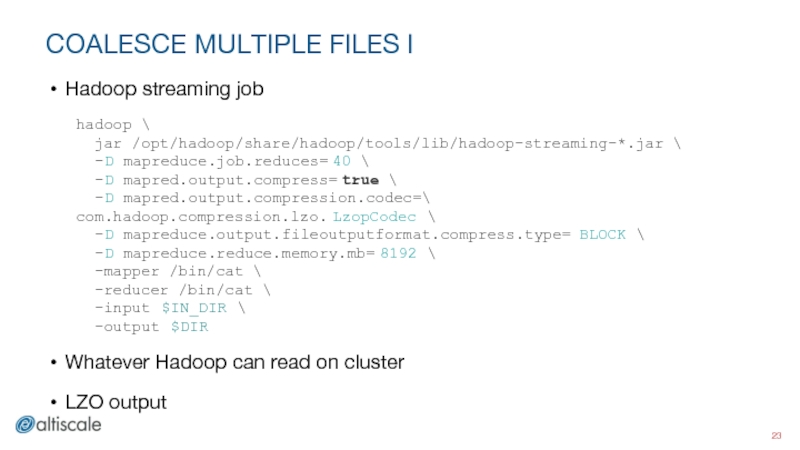

- 23. COALESCE MULTIPLE FILES I Hadoop streaming job

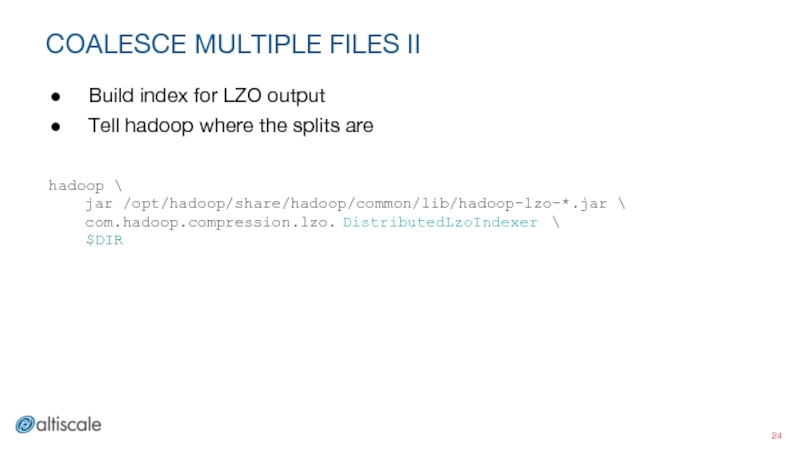

- 24. COALESCE MULTIPLE FILES II Build index for

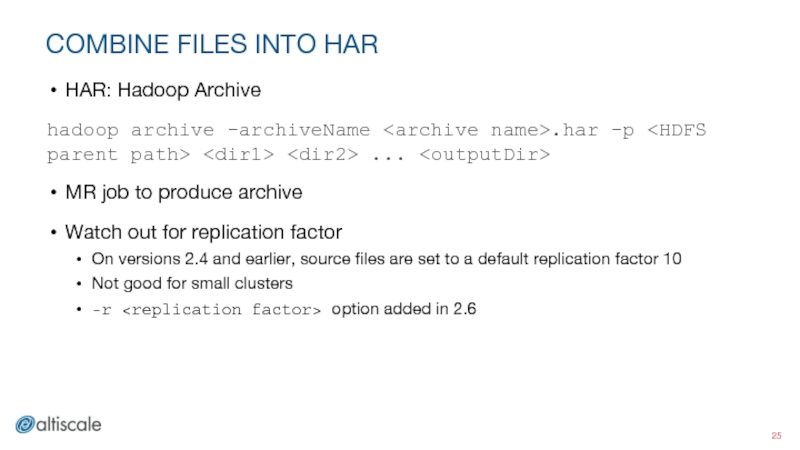

- 25. COMBINE FILES INTO HAR HAR: Hadoop Archive

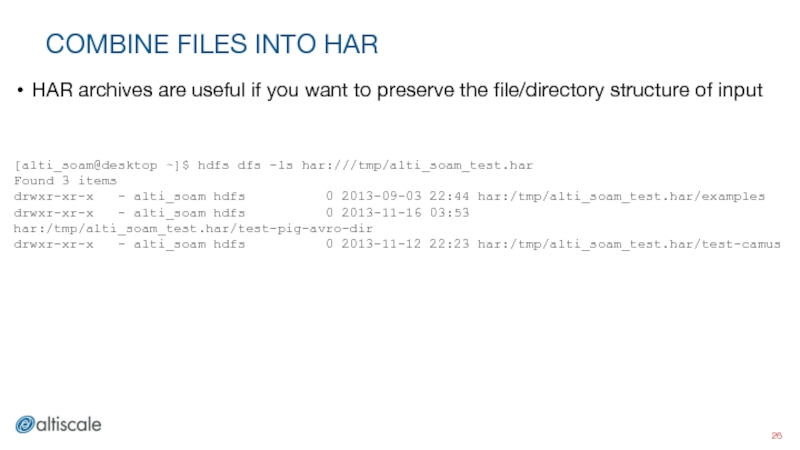

- 26. COMBINE FILES INTO HAR HAR archives are

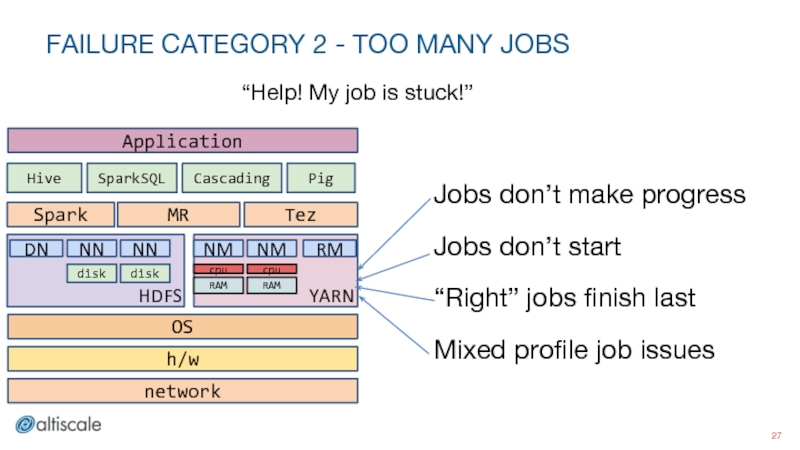

- 27. FAILURE CATEGORY 2 - TOO MANY JOBS

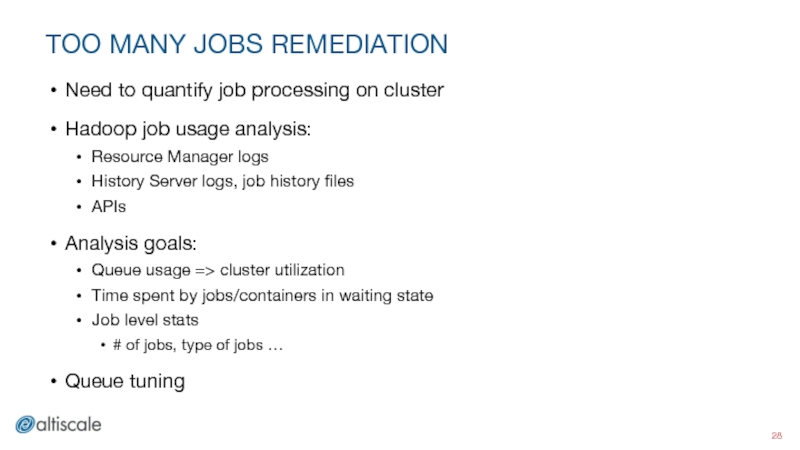

- 28. TOO MANY JOBS REMEDIATION Need to quantify

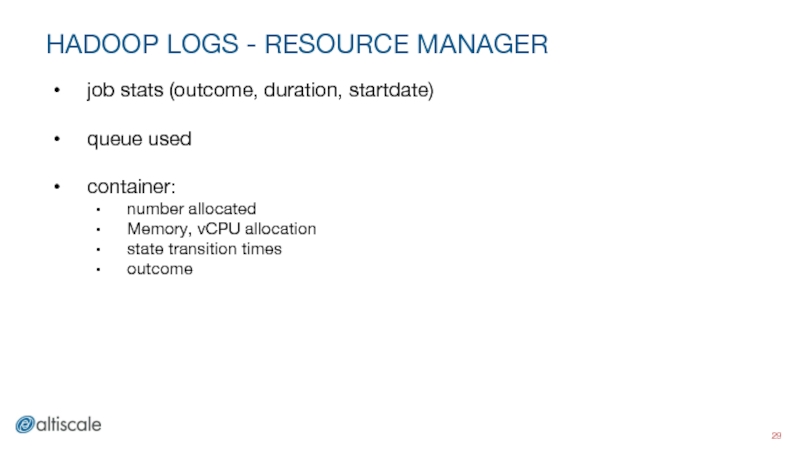

- 29. HADOOP LOGS - RESOURCE MANAGER job stats

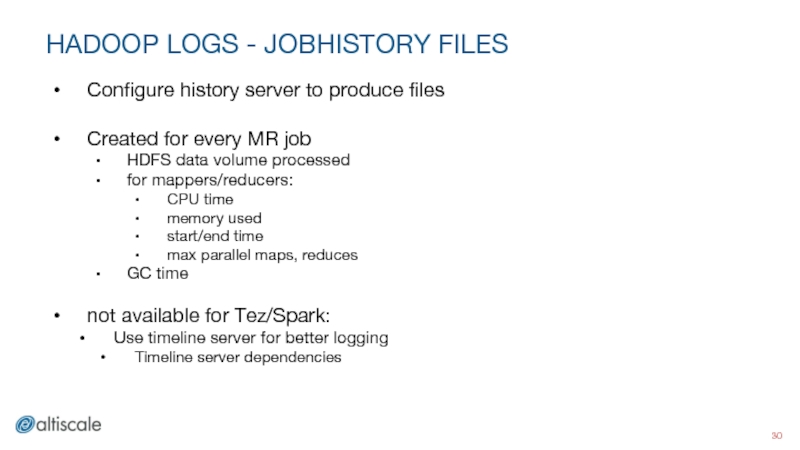

- 30. HADOOP LOGS - JOBHISTORY FILES Configure history

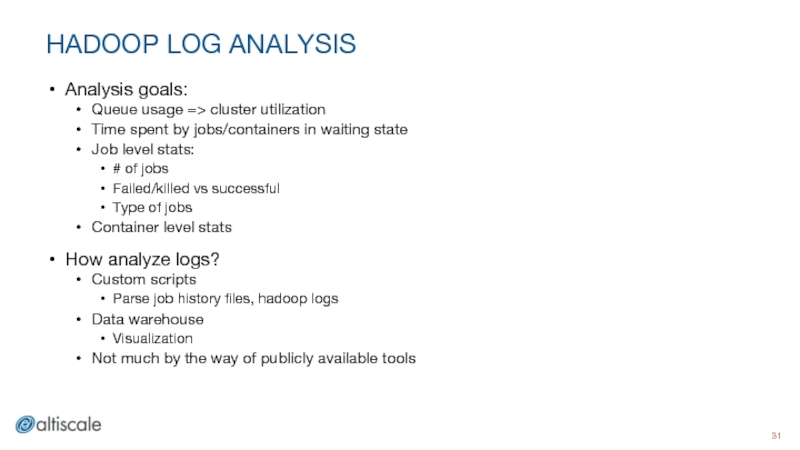

- 31. HADOOP LOG ANALYSIS Analysis goals: Queue usage

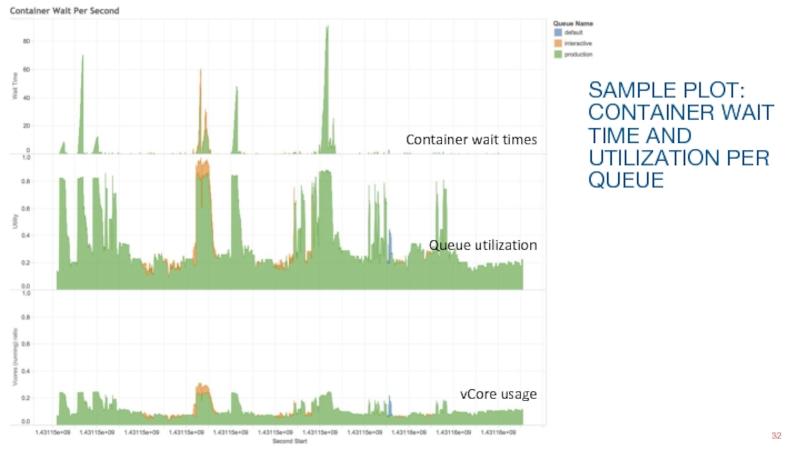

- 32. SAMPLE PLOT: CONTAINER WAIT TIME AND UTILIZATION

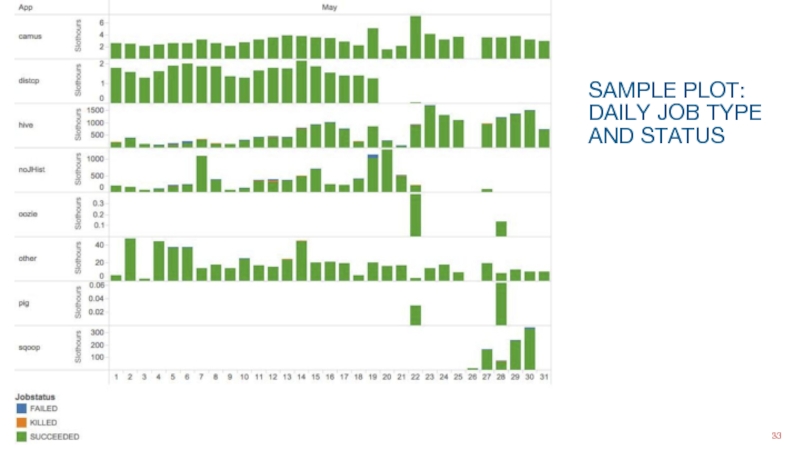

- 33. SAMPLE PLOT: DAILY JOB TYPE AND STATUS

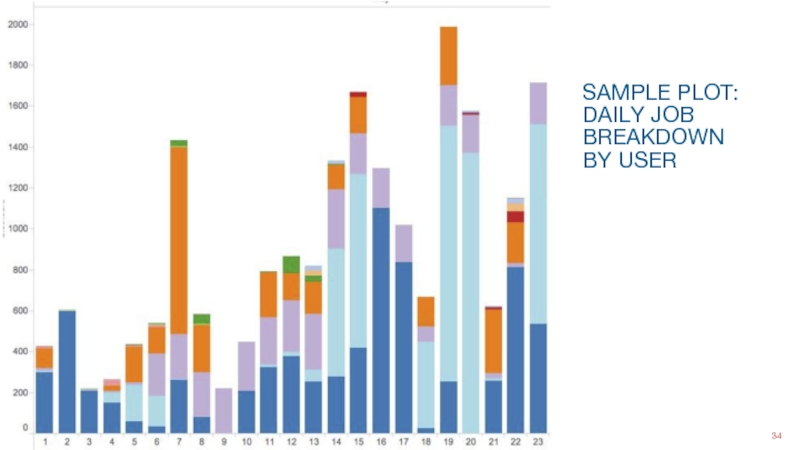

- 34. SAMPLE PLOT: DAILY JOB BREAKDOWN BY USER

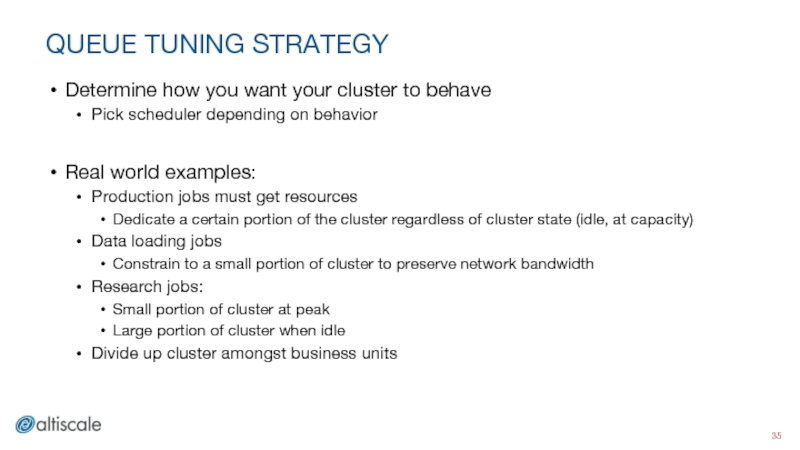

- 35. QUEUE TUNING STRATEGY Determine how you want

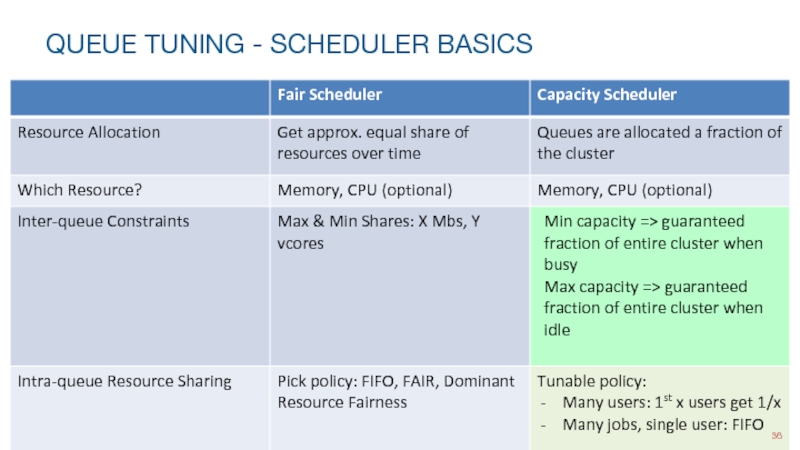

- 36. QUEUE TUNING - SCHEDULER BASICS

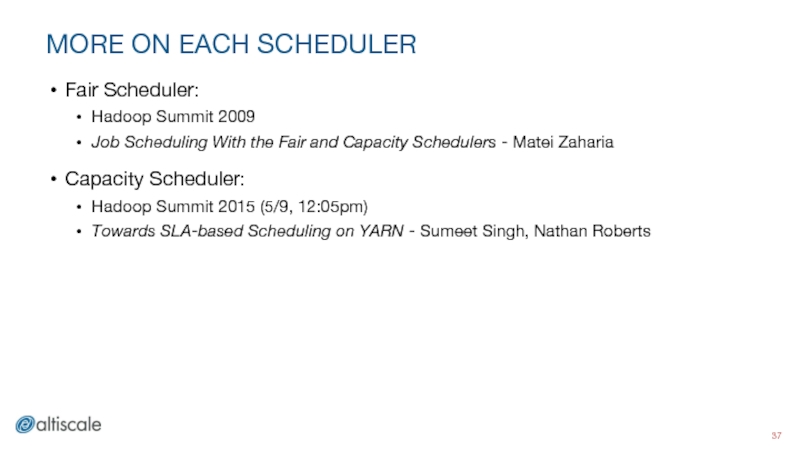

- 37. MORE ON EACH SCHEDULER Fair Scheduler:

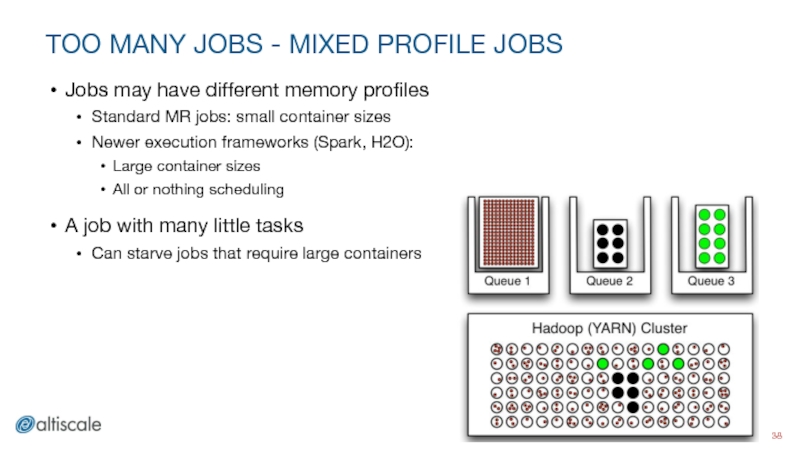

- 38. TOO MANY JOBS - MIXED PROFILE JOBS

- 39. TOO MANY JOBS - MIXED PROFILE JOBS

- 40. TOO MANY JOBS - HARDENING YOUR CLUSTER

- 41. FAILURE CATEGORY 3 - TOO MANY USERS

- 42. TOO MANY USERS - QUEUE ACCESS Use

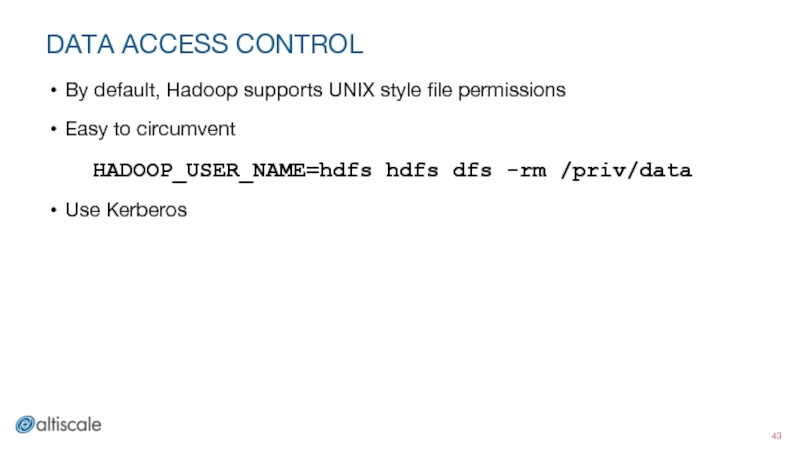

- 43. DATA ACCESS CONTROL By default, Hadoop supports

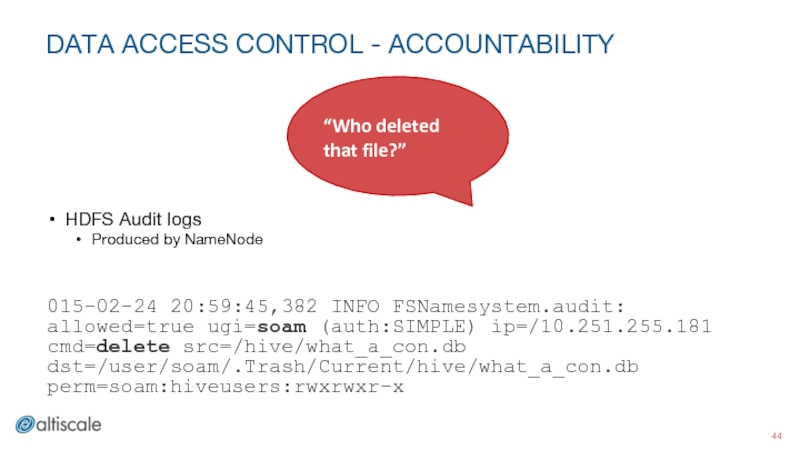

- 44. DATA ACCESS CONTROL - ACCOUNTABILITY

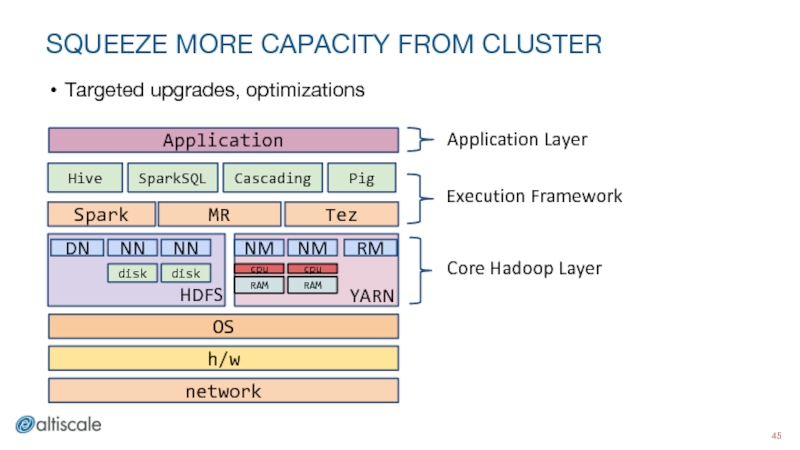

- 45. SQUEEZE MORE CAPACITY FROM CLUSTER

- 46. SQUEEZE MORE CAPACITY FROM CLUSTER Optimizations: Application

- 47. QUESTIONS? COMMENTS?

Slide 1: MANAGING GROWTH IN PRODUCTION HADOOP DEPLOYMENTS

Soam Acharya

@soamwork

Charles Wimmer

@cwimmer

Altiscale

@altiscale

HADOOP SUMMIT 2015

SAN JOSE

Slide 2: ALTISCALE : INFRASTRUCTURE NERDS

Soam Acharya - Head of Application Engineering

Formerly Chief

Charles Wimmer, Head of Operations

Former Yahoo! & LinkedIn SRE

Managed 40000 nodes in Hadoop clusters at Yahoo!

Hadoop as a Service, built and managed by Big Data, SaaS, and enterprise software veterans

Yahoo!, Google, LinkedIn, VMware, Oracle, ...

Slide 6: BUT THEN ...

Your data scientists get on the cluster and start building models

Your BI team starts running interactive SQL-on-Hadoop queries ...

Slide 7: BUT THEN ...

Your data scientists get on the cluster and start building models

Your BI team starts running interactive SQL-on-Hadoop queries ...

Your mobile team starts sending real-time (RT) events into the cluster ...

Slide 8: BUT THEN ...

Your data scientists get on the cluster and start building models

Your BI team starts running interactive SQL-on-Hadoop queries ...

Your mobile team starts sending real-time (RT) events into the cluster ...

You sign up more clients

And the input data for your initial use case doubles ...

Slide 11: THE “SUCCESS DISASTER” SCENARIO

Initial success

Many subsequent use cases on cluster

Cluster gets

Slide 13: HOW TO EXTRICATE YOURSELF?

Short term strategy:

Get more resources for your cluster

Expand cluster

More headroom for longer term strategy

Longer term strategy

Slide 14: LONGER TERM STRATEGY

Can’t cover every scenario

Per failure category:

Selected pressure points (PPs)

Can

Identify and shore up pressure points

Squeeze more capacity from cluster

Slide 17: PRESSURE POINT - HDFS AT CAPACITY

Unpredictable cluster behavior

Transient errors

Hadoop daemons can’t

Execution framework errors:

Hive unable to run queries that create temp tables

Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: org.apache.hadoop.ipc.RemoteException(java.io.IOException): File /tmp/hive-user/hive_2014-07-23_08-43-40_408_2604848084853512498-1/_task_tmp.-ext-10001/_tmp.000121_0 could only be replicated to 1 nodes instead of minReplication (=2). There are xx datanode(s) running and no node(s) are excluded in this operation.

Slide 18: HDFS AT CAPACITY MITIGATION

Use HDFS quotas!

hdfs dfsadmin -setSpaceQuota 113367670 /

Quotas can

Cannot be set per user

Protection against accidental cluster destabilization
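When sizing a quota for a directory, it helps to remember that HDFS charges every replica of a block against the space quota, so the value passed to `-setSpaceQuota` is in raw bytes, not logical bytes. A minimal sketch of that arithmetic follows; the helper name `space_quota_bytes`, the default replication factor of 3, and the path `/data/research` are assumptions for illustration, not part of the original slides.

```bash
# Hypothetical helper: convert a logical data size in GiB into the raw byte
# count that -setSpaceQuota expects. HDFS charges every replica of a block
# against the space quota, so the logical size is multiplied by replication.
space_quota_bytes() {
  logical_gib=$1
  replication=${2:-3}   # assumption: replication factor 3 unless overridden
  echo $(( logical_gib * replication * 1024 * 1024 * 1024 ))
}

# Example use on a live cluster (needs HDFS superuser rights; the path
# /data/research is made up for illustration):
#   hdfs dfsadmin -setSpaceQuota "$(space_quota_bytes 100)" /data/research
#   hdfs dfs -count -q /data/research   # reports the quota and what remains
```

Because quotas attach to directories rather than users, a per-team or per-project directory layout is what makes this kind of limit enforceable.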

Slide 19: TOO MANY OBJECTS

“Elephants are afraid of mice. Hadoop is afraid of

# of dirs + files

# of blocks

Slide 20: TOO MANY OBJECTS

Memory pressure:

Namenode heap: too many files + directories +

Datanode heap: too many blocks allocated per node

Performance overhead

Too much time spent on container creation and teardown

More time spent in execution framework than actual application
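To get a feel for the NameNode side of this memory pressure, a back-of-the-envelope estimate can be useful. The sketch below assumes roughly 150 bytes of heap per namespace object (file, directory, or block), which is a commonly quoted rule of thumb and not a figure from the slides; the helper name `nn_heap_estimate_mib` is likewise hypothetical. Real heap needs vary by Hadoop version and path lengths, so treat the result as an order-of-magnitude guide only.

```bash
# Rough NameNode heap estimate. ASSUMPTION: about 150 bytes of heap per
# namespace object (file, directory, or block), a commonly quoted rule of
# thumb rather than a measured figure for any particular Hadoop version.
nn_heap_estimate_mib() {
  files=$1; dirs=$2; blocks=$3
  bytes_per_object=150
  echo $(( (files + dirs + blocks) * bytes_per_object / 1024 / 1024 ))
}

# Example: 8M files, 1M directories, 1M blocks
#   nn_heap_estimate_mib 8000000 1000000 1000000
```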

Slide 21: WHERE ARE THE OBJECTS?

Use HDFS count:

hdfs dfs -count -q

Number

On per directory basis

Use fsimage files:

Can be produced by NN

hdfs oiv

Detailed breakdown of the HDFS file system

Hard!
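As a lighter-weight alternative to fsimage analysis, the per-directory output of `hdfs dfs -count` can be ranked by object count. The sketch below assumes the plain (non `-q`) output layout of `DIR_COUNT FILE_COUNT CONTENT_SIZE PATHNAME` and paths without embedded spaces; the function name `rank_by_objects` and the `/user/*` glob are illustrative assumptions.

```bash
# Read `hdfs dfs -count` output (DIR_COUNT FILE_COUNT CONTENT_SIZE PATHNAME)
# on stdin and print "objects<TAB>path", largest object count first.
# With -count -q there are four extra leading quota columns, so the field
# numbers below would need to shift by four.
rank_by_objects() {
  awk '{ printf "%d\t%s\n", $1 + $2, $4 }' | LC_ALL=C sort -rn
}

# Example against a live cluster (the /user/* glob is an assumption):
#   hdfs dfs -count '/user/*' | rank_by_objects | head -20
```

Directories near the top of this list with a small total content size are the usual small-file suspects.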

Slide 22: TOO MANY OBJECTS - MITIGATION

Short term:

Increase NN/DN heap sizes

Node physical limits

Increase cluster

Longer term:

Find and compact

Coalesce multiple files

Use HAR

Slide 23: COALESCE MULTIPLE FILES I

Hadoop streaming job

Whatever Hadoop can read on cluster

LZO output

    hadoop jar /opt/hadoop/share/hadoop/tools/lib/hadoop-streaming-*.jar \
      -D mapreduce.job.reduces=40 \
      -D mapred.output.compress=true \
      -D mapred.output.compression.codec=com.hadoop.compression.lzo.LzopCodec \
      -D mapreduce.output.fileoutputformat.compress.type=BLOCK \
      -D mapreduce.reduce.memory.mb=8192 \
      -mapper /bin/cat \
      -reducer /bin/cat \
      -input "$IN_DIR" \
      -output "$DIR"
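In the streaming job above, each reducer writes one output file, so `mapreduce.job.reduces` effectively sets how many files the input is coalesced into. One way to pick that number is to divide the input size by a target file size and round up. The sketch below is an assumption-laden illustration: the helper name `reducers_for_target_size` and the 256 MiB target are made up, and because the output is compressed, actual files will come out smaller than the target.

```bash
# Hypothetical helper: number of reducers (and therefore output files)
# needed so that each file holds at most target_bytes of uncompressed input.
# Uses integer ceiling division.
reducers_for_target_size() {
  total_bytes=$1
  target_bytes=$2
  echo $(( (total_bytes + target_bytes - 1) / target_bytes ))
}

# Example on a live cluster (256 MiB target is an arbitrary choice):
#   total=$(hdfs dfs -du -s "$IN_DIR" | awk '{ print $1 }')
#   reducers_for_target_size "$total" $(( 256 * 1024 * 1024 ))
```

Note that an identity job like this one shuffles and sorts records, so the original line order across files is not preserved.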

Slide 24: COALESCE MULTIPLE FILES II

Build index for LZO output

Tell hadoop where the

    hadoop jar /opt/hadoop/share/hadoop/common/lib/hadoop-lzo-*.jar \
      com.hadoop.compression.lzo.DistributedLzoIndexer \
      "$DIR"

Slide 25: COMBINE FILES INTO HAR

HAR: Hadoop Archive

hadoop archive -archiveName .har -p

MR job to produce archive

Watch out for replication factor

On versions 2.4 and earlier, source files are set to a default replication factor of 10

Not good for small clusters

-r
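To make the archive invocation concrete, here is a small dry-run sketch that only prints the command so it can be inspected before running. It assumes a Hadoop version whose `hadoop archive` tool accepts `-r` and follows the documented argument order (archive name, parent path, optional replication, sources relative to the parent, destination). The function name `har_command` and every path in the example are hypothetical.

```bash
# Hypothetical dry-run helper: print (do not execute) a `hadoop archive`
# command. Arguments: archive name (without .har), parent path, source
# directory relative to the parent, destination directory, and an optional
# replication factor (ASSUMED default of 3, suitable for small clusters).
har_command() {
  name=$1; parent=$2; src=$3; dest=$4; repl=${5:-3}
  echo "hadoop archive -archiveName ${name}.har -p ${parent} -r ${repl} ${src} ${dest}"
}

# Example (all names are made up):
#   har_command logs-2015 /data logs /archive 2
# After running the printed command, the archive could be listed with:
#   hdfs dfs -ls har:///archive/logs-2015.har
```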

Slide 26: COMBINE FILES INTO HAR

HAR archives are useful if you want to

    [alti_soam@desktop ~]$ hdfs dfs -ls har:///tmp/alti_soam_test.har
    Found 3 items
    drwxr-xr-x   - alti_soam hdfs          0 2013-09-03 22:44 har:/tmp/alti_soam_test.har/examples
    drwxr-xr-x   - alti_soam hdfs          0 2013-11-16 03:53 har:/tmp/alti_soam_test.har/test-pig-avro-dir
    drwxr-xr-x   - alti_soam hdfs          0 2013-11-12 22:23 har:/tmp/alti_soam_test.har/test-camus

Slide 27: FAILURE CATEGORY 2 - TOO MANY JOBS

“Help! My job is stuck!”

Jobs

Jobs don’t start

“Right” jobs finish last

Mixed profile job issues

Slide 28: TOO MANY JOBS REMEDIATION

Need to quantify job processing on cluster

Hadoop job

Resource Manager logs

History Server logs, job history files

APIs

Analysis goals:

Queue usage => cluster utilization

Time spent by jobs/containers in waiting state

Job level stats

# of jobs, type of jobs …

Queue tuning

Slide 29: HADOOP LOGS - RESOURCE MANAGER

job stats (outcome, duration, startdate)

queue used

container:

number allocated

Memory,

state transition times

outcome
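Once container state transition times have been pulled out of the Resource Manager logs, the time containers spend waiting can be averaged per queue. The sketch below deliberately sidesteps log parsing, since the exact log line format depends on the Hadoop version. It assumes the data has already been extracted into a hypothetical tab-separated layout of `queue`, `requested_ms`, `started_ms`; the function name `avg_wait_by_queue` is also made up.

```bash
# stdin: tab-separated rows "queue<TAB>requested_ms<TAB>started_ms".
# This input layout is an ASSUMED intermediate format produced by your own
# log extraction step, not something Hadoop emits directly.
# Prints "queue<TAB>average_wait_ms" (truncated to an integer), sorted by queue.
avg_wait_by_queue() {
  awk -F '\t' '
    { total[$1] += $3 - $2; n[$1]++ }
    END { for (q in n) printf "%s\t%d\n", q, total[q] / n[q] }
  ' | LC_ALL=C sort
}
```

A queue whose average wait grows while its utilization stays flat is a candidate for a capacity or scheduling change.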

Slide 30: HADOOP LOGS - JOBHISTORY FILES

Configure history server to produce files

Created for

HDFS data volume processed

for mappers/reducers:

CPU time

memory used

start/end time

max parallel maps, reduces

GC time

not available for Tez/Spark:

Use timeline server for better logging

Timeline server dependencies

Slide 31: HADOOP LOG ANALYSIS

Analysis goals:

Queue usage => cluster utilization

Time spent by jobs/containers

Job level stats:

# of jobs

Failed/killed vs successful

Type of jobs

Container level stats

How to analyze logs?

Custom scripts

Parse job history files, hadoop logs

Data warehouse

Visualization

Not much in the way of publicly available tools
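A custom script for job level stats can be quite small. The sketch below counts jobs per queue and final status from a hypothetical tab-separated export with the columns `user`, `queue`, `type`, `finalStatus`, `elapsedMs`. That layout is an assumption: it could be produced, for example, from the Resource Manager REST API application list, whose records carry fields such as `user`, `queue`, `applicationType`, `finalStatus` and `elapsedTime`. The function name `jobs_by_queue_status` is illustrative.

```bash
# stdin: tab-separated rows "user<TAB>queue<TAB>type<TAB>finalStatus<TAB>elapsedMs".
# ASSUMED export format; adapt the field numbers to whatever your own
# extraction step produces.
# Prints "queue<TAB>finalStatus<TAB>job_count", sorted by queue then status.
jobs_by_queue_status() {
  awk -F '\t' '
    { n[$2 "\t" $4]++ }
    END { for (k in n) print k "\t" n[k] }
  ' | LC_ALL=C sort
}
```

Swapping the grouping key to `$1` (user) or `$3` (job type) gives the per-user and per-type breakdowns listed in the analysis goals.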

Slide 32: SAMPLE PLOT: CONTAINER WAIT TIME AND UTILIZATION PER QUEUE

Container wait times

Queue

vCore usage

Slide 35: QUEUE TUNING STRATEGY

Determine how you want your cluster to behave

Pick scheduler

Real world examples:

Production jobs must get resources

Dedicate a certain portion of the cluster regardless of cluster state (idle, at capacity)

Data loading jobs

Constrain to a small portion of cluster to preserve network bandwidth

Research jobs:

Small portion of cluster at peak

Large portion of cluster when idle

Divide up cluster amongst business units
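With the Capacity Scheduler, goals like these are typically expressed through each queue's guaranteed capacity and its maximum capacity (the `yarn.scheduler.capacity.root.<queue>.capacity` and `.maximum-capacity` properties), and the capacities of sibling queues must sum to 100. The sketch below is a sanity check for a proposed split before it goes into configuration. The queue names, percentages, and the function name `check_queue_plan` are all hypothetical.

```bash
# Each argument is "queue:capacity:max_capacity", in whole percent.
# Checks two Capacity Scheduler constraints for sibling queues:
#   1. maximum capacity is not below guaranteed capacity
#   2. guaranteed capacities sum to exactly 100
# Prints "ok" on success, or a reason and a non-zero status on failure.
check_queue_plan() {
  total=0
  for spec in "$@"; do
    cap=$(echo "$spec" | cut -d: -f2)
    max=$(echo "$spec" | cut -d: -f3)
    [ "$max" -ge "$cap" ] || { echo "max below capacity in $spec"; return 1; }
    total=$(( total + cap ))
  done
  [ "$total" -eq 100 ] || { echo "capacities sum to $total, not 100"; return 1; }
  echo "ok"
}

# Example plan (made-up numbers): production is guaranteed 60%, data loading
# is pinned at 10%, research gets 30% but may grow to 90% when idle.
#   check_queue_plan production:60:100 loading:10:10 research:30:90
```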

Slide 37: MORE ON EACH SCHEDULER

Fair Scheduler:

Hadoop Summit 2009

Job Scheduling With the

Capacity Scheduler:

Hadoop Summit 2015 (6/9, 12:05pm)

Towards SLA-based Scheduling on YARN - Sumeet Singh, Nathan Roberts

Slide 38: TOO MANY JOBS - MIXED PROFILE JOBS

Jobs may have different memory

Standard MR jobs: small container sizes

Newer execution frameworks (Spark, H2O):

Large container sizes

All or nothing scheduling

A job with many little tasks

Can starve jobs that require large containers

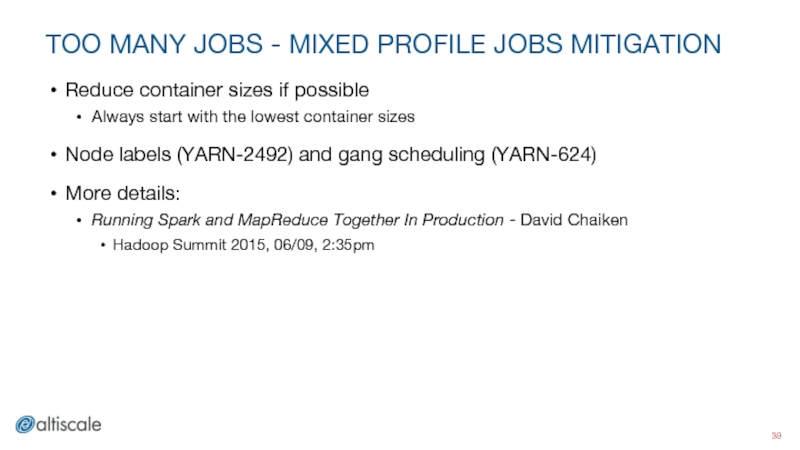

Slide 39: TOO MANY JOBS - MIXED PROFILE JOBS MITIGATION

Reduce container sizes if

Always start with the lowest container sizes

Node labels (YARN-2492) and gang scheduling (YARN-624)

More details:

Running Spark and MapReduce Together In Production - David Chaiken

Hadoop Summit 2015, 06/09, 2:35pm

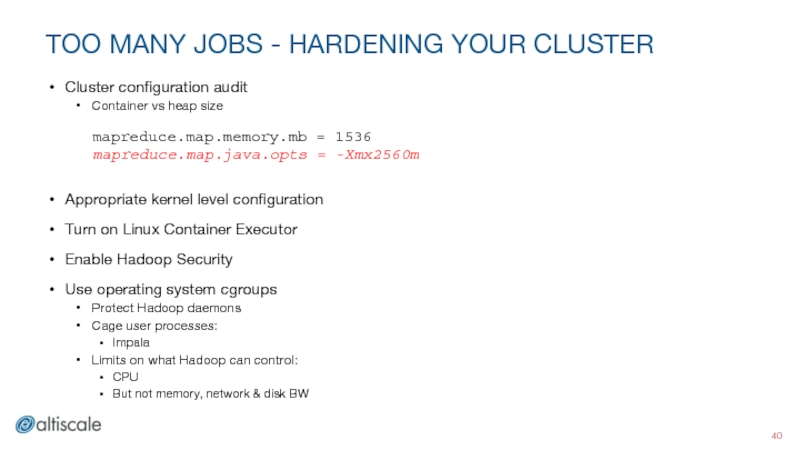

Slide 40: TOO MANY JOBS - HARDENING YOUR CLUSTER

Cluster configuration audit

Container vs heap

Appropriate kernel level configuration

Turn on Linux Container Executor

Enable Hadoop Security

Use operating system cgroups

Protect Hadoop daemons

Cage user processes:

Impala

Limits on what Hadoop can control:

CPU

But not memory, network & disk BW

mapreduce.map.memory.mb = 1536

mapreduce.map.java.opts = -Xmx2560m

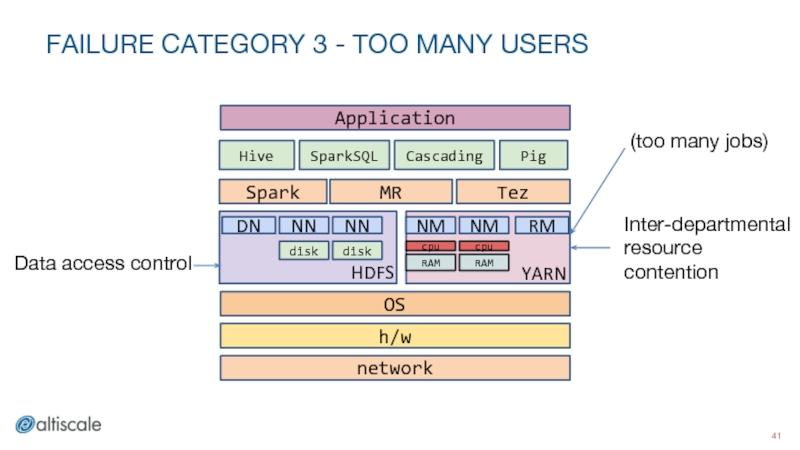
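The "container vs heap" item of a configuration audit can be partly automated: the JVM heap given in `mapreduce.map.java.opts` should fit inside the container size given in `mapreduce.map.memory.mb`, with some headroom for non-heap memory. With the pair of values shown on this slide (a 1536 MB container and `-Xmx2560m`), the heap is larger than the container, which is the kind of mismatch such an audit should flag. The sketch below is a minimal check under the assumption that `-Xmx` is given in `m` or `g` units; the function name `heap_fits_container` is hypothetical.

```bash
# Hypothetical audit helper. Arguments: container size in MB and the
# java.opts string. Prints "ok" if the -Xmx heap is strictly smaller than
# the container; otherwise prints the mismatch and returns non-zero.
# ASSUMPTION: -Xmx is expressed with an m/M or g/G suffix.
heap_fits_container() {
  container_mb=$1
  java_opts=$2
  xmx=$(printf '%s\n' "$java_opts" |
        sed -n 's/.*-Xmx\([0-9][0-9]*\)\([mMgG]\).*/\1 \2/p')
  [ -n "$xmx" ] || { echo "no -Xmx found"; return 2; }
  value=${xmx% *}   # numeric part
  unit=${xmx#* }    # unit suffix
  case $unit in g|G) value=$(( value * 1024 )) ;; esac
  if [ "$value" -lt "$container_mb" ]; then
    echo "ok"
  else
    echo "heap ${value}m >= container ${container_mb}m"
    return 1
  fi
}
```

The same check applies to the reducer settings (`mapreduce.reduce.memory.mb` and `mapreduce.reduce.java.opts`).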

Slide 41: FAILURE CATEGORY 3 - TOO MANY USERS

Data access control

Inter-departmental resource contention

(too

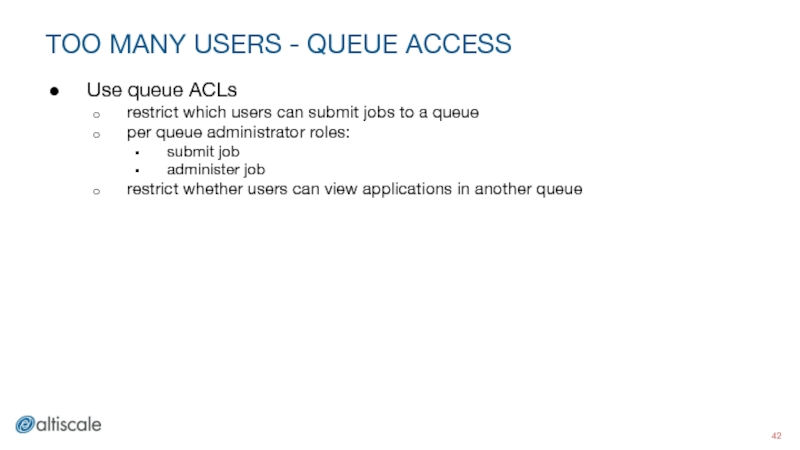

Slide 42: TOO MANY USERS - QUEUE ACCESS

Use queue ACLs

restrict which users can

per queue administrator roles:

submit job

administer job

restrict whether users can view applications in another queue

Slide 43: DATA ACCESS CONTROL

By default, Hadoop supports UNIX style file permissions

Easy to

HADOOP_USER_NAME=hdfs hdfs dfs -rm /priv/data

Use Kerberos

Slide 44: DATA ACCESS CONTROL - ACCOUNTABILITY

HDFS Audit logs

Produced by NameNode

2015-02-24 20:59:45,382 INFO
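Audit logs become useful for accountability once they are summarized. The sketch below counts operations per user and command. It assumes the common audit line layout of whitespace-separated `key=value` fields that include `ugi=` and `cmd=`; the function name `audit_summary` is hypothetical, and the log file path in the example is an assumption that varies by installation.

```bash
# stdin: HDFS audit log lines containing "ugi=<user>" and "cmd=<operation>"
# fields (ASSUMED layout). Prints "count<TAB>user<TAB>cmd", busiest first.
audit_summary() {
  awk '{
    ugi = ""; cmd = ""
    for (i = 1; i <= NF; i++) {
      if ($i ~ /^ugi=/) ugi = substr($i, 5)
      if ($i ~ /^cmd=/) cmd = substr($i, 5)
    }
    if (ugi != "" && cmd != "") n[ugi "\t" cmd]++
  } END { for (k in n) print n[k] "\t" k }' | LC_ALL=C sort -rn
}

# Example (the log path is an assumption; check your NameNode log4j settings):
#   audit_summary < /var/log/hadoop/hdfs-audit.log | head
```

Filtering the output for `delete` or `rename` commands is a quick way to find out who removed or moved a dataset.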

Slide 45: SQUEEZE MORE CAPACITY FROM CLUSTER

Application Layer

Execution Framework

Core Hadoop Layer

Targeted upgrades, optimizations

Slide 46: SQUEEZE MORE CAPACITY FROM CLUSTER

Optimizations:

Application layer:

Query optimizations, algorithmic level optimizations

Upgrading:

Execution Framework:

Tremendous

Pig, Cascading all continue to improve

Hadoop layer:

Recent focus on security, stability

Recommendation:

Focus on upgrading execution framework