Chapter 3

Processes. Processes & Threads (Chapter 3): presentation

Contents

- 1. Processes. Processes & Threads. (Chapter 3)

- 2. Process A program in execution An instance

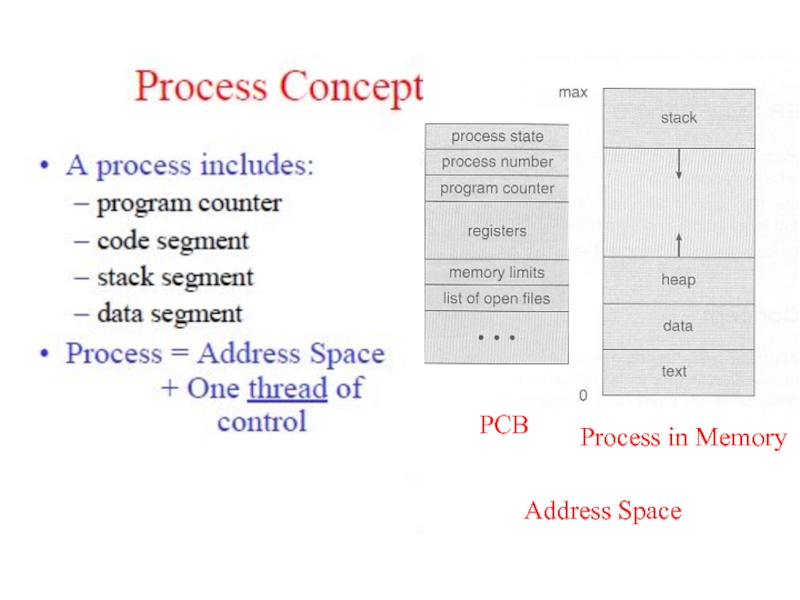

- 3. Address Space PCB Process in Memory

- 4. Multiprogramming The interleaved execution of two or

- 5. Processes The Process Model Multiprogramming of four

- 6. Multiprogramming

- 7. Cooperating Processes (I) Sequential programs consist of

- 8. Cooperating Processes (II) Cooperating processes need to

- 9. Threads: Motivation Process created and managed by

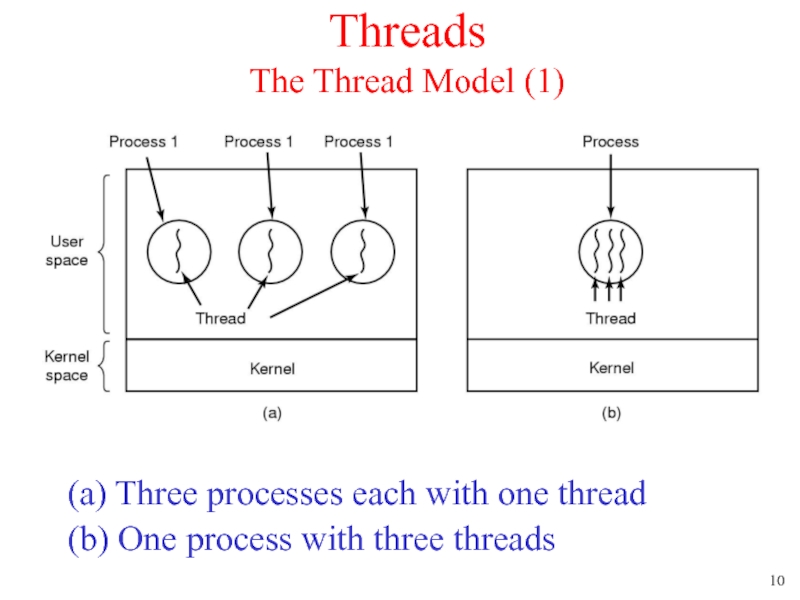

- 10. Threads The Thread Model (1) (a) Three

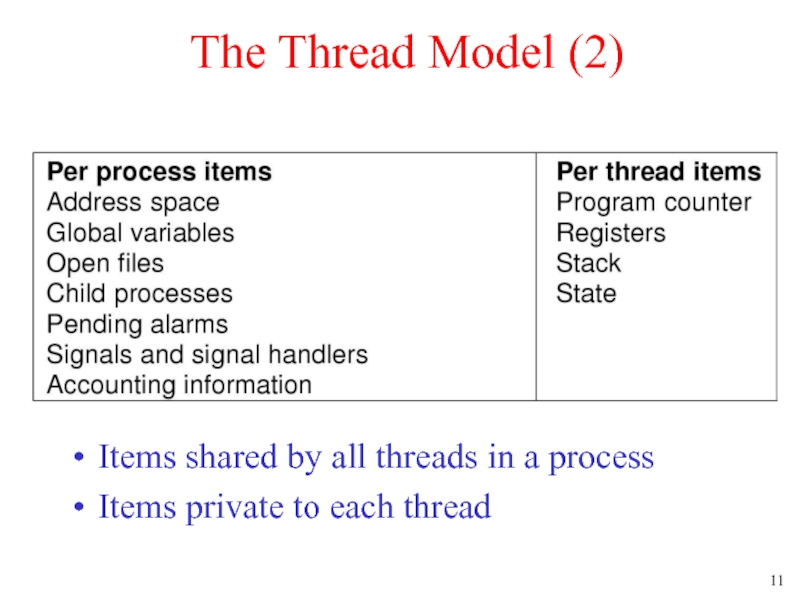

- 11. The Thread Model (2) Items shared by

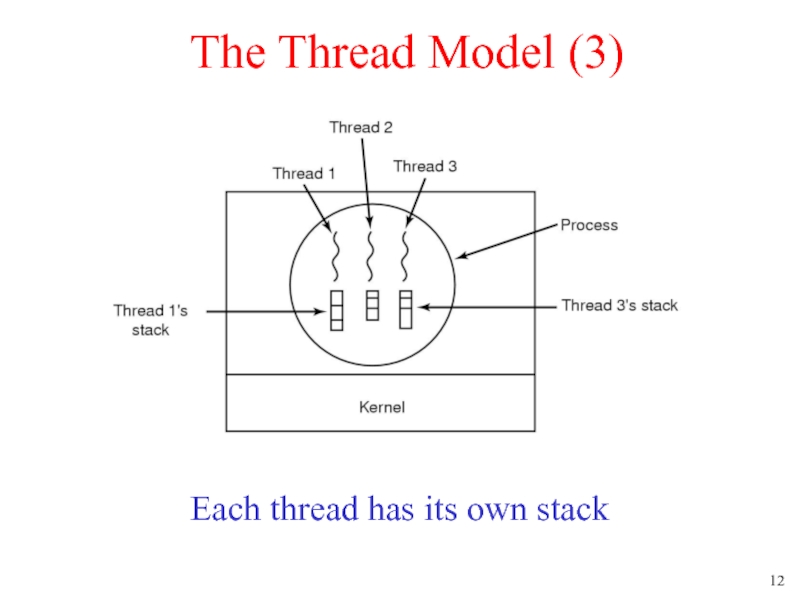

- 12. The Thread Model (3) Each thread has its own stack

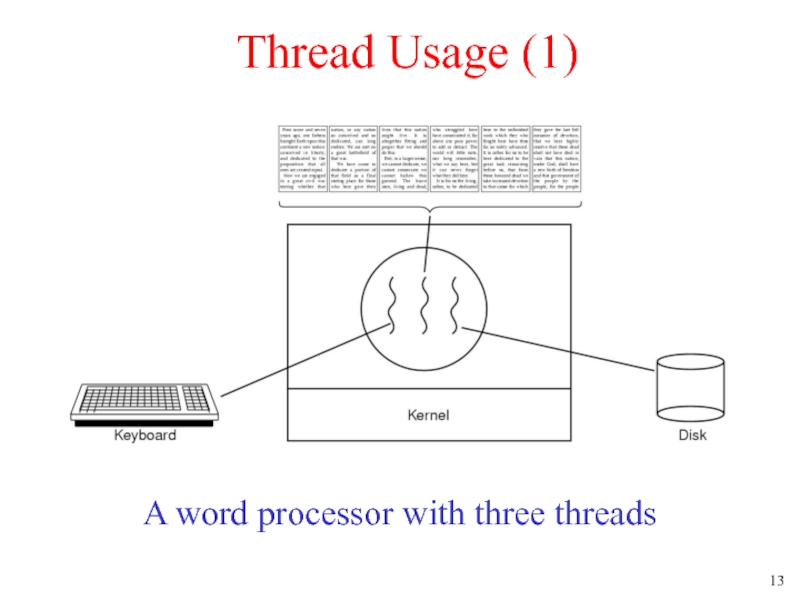

- 13. Thread Usage (1) A word processor with three threads

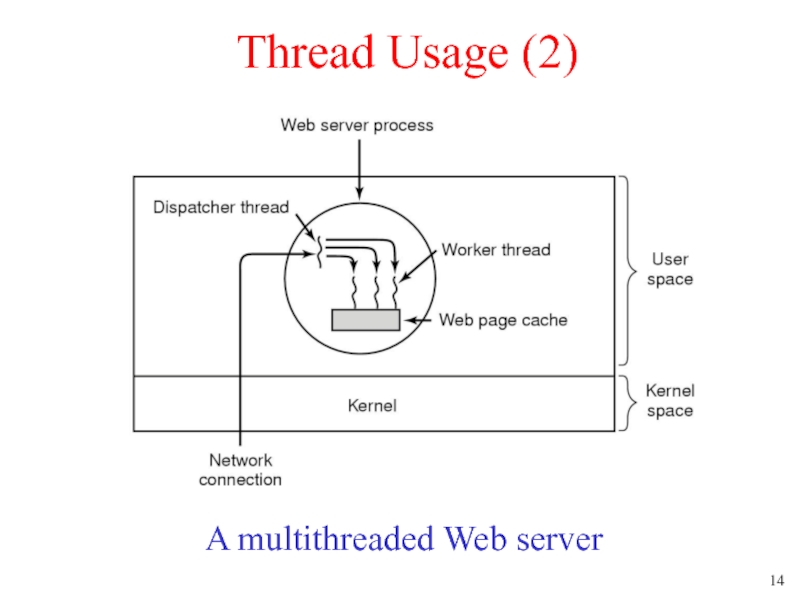

- 14. Thread Usage (2) A multithreaded Web server

- 16. Thread Implementation - Packages Threads are

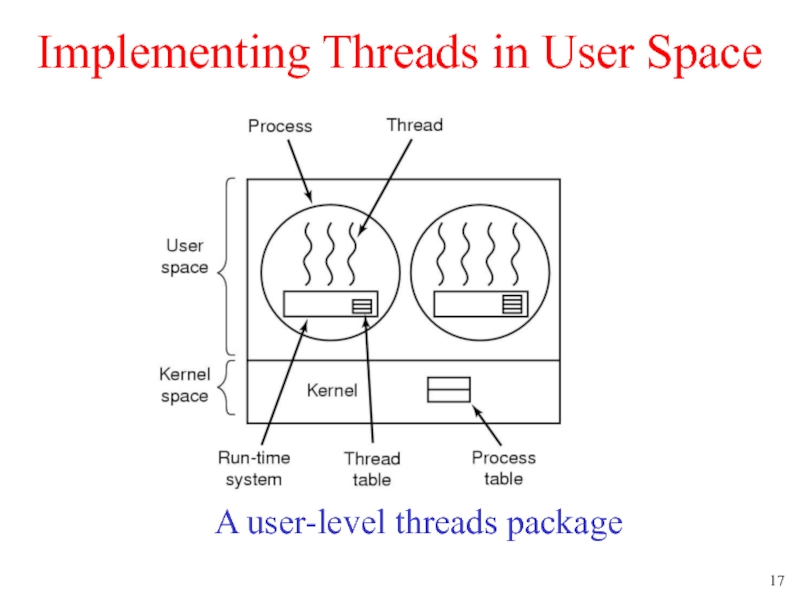

- 17. Implementing Threads in User Space A user-level threads package

- 18. User-Level Threads Thread management done by user-level

- 19. User-Level Threads Thread library entirely executed in

- 20. Kernel-Level Threads Kernel is aware of and

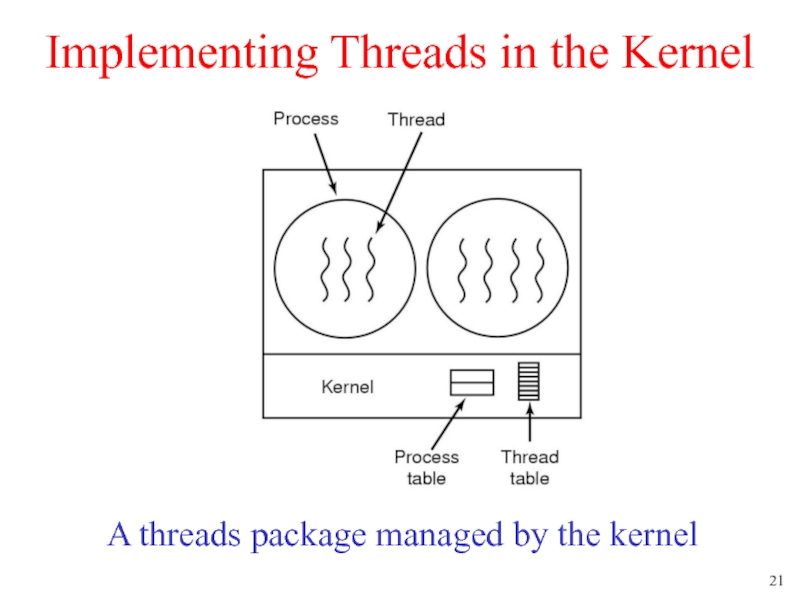

- 21. Implementing Threads in the Kernel A threads package managed by the kernel

- 22. Kernel Threads Supported by the Kernel Examples: newer versions of Windows UNIX Linux

- 23. Linux Threads Linux refers to them as

- 24. Pthreads A POSIX standard (IEEE 1003.1c) API

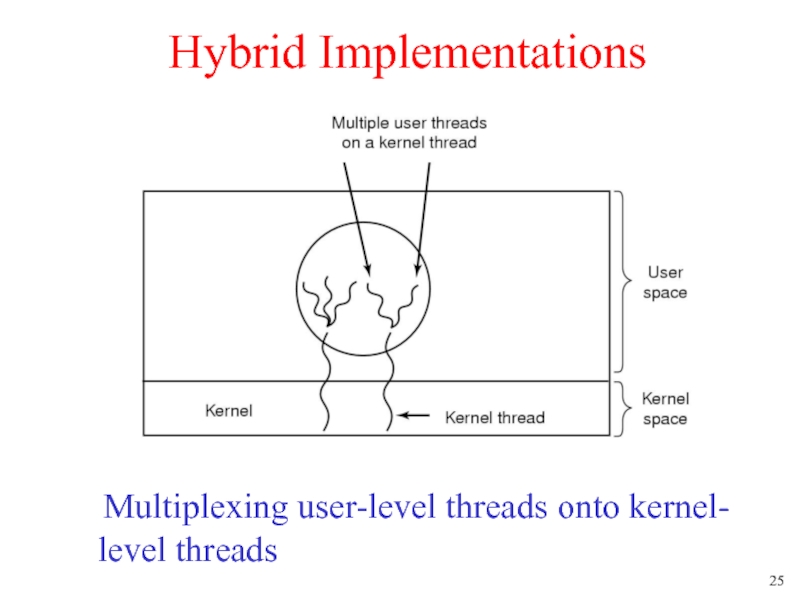

- 25. Hybrid Implementations Multiplexing user-level threads onto kernel- level threads

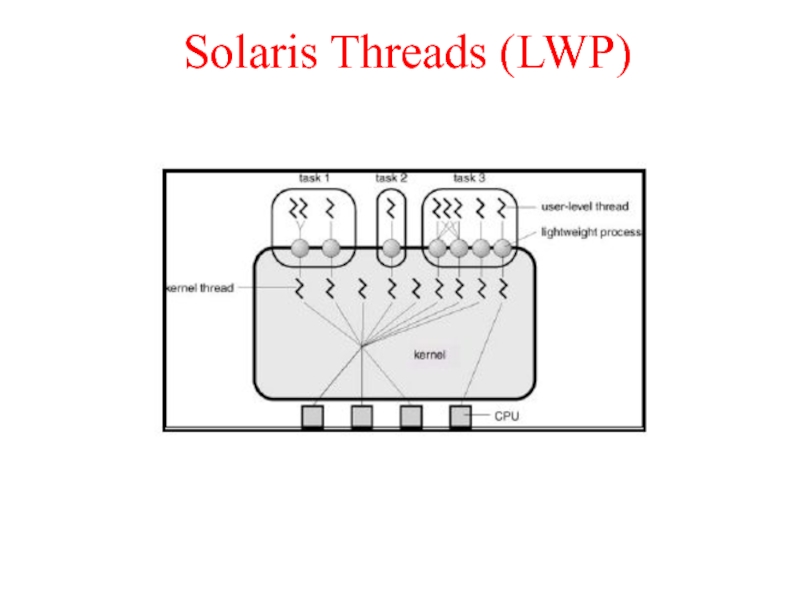

- 26. Solaris Threads (LWP)

- 27. LWP Advantages Cheap user-level thread management

Slide 1: Processes

Part I

Processes & Threads*

*With reference to slides by Dr. Sanjeev Setia, George Mason University

Slide 2: Process

- A program in execution
- An instance of a program running on a computer
- The entity that can be assigned to and executed on a processor
- A unit of activity characterized by
  - the execution of a sequence of instructions
  - a current state
  - an associated set of system resources

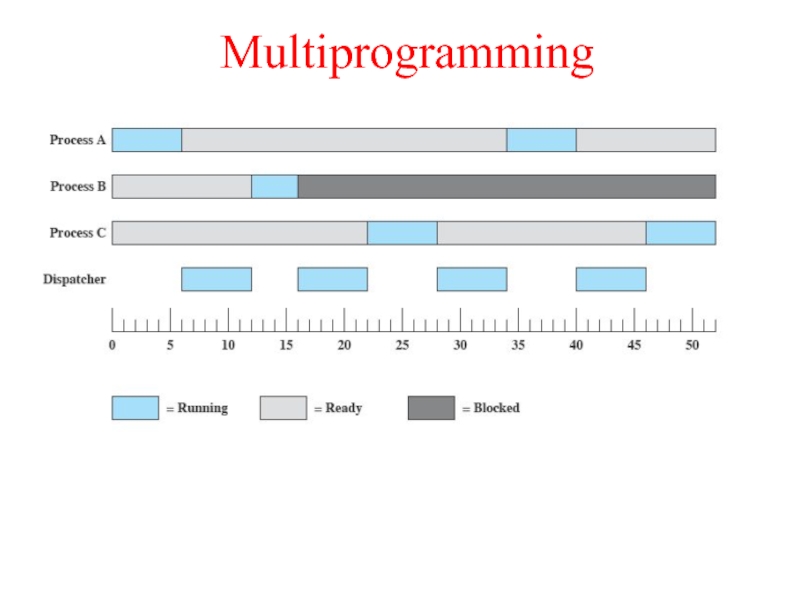

Slide 4: Multiprogramming

- The interleaved execution of two or more computer programs by a single processor
- An important technique that
  - enables a time-sharing system
  - allows the OS to overlap I/O and computation, creating an efficient system

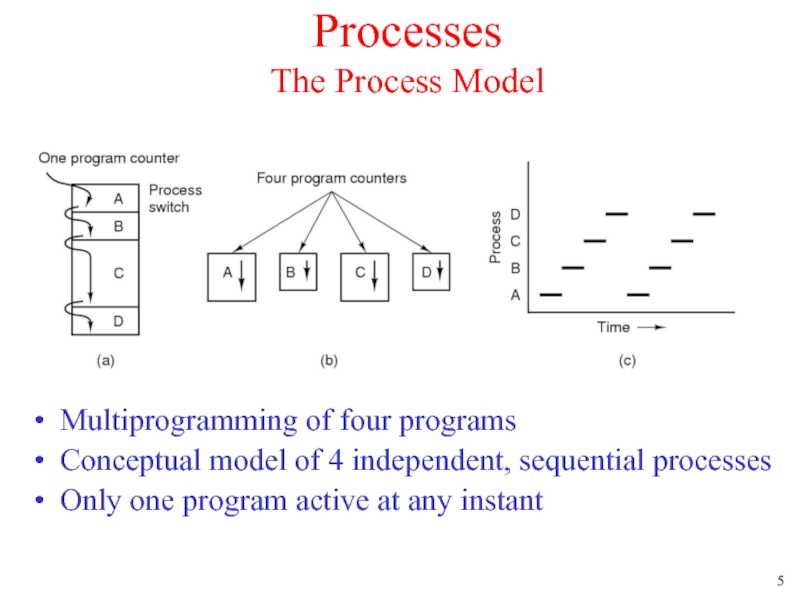
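The benefit of overlapping I/O and computation can be illustrated with a small simulation. This is only a sketch under simplifying assumptions that are not in the slides: each job is a hypothetical list of `(cpu_time, io_time)` bursts, I/O operations of different jobs never contend for a device, and the single CPU serves jobs in the order they become ready. The function names are illustrative.

```python
import heapq


def sequential_time(jobs):
    """Total time when jobs run one after another with no overlap."""
    return sum(cpu + io for job in jobs for cpu, io in job)


def multiprogrammed_time(jobs):
    """Total time when the CPU runs another job while one waits for I/O."""
    # Each entry is (time the job becomes ready, job index, burst index).
    ready = [(0, j, 0) for j in range(len(jobs))]
    heapq.heapify(ready)
    cpu_free = 0   # time at which the single CPU is next available
    finish = 0     # completion time of the last burst seen so far
    while ready:
        t, j, b = heapq.heappop(ready)
        cpu, io = jobs[j][b]
        start = max(t, cpu_free)      # wait for the CPU if it is busy
        cpu_free = start + cpu        # CPU is released once the I/O starts
        done = cpu_free + io          # I/O proceeds without using the CPU
        finish = max(finish, done)
        if b + 1 < len(jobs[j]):
            heapq.heappush(ready, (done, j, b + 1))
    return finish


if __name__ == "__main__":
    # Two jobs, each with two bursts: 1 time unit of CPU, then 3 units of I/O.
    jobs = [[(1, 3), (1, 3)], [(1, 3), (1, 3)]]
    print(sequential_time(jobs), multiprogrammed_time(jobs))
```

In this toy workload the CPU is idle most of the time when jobs run back to back, so interleaving them shortens the total time considerably.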

Slide 5: Processes

The Process Model

- Multiprogramming of four programs
- Conceptual model of 4 independent, sequential processes
- Only one program active at any instant

Slide 7: Cooperating Processes (I)

- Sequential programs consist of a single process
- Concurrent applications consist of multiple cooperating processes that execute concurrently
- Advantages
  - Can exploit multiple CPUs (hardware concurrency) to speed up the application
  - Applications can benefit from software concurrency, e.g., web servers, window systems

Slide 8: Cooperating Processes (II)

- Cooperating processes need to share information
- Since each process has its own address space, OS mechanisms are needed to let processes exchange information
- Two paradigms for cooperating processes
  - Shared memory
    - The OS enables two independent processes to have a shared memory segment in their address spaces
  - Message passing
    - The OS provides mechanisms for processes to send and receive messages
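The two paradigms can be sketched on a Unix-like system as follows. This is a minimal illustration, not the mechanism the slides have in mind: it assumes `os.fork()` is available, uses a pipe as the message-passing channel, and uses an anonymous `mmap` region (shared across `fork` by default on Unix) as the shared memory segment. The function names are hypothetical.

```python
import mmap
import os
import struct


def message_passing(text):
    """Child sends `text` to the parent through a kernel-managed pipe."""
    read_fd, write_fd = os.pipe()
    pid = os.fork()
    if pid == 0:                       # child: the sender
        os.close(read_fd)
        os.write(write_fd, text.encode())
        os.close(write_fd)
        os._exit(0)                    # exit without running parent cleanup
    os.close(write_fd)                 # parent: the receiver
    chunks = []
    while True:
        chunk = os.read(read_fd, 1024)
        if not chunk:                  # empty read: the sender closed the pipe
            break
        chunks.append(chunk)
    os.close(read_fd)
    os.waitpid(pid, 0)
    return b"".join(chunks).decode()


def shared_memory(value):
    """Child stores an integer in a segment mapped into both address spaces."""
    segment = mmap.mmap(-1, 8)         # anonymous mapping, shared after fork
    pid = os.fork()
    if pid == 0:                       # child: writes directly into the segment
        segment[:8] = struct.pack("q", value)
        os._exit(0)
    os.waitpid(pid, 0)                 # parent: wait, then read the same bytes
    (result,) = struct.unpack("q", segment[:8])
    segment.close()
    return result


if __name__ == "__main__":
    print(message_passing("hello"))
    print(shared_memory(42))
```

With message passing, every transfer goes through the kernel. With shared memory, the kernel is involved only in setting up the segment, but the processes must coordinate access themselves (here, the parent simply waits for the child to exit).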

Slide 9: Threads: Motivation

- Processes are created and managed by the OS kernel
- Process creation is expensive, e.g., the fork system call
- Context switching is expensive
- IPC requires kernel intervention, which is expensive
- Cooperating processes have no need for memory protection, i.e., separate address spaces

Slide 10: Threads

The Thread Model (1)

- (a) Three processes each with one thread
- (b) One process with three threads
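The difference between cases (a) and (b) shows up in what the units of execution can see. The sketch below assumes a Unix-like system with `os.fork()`; the variable and function names are illustrative. A second thread updates the same global variable as the main thread, while a forked child process updates only its own copy.

```python
import os
import threading

counter = 0  # lives in this process's address space


def increment():
    global counter
    counter += 1


def run_in_thread():
    """Run increment() in a second thread of this process."""
    t = threading.Thread(target=increment)
    t.start()
    t.join()
    return counter          # the thread's update is visible here


def run_in_child_process():
    """Run increment() in a forked child process."""
    pid = os.fork()
    if pid == 0:            # child: works on its own copy of the address space
        increment()
        os._exit(0)
    os.waitpid(pid, 0)      # parent: wait for the child to finish
    return counter          # unchanged: the child's update stayed in the child


if __name__ == "__main__":
    print(run_in_thread())          # counter grew by one
    print(run_in_child_process())   # counter did not change
```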

Slide 16: Thread Implementation - Packages

- Threads are provided as a package, including operations to create, destroy, and synchronize them
- A package can be implemented as:
  - User-level threads
  - Kernel threads

Slide 18: User-Level Threads

- Thread management done by a user-level threads library
- Examples
  - POSIX Pthreads
  - Mach C-threads
  - Solaris threads
  - Java threads

Slide 19: User-Level Threads

- Thread library executed entirely in user mode
- Cheap to manage threads
  - Create: set up a stack
  - Destroy: free up memory
- Context switch requires few instructions
  - Just save CPU registers
  - Done based on program logic
- A blocking system call blocks all peer threads
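The properties listed above can be imitated with a toy thread package built on Python generators. This is an analogy rather than a real threads library: the class `UserThreads` and its methods are hypothetical, a generator object stands in for the saved stack and registers, and a `yield` stands in for a voluntary context switch.

```python
from collections import deque


class UserThreads:
    """A toy user-level thread package; the kernel never sees these threads."""

    def __init__(self):
        self.ready = deque()   # run queue kept by the library, not by the OS
        self.log = []

    def create(self, name, steps):
        # "Set up a stack": the generator object holds the thread's saved state.
        self.ready.append((name, self._body(name, steps)))

    def _body(self, name, steps):
        for i in range(steps):
            self.log.append(f"{name}:{i}")
            yield              # voluntary switch, decided by program logic

    def run(self):
        while self.ready:
            name, thread = self.ready.popleft()
            try:
                next(thread)                       # resume the saved context
                self.ready.append((name, thread))  # requeue at the back
            except StopIteration:
                pass           # "destroy": the reference is dropped and freed
        return self.log


if __name__ == "__main__":
    pkg = UserThreads()
    pkg.create("A", 2)
    pkg.create("B", 2)
    print(pkg.run())
```

Because the scheduler loop and every thread body run inside one kernel-scheduled thread, a blocking call made in any body (for example, a sleep or a blocking read) would stall the loop and with it all peer threads.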

Slide 20: Kernel-Level Threads

- The kernel is aware of and schedules threads
- A blocking system call will not block all peer threads
- Expensive to manage threads
- Expensive context switch
- Kernel intervention
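The non-blocking property can be observed with a short experiment. The sketch assumes standard CPython on a mainstream OS, where each `threading.Thread` is backed by a kernel-scheduled thread; the function name and the 0.3 second delay are arbitrary choices for illustration.

```python
import threading
import time


def demo_blocking_call():
    """One thread blocks in a sleep; its peer still gets to run."""
    events = []
    lock = threading.Lock()

    def blocker():
        time.sleep(0.3)                  # blocking call: only this thread waits
        with lock:
            events.append("blocker woke up")

    def worker():
        with lock:
            events.append("worker ran")  # runs while the blocker is asleep

    t1 = threading.Thread(target=blocker)
    t2 = threading.Thread(target=worker)
    t1.start()
    t2.start()
    t1.join()
    t2.join()
    return events


if __name__ == "__main__":
    print(demo_blocking_call())
```

The worker records its event while the blocker is still asleep, because the kernel simply schedules another thread of the same process.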

Slide 23: Linux Threads

- Linux refers to them as tasks rather than threads.
- Thread creation is done through the clone() system call.
- Unlike fork(), clone() allows a child task to share the address space of the parent task (process).

Slide 24: Pthreads

- A POSIX standard (IEEE 1003.1c) API for thread creation and synchronization.
- The API specifies the behavior of the thread library; the implementation is up to the developers of the library.
- POSIX Pthreads may be provided as either a user-level or kernel-level library, as an extension to the POSIX standard.
- Common in UNIX operating systems.
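The create, join, and mutex pattern that the Pthreads API standardizes can be sketched with Python's `threading` module, which CPython implements on top of Pthreads on POSIX systems. This is an analogy in a different language, not the Pthreads API itself: `Thread.start()`, `Thread.join()`, and `Lock` correspond only roughly to `pthread_create`, `pthread_join`, and a `pthread_mutex_t`. The function name is illustrative.

```python
import threading


def parallel_count(num_threads, increments):
    """Several threads increment one shared counter under a mutex."""
    total = 0
    mutex = threading.Lock()            # plays the role of a pthread mutex

    def body():
        nonlocal total
        for _ in range(increments):
            with mutex:                 # lock, update, unlock
                total += 1

    threads = [threading.Thread(target=body) for _ in range(num_threads)]
    for t in threads:
        t.start()                       # roughly pthread_create
    for t in threads:
        t.join()                        # roughly pthread_join
    return total


if __name__ == "__main__":
    print(parallel_count(4, 1000))
```

The mutex makes each read-modify-write of the shared counter atomic with respect to the other threads, so no increment is lost.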

Slide 27: LWP Advantages

- Cheap user-level thread management
- A blocking system call will not suspend the whole process
- LWPs are transparent to the application
- LWPs can be easily mapped to different CPUs