Nets compression and speedup (presentation)

Contents

- 1. Nets compression and speedup

- 2. Deep neural networks compression. Motivation Neural

- 3. Deep neural networks compression. Overview

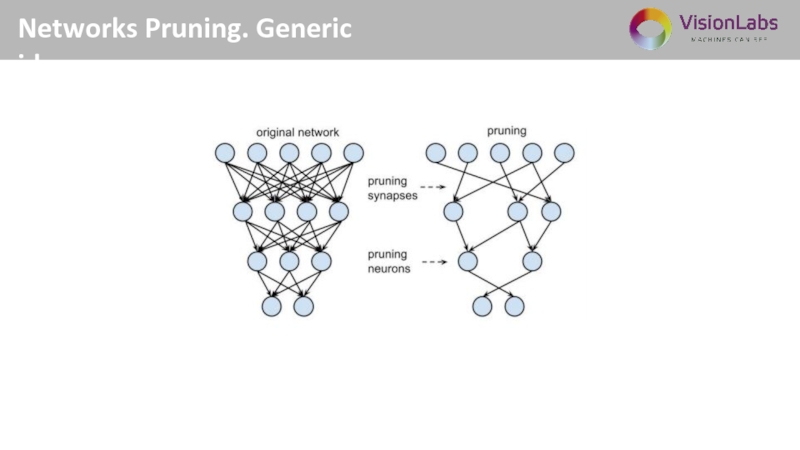

- 4. Networks Pruning

- 5. Networks Pruning. Generic idea 2

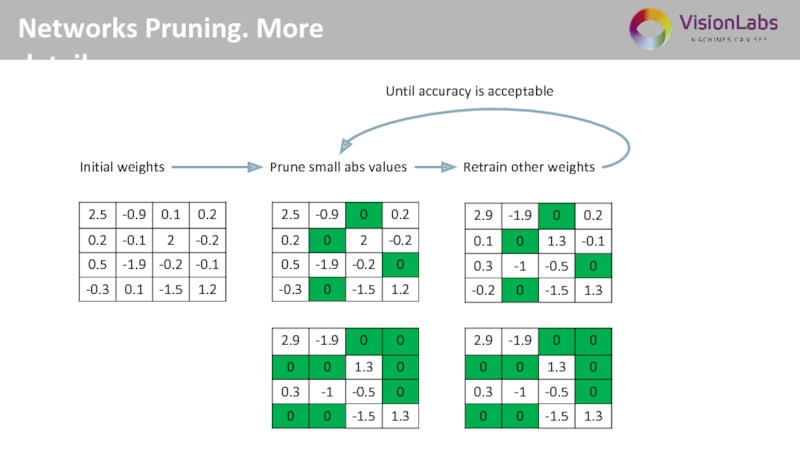

- 6. Networks Pruning. More details Initial weights

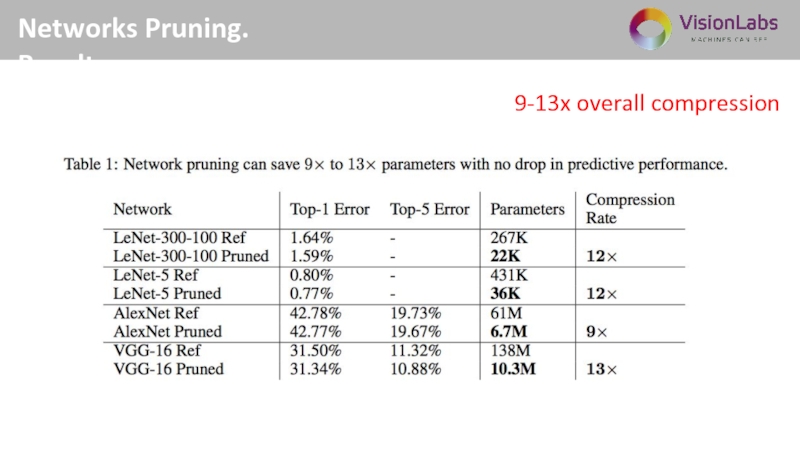

- 7. Networks Pruning. Results 2 9-13x overall compression

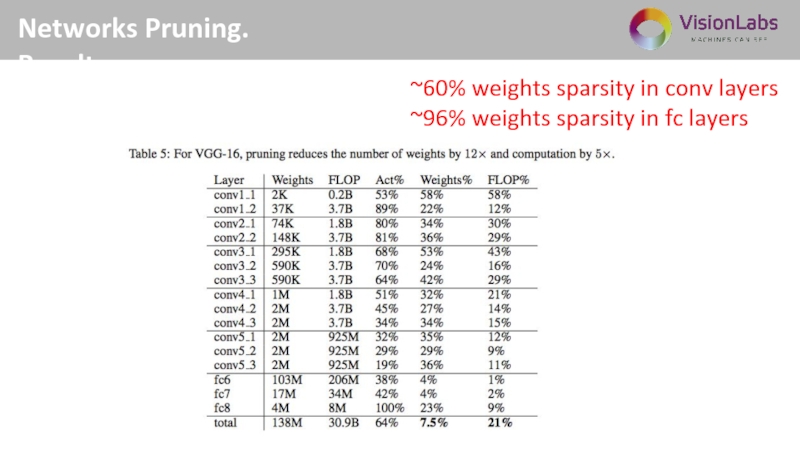

- 8. Networks Pruning. Results 2 ~60%

- 9. Deep Compression 2 ICLR 2016 Best Paper

- 10. Deep Compression. Overview 2 Algorithm:

- 11. Deep Compression. Weights pruning Already discussed

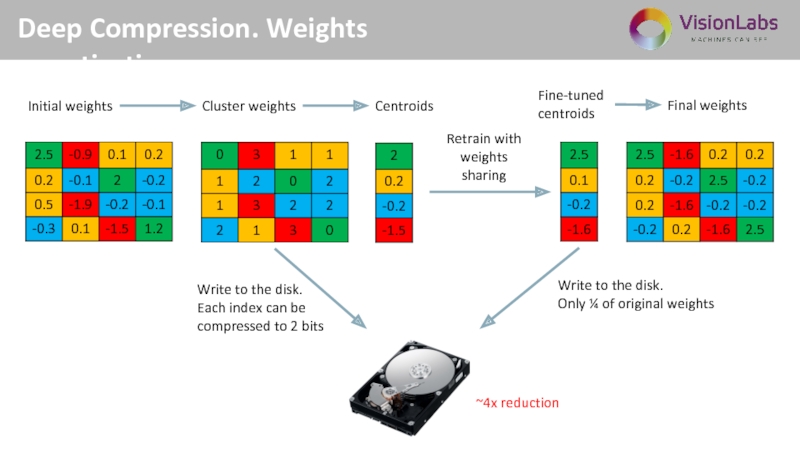

- 12. Deep Compression. Weights quantization Initial weights

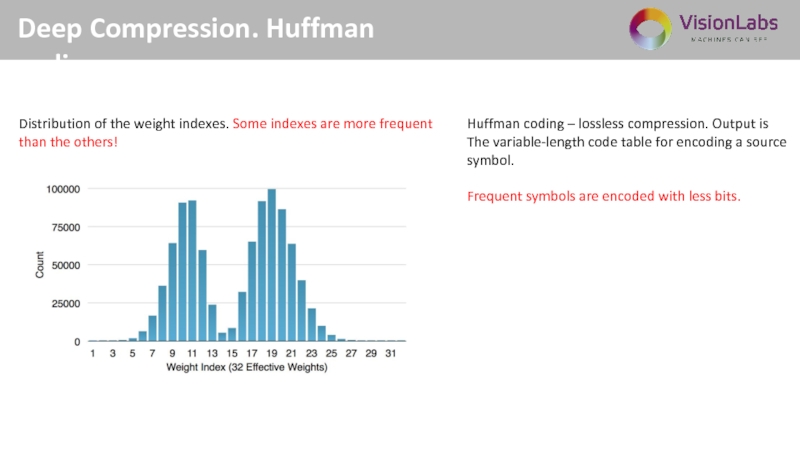

- 13. Deep Compression. Huffman coding 2

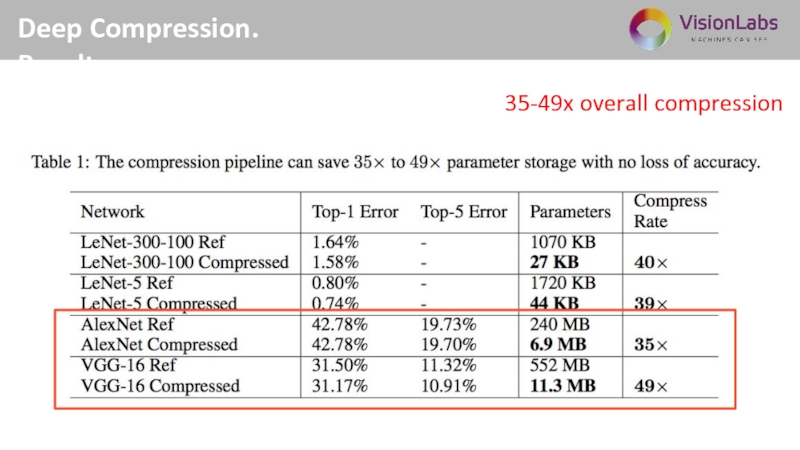

- 14. Deep Compression. Results 35-49x overall compression

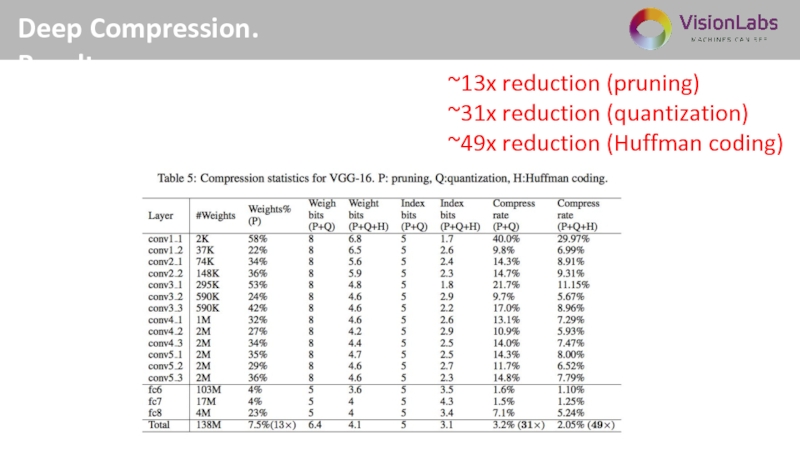

- 15. Deep Compression. Results ~13x reduction (pruning) ~31x reduction (quantization) ~49x reduction (Huffman coding)

- 16. Incremental Network Quantization

- 17. Incremental Network Quantization. Idea Idea:

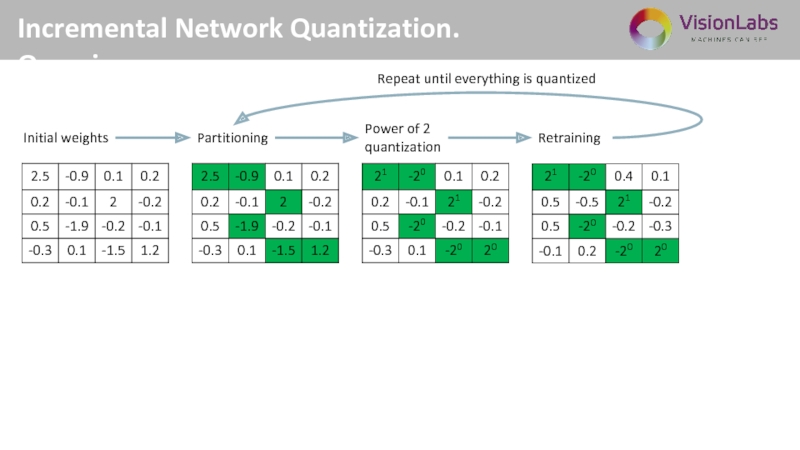

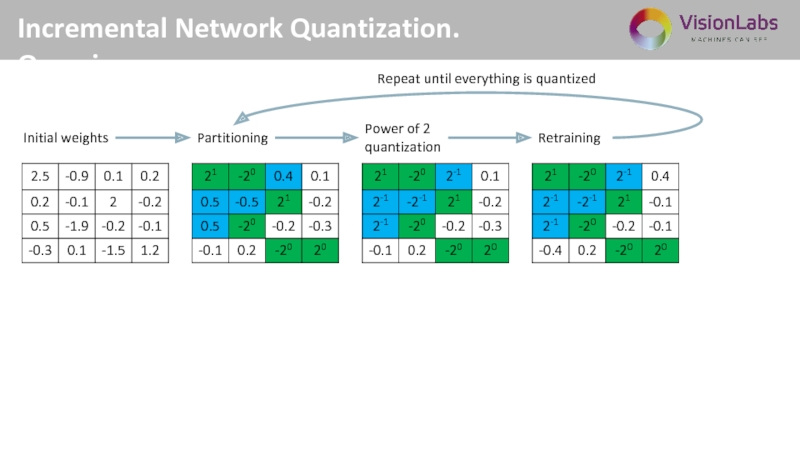

- 18. Incremental Network Quantization. Overview

- 19. Incremental Network Quantization. Overview Initial weights

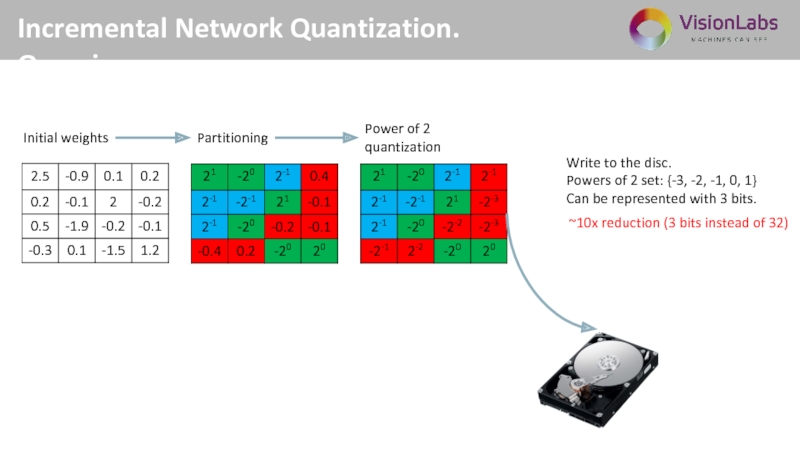

- 20. Incremental Network Quantization. Overview 2

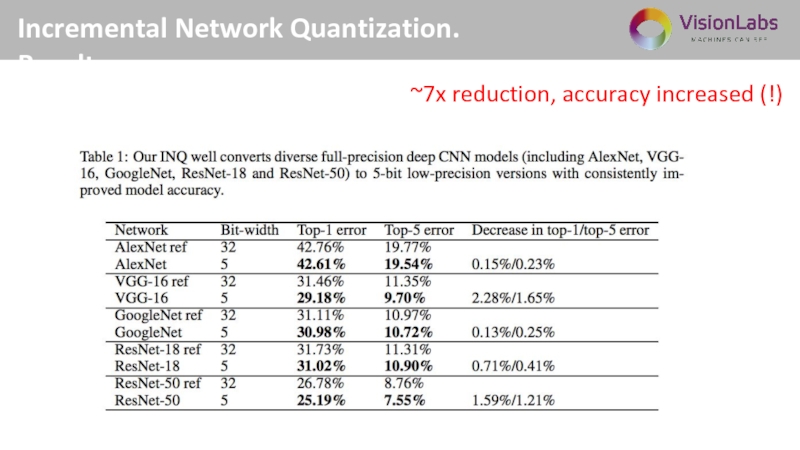

- 21. Incremental Network Quantization. Results ~7x reduction, accuracy increased (!)

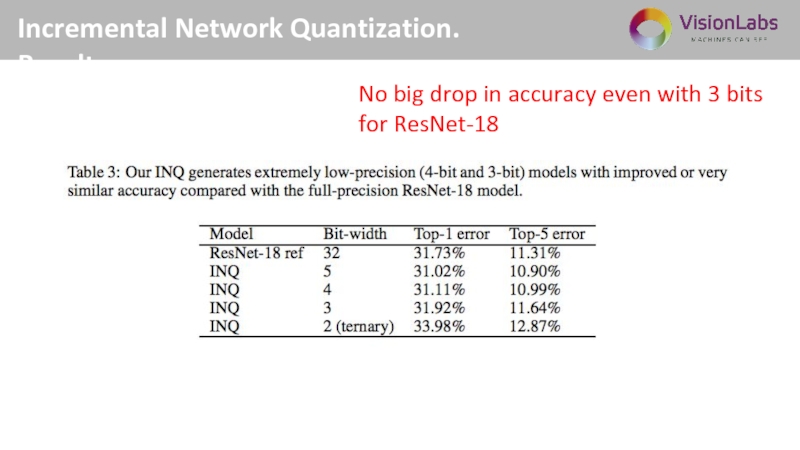

- 22. Incremental Network Quantization. Results 2

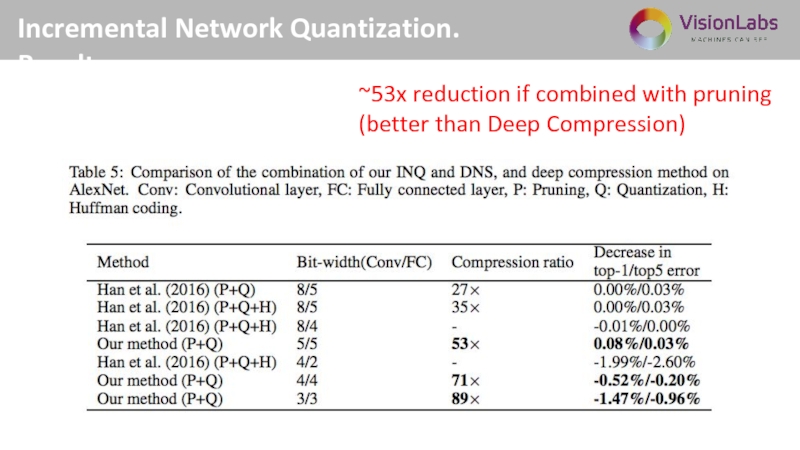

- 23. Incremental Network Quantization. Results ~53x reduction if combined with pruning (better than Deep Compression)

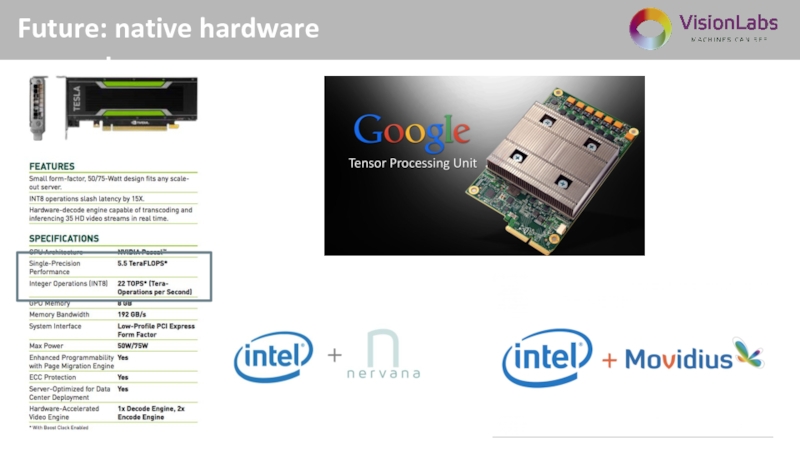

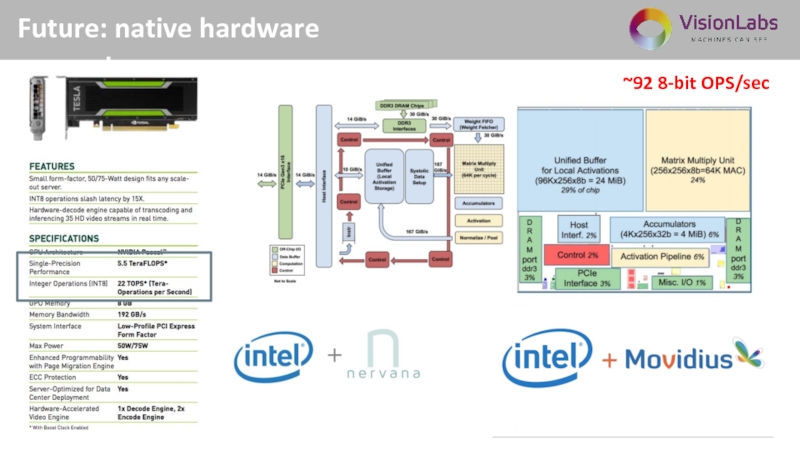

- 24. Future: native hardware support

- 25. Future: native hardware support ~92 8-bit OPS/sec

- 26. Stages of a typical platform deployment Alexander Chigorin Head of research projects VisionLabs a.chigorin@visionlabs.ru

Slide 1

Deep neural networks compression

Alexander Chigorin

Head of research projects

VisionLabs

a.chigorin@visionlabs.ru

Slide 2

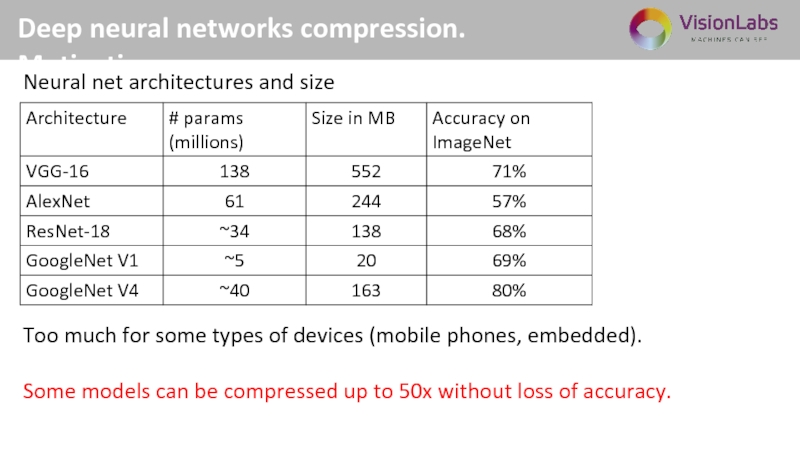

Deep neural networks compression. Motivation

Neural net architectures and size

Too much for

Some models can be compressed up to 50x without loss of accuracy.

Slide 3

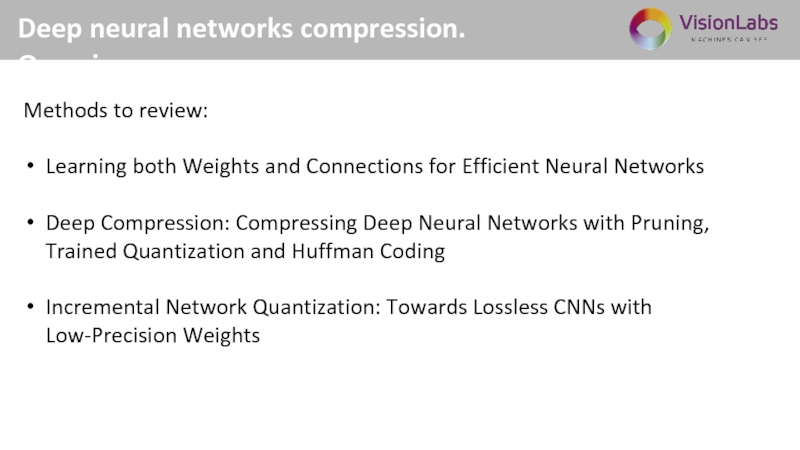

Deep neural networks compression. Overview

Methods to review:

Learning both Weights and Connections

Deep Compression: Compressing Deep Neural Networks with Pruning, Trained Quantization and Huffman Coding

Incremental Network Quantization: Towards Lossless CNNs with Low-Precision Weights

Slide 6

Networks Pruning. More details

Initial weights

Prune small abs values

Retrain other weights

Until accuracy
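The prune-then-retrain loop on this slide can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: the function name `prune_by_magnitude` and the `sparsity` parameter are hypothetical, weights are a flat Python list, and the retraining step is left out. The returned mask is what a training loop would use to keep pruned weights at zero while the remaining weights are retrained.

```python
def prune_by_magnitude(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest |w|.

    Returns the pruned weights and a 0/1 mask (1 = weight kept).
    Hypothetical helper for illustration only.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:n_prune])
    mask = [0 if i in pruned else 1 for i in range(len(weights))]
    pruned_weights = [w if m else 0.0 for w, m in zip(weights, mask)]
    return pruned_weights, mask


weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.1]
pruned_weights, mask = prune_by_magnitude(weights, sparsity=0.5)
print(pruned_weights)  # the three smallest-magnitude weights are now 0.0
```

In an iterative scheme, this call would alternate with retraining, raising `sparsity` step by step for as long as accuracy holds.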

Slide 8

Networks Pruning. Results

~60% weights sparsity in conv layers

~96% weights sparsity

Slide 10

Deep Compression. Overview

Algorithm:

Iterative weights pruning

Weights quantization

Huffman encoding

Slide 12

Deep Compression. Weights quantization

Initial weights

Cluster weights

Centroids

Fine-tuned centroids

Final weights

Retrain with weight sharing

Write to the

Each index can be compressed to 2 bits

Write to the disk.

Only ¼ of original weights

~4x reduction
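The clustering step can be illustrated with a small 1-D k-means over a flat list of weights. This is a sketch under assumptions: the function name `kmeans_1d`, the evenly spaced (linear) centroid initialisation, and the sample weights are illustrative choices, and the gradient-based fine-tuning of centroids shown on the slide is omitted. With 4 clusters each weight is replaced by a 2-bit index into a small table of shared centroids.

```python
import math


def kmeans_1d(values, k, iters=20):
    """Cluster scalar weights into k shared values (requires k >= 2).

    Returns (centroids, indices), where indices[i] is the cluster of values[i].
    Hypothetical helper for illustration only.
    """
    lo, hi = min(values), max(values)
    # Assumed initialisation: centroids evenly spaced over [lo, hi].
    centroids = [lo + (hi - lo) * i / (k - 1) for i in range(k)]

    def assign():
        return [min(range(k), key=lambda c: abs(v - centroids[c])) for v in values]

    for _ in range(iters):
        indices = assign()
        for c in range(k):
            members = [v for v, idx in zip(values, indices) if idx == c]
            if members:  # leave an empty cluster's centroid unchanged
                centroids[c] = sum(members) / len(members)
    return centroids, assign()


weights = [2.09, -0.98, 1.48, 0.09, 0.05, -0.14, -1.08, 2.12,
           -0.91, 1.92, 0.0, -1.03, 1.87, 0.0, 1.53, 1.49]
centroids, indices = kmeans_1d(weights, k=4)

# Each weight is stored as an index; only the k centroids are stored as floats.
bits_per_index = math.ceil(math.log2(len(centroids)))
shared_weights = [centroids[i] for i in indices]
print(bits_per_index, [round(c, 2) for c in centroids])
```

Only `indices` and `centroids` need to be written to disk; `shared_weights` is rebuilt from them at load time.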

Slide 13

Deep Compression. Huffman coding

Huffman coding – lossless compression. Output is

The

symbol.

Frequent symbols are encoded with fewer bits.

Distribution of the weight indexes. Some indexes are more frequent than the others!
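A compact way to see the effect is to build a Huffman code over a skewed stream of cluster indices and compare it with a fixed 2-bit encoding. The sketch below uses only the Python standard library; the function name `huffman_code` and the example index distribution are illustrative assumptions, not data from the slides.

```python
import heapq
from collections import Counter


def huffman_code(symbols):
    """Build a prefix-free code {symbol: bitstring} from symbol frequencies."""
    freq = Counter(symbols)
    if len(freq) == 1:  # degenerate case: a single distinct symbol
        return {next(iter(freq)): "0"}
    # Heap entries: (frequency, unique tie-breaker, {symbol: code so far}).
    heap = [(f, i, {s: ""}) for i, (s, f) in enumerate(freq.items())]
    heapq.heapify(heap)
    tie = len(heap)
    while len(heap) > 1:
        f1, _, left = heapq.heappop(heap)
        f2, _, right = heapq.heappop(heap)
        # Merge the two rarest subtrees, prefixing their codes with 0 and 1.
        merged = {s: "0" + c for s, c in left.items()}
        merged.update({s: "1" + c for s, c in right.items()})
        heapq.heappush(heap, (f1 + f2, tie, merged))
        tie += 1
    return heap[0][2]


# Hypothetical skewed distribution of 2-bit weight indices.
indices = [0] * 8 + [1] * 4 + [2] * 2 + [3] * 2
codes = huffman_code(indices)
huffman_bits = sum(len(codes[s]) for s in indices)
fixed_bits = 2 * len(indices)
print(codes, huffman_bits, fixed_bits)
```

For this distribution the most frequent index gets a 1-bit code and the two rarest get 3-bit codes, so the stream takes fewer bits in total than the fixed-width encoding.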

Slide 15

Deep Compression. Results

~13x reduction (pruning)

~31x reduction (quantization)

~49x reduction (Huffman coding)

Slide 17

Incremental Network Quantization. Idea

Idea:

let’s quantize weights incrementally (as we do during

let’s quantize to the power of 2

Slide 18

Incremental Network Quantization. Overview

Initial weights

Partitioning

Power of 2 quantization

Retraining

Repeat until everything

Slide 19

Incremental Network Quantization. Overview

Initial weights

Partitioning

Power of 2 quantization

Retraining

Repeat until everything is
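The partition, quantize, retrain, repeat cycle can be sketched as a loop that freezes a growing portion of the weights. Everything specific here is an assumption made for illustration: the names `nearest_pow2` and `incremental_quantize`, the cumulative `portions` schedule, quantizing larger-magnitude weights first, including zero among the quantization levels, and the `retrain` callback, which stands in for real training of the weights that are not yet frozen.

```python
import math


def nearest_pow2(w, exponents=(-3, -2, -1, 0, 1)):
    """Round w to the nearest value among 0 and +/- 2**e (assumed rule)."""
    levels = [0.0] + [s * 2.0 ** e for e in exponents for s in (1, -1)]
    return min(levels, key=lambda q: abs(w - q))


def incremental_quantize(weights, portions=(0.5, 0.75, 1.0), retrain=None):
    """Quantize a growing share of the weights, retraining the rest in between.

    `retrain(weights, frozen)` is a hypothetical hook that must return the
    updated weights and leave frozen entries untouched.
    """
    weights = list(weights)
    frozen = [False] * len(weights)
    # Assumed partitioning: larger magnitudes are quantized first,
    # using the ordering of the initial weights.
    order = sorted(range(len(weights)), key=lambda i: -abs(weights[i]))
    for p in portions:
        n_target = math.ceil(p * len(weights))
        for i in order[:n_target]:
            if not frozen[i]:
                weights[i] = nearest_pow2(weights[i])
                frozen[i] = True
        if retrain is not None:
            weights = retrain(weights, frozen)
    return weights, frozen


steps = []


def fake_retrain(w, frozen):
    # Placeholder for training: only records how many weights are frozen.
    steps.append(sum(frozen))
    return w


quantized, frozen = incremental_quantize([0.9, -0.3, 0.04, 1.6], retrain=fake_retrain)
print(quantized, steps)
```

The loop ends once the last portion reaches 1.0, at which point every weight is either zero or a signed power of two.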

Slide 20

Incremental Network Quantization. Overview

Initial weights

Partitioning

Power of 2 quantization

Write to the

Powers of 2 set: {-3, -2, -1, 0, 1}

Can be represented with 3 bits.

~10x reduction (3 bits instead of 32)
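The bit count follows from the size of the exponent set: five exponents need ceil(log2 5) = 3 bits, and 32 / 3 is roughly 10.7. The sketch below checks that arithmetic and shows one way to map a weight to an exponent index. The names `encode` and `decode` and the nearest-value rounding are assumptions; how the sign and exact zeros are stored is not stated on the slide, so they are kept outside the 3-bit count here.

```python
import math

EXPONENTS = [-3, -2, -1, 0, 1]  # exponent set from the slide


def encode(w):
    """Return (sign, exponent_index) for the power of two nearest to |w|.

    Hypothetical helper; zero weights are not handled specially here.
    """
    sign = -1 if w < 0 else 1
    idx = min(range(len(EXPONENTS)),
              key=lambda i: abs(abs(w) - 2.0 ** EXPONENTS[i]))
    return sign, idx


def decode(sign, idx):
    """Rebuild the quantized weight from its sign and exponent index."""
    return sign * 2.0 ** EXPONENTS[idx]


bits_per_exponent = math.ceil(math.log2(len(EXPONENTS)))
reduction = 32 / bits_per_exponent

for w in (0.3, -0.9, 1.6):
    print(w, "->", decode(*encode(w)))
print(bits_per_exponent, round(reduction, 1))
```

Because every stored value is a power of two, multiplying by a weight can in principle be done with a bit shift rather than a floating-point multiply.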

Slide 22

Incremental Network Quantization. Results

No big drop in accuracy even with

for ResNet-18