Fuzzing everything in 2014 (presentation)

Contents

- 1. Fuzzing everything in 2014

- 2. About me Hacking binary since 15 Left

- 3. A BIT AWAY FROM A 0-DAY… Section 1: hacker’s

- 4. Microsoft Word 2007/2010 E

- 5. THE IDEAL FUZZER Section 2: engineer’s

- 6. Problems with fuzzers Too specialized. E.g. fuzz

- 7. What I want (from a fuzzer) Omnivore.

- 8. What I want, cont’d Autonomous. Can leave

- 9. Key design decisions Network client-server architecture Build

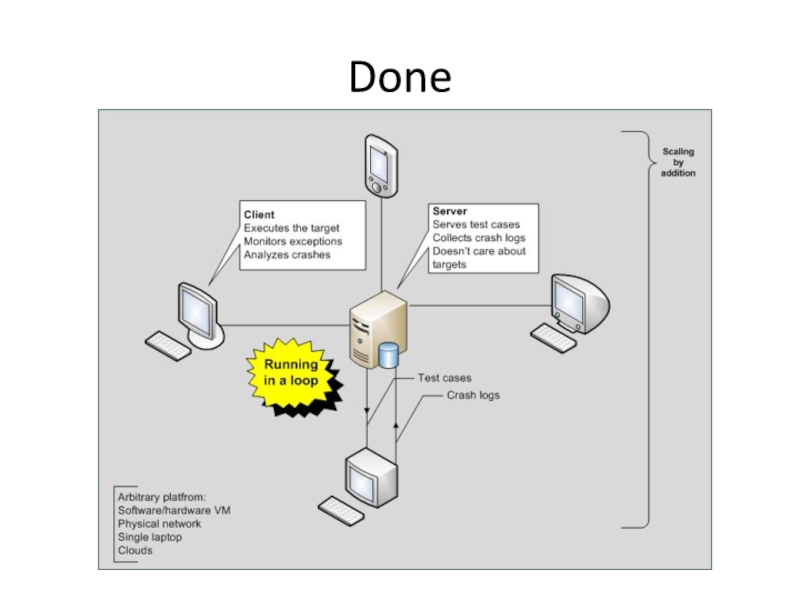

- 10. Done

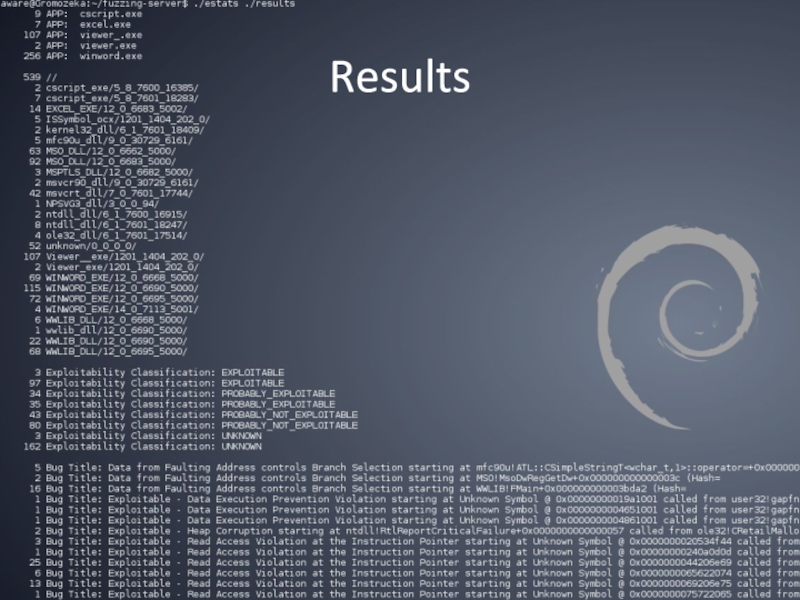

- 11. Results

- 12. THE MAGIC Section 3: director’s

- 13. Fuzzing in 2014 “Shellcoder’s Handbook”: 10 years

- 14. The beginner’s delusion “Success in fuzzing is

- 15. Thinking One only needs millions of test

- 16. Problem No algorithm to discover “fresh” code

- 17. Where is the “new” code? Code

- 18. Unobvious Examples CVE-2013-3906: TIFF 0’day Ogl.dll=gdiplus.dll alternative

- 19. Presumably Effortful Examples CVE-2013-1296: MS RDP

- 20. Presumably Constrained Example Standard ActiveX in

- 21. RESULTS Section 4: sponsor’s ☺

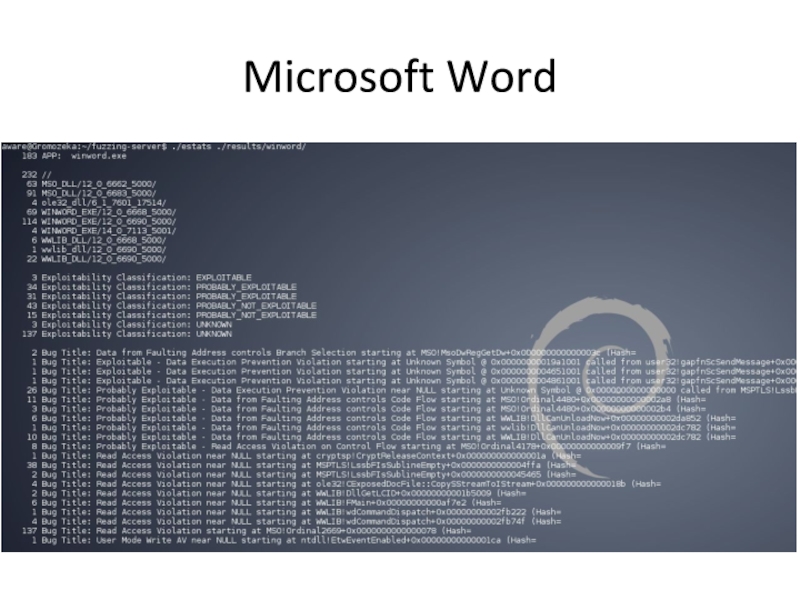

- 22. Microsoft Word

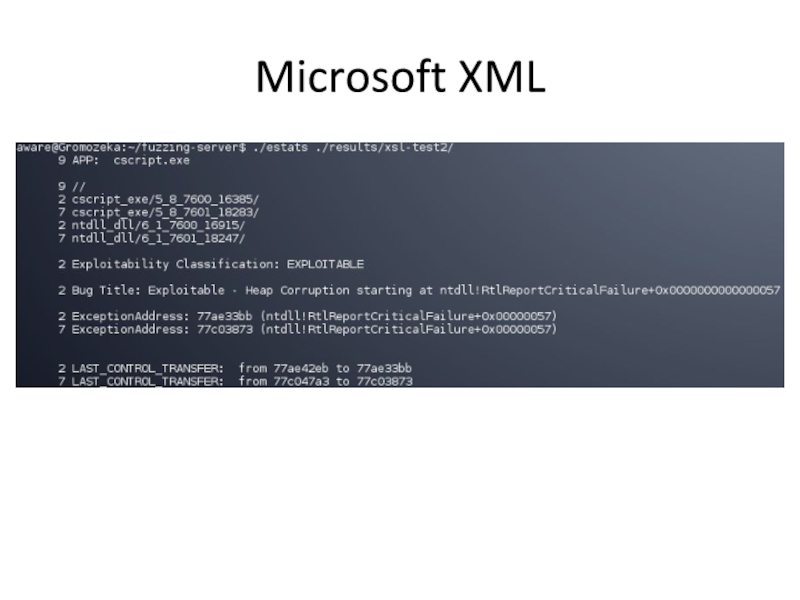

- 23. Microsoft XML

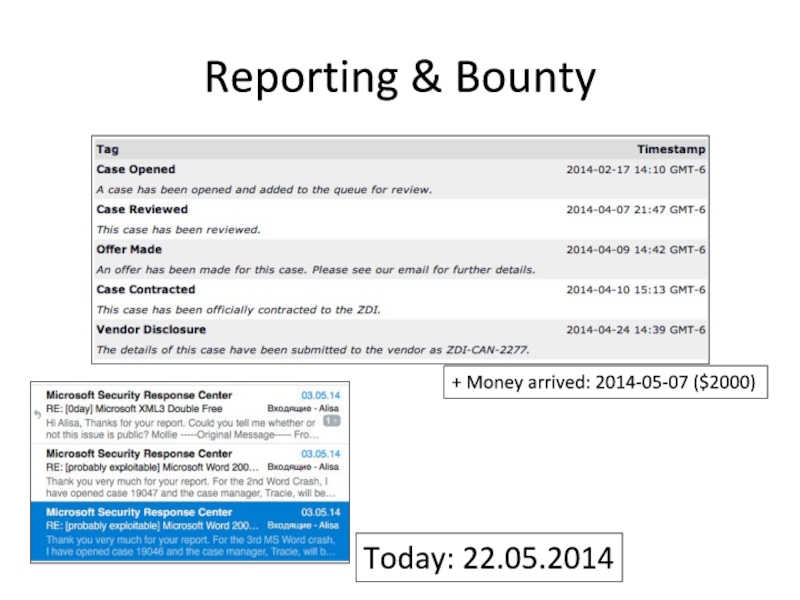

- 24. Reporting & Bounty Today: 22.05.2014 + Money arrived: 2014-05-07 ($2000)

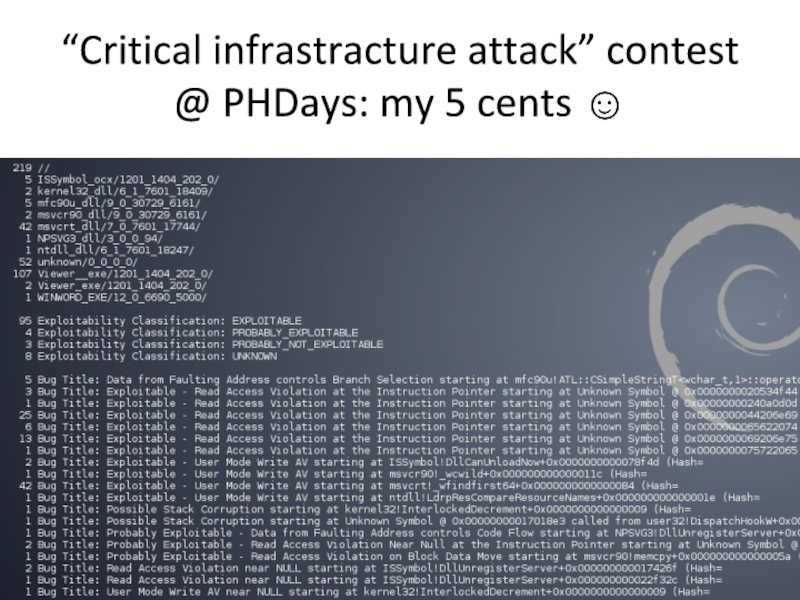

- 25. “Critical infrastructure attack” contest @ PHDays: my 5 cents ☺

- 26. Lessons Learnt Research! Primary target: code bases

- 27. Thank you!

Slide 2: About me

Hacking binary since 15

Left my first=last employer in 2004, independent ever since

Done: binary reversing to malware analysis to cyber investigation, pentesting to blackbox auditing to vulnerability discovery to exploitation…

Founded: Esage Lab => Neuronspace, Malwas, TZOR

Slide 6: Problems with fuzzers

1. Too specialized.

E.g. fuzz only browsers, or only files

Not suitable for fuzzing everything by design

2. Enforce unnecessary constraints.

E.g. glue mutation, automation, and crash monitoring together

Kills flexibility => not suitable for fuzzing everything

3. Steep learning curve.

E.g. templates & configs

Is it worth learning a system that is constrained anyway?

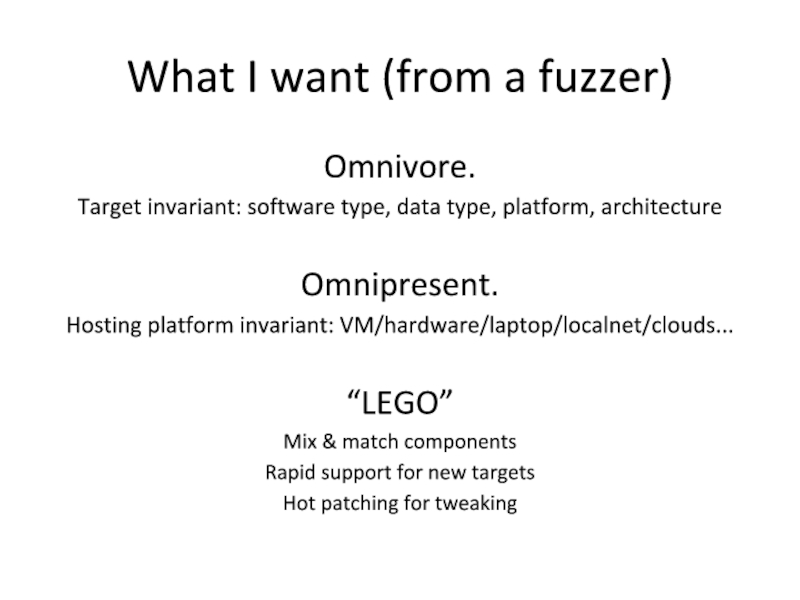

Slide 7: What I want (from a fuzzer)

Omnivore.

Target invariant: software type, data type, platform, architecture

Omnipresent.

Hosting platform invariant: VM/hardware/laptop/localnet/clouds...

“LEGO”

Mix & match components

Rapid support for new targets

Hot patching for tweaking

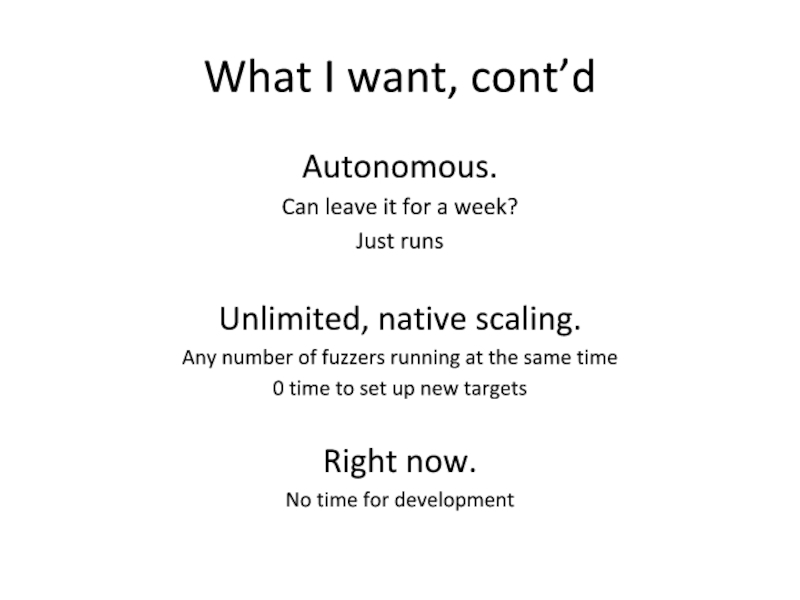

Slide 8: What I want, cont’d

Autonomous.

Can leave it for a week?

Just runs

Unlimited, native scaling.

Any number of fuzzers running at the same time

0 time to set up new targets

Right now.

No time for development

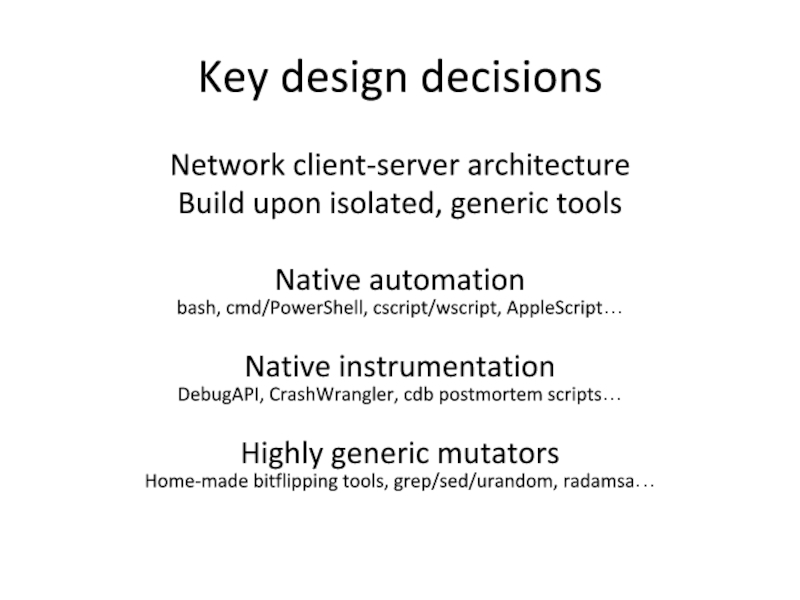

Slide 9: Key design decisions

Network client-server architecture

Build upon isolated, generic tools

Native automation

bash, cmd/PowerShell, cscript/wscript, AppleScript…

Native instrumentation

DebugAPI, CrashWrangler, cdb postmortem scripts…

Highly generic mutators

Home-made bitflipping tools, grep/sed/urandom, radamsa…
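To make the "home-made bitflipping tools" item concrete, here is a minimal sketch of what such a generic mutator might look like. It is an illustration only, not the author's tool: the function name `flip_bits` and its parameters are assumptions. It knows nothing about the target or the data format, which is what keeps it reusable across targets.

```python
import random


def flip_bits(data: bytes, n_flips: int = 1, seed=None) -> bytes:
    """Return a copy of `data` with `n_flips` randomly chosen bits inverted.

    Hypothetical helper for illustration. Positions are drawn with
    replacement, so with n_flips > 1 the same bit may be flipped twice.
    """
    rng = random.Random(seed)  # a fixed seed makes a test case reproducible
    buf = bytearray(data)
    if not buf:
        return bytes(buf)  # nothing to mutate in an empty input
    for _ in range(n_flips):
        pos = rng.randrange(len(buf) * 8)  # pick a bit index in the whole buffer
        buf[pos // 8] ^= 1 << (pos % 8)    # invert that single bit
    return bytes(buf)


if __name__ == "__main__":
    sample = b"GIF89a" + bytes(10)
    print(flip_bits(sample, n_flips=3, seed=42).hex())
```

Because the mutator only maps bytes to bytes, it can be combined with any automation script and any crash monitor, in line with the "isolated, generic tools" decision.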

Slide 13: Fuzzing in 2014

“Shellcoder’s Handbook”: 10 years ago

“Fuzzing: Brute Force Vulnerability Discovery”: 7 years ago

Dozens of publications, hundreds of tools, thousands of vulns found (=> code audited)

Driven by market and the competition

Slide 14: The beginner’s delusion

“Success in fuzzing is defined by speed & scale”

Not exactly

ClusterFuzz is still beaten by standalone researchers

My results: ~1 night per fuzzer

Slide 15: Thinking

One only needs millions of test cases if the majority of those test cases are bad

Rejected by the validator or not reaching or not triggering vulnerable code paths

Cornerstone: bug-rich branches of code
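The "rejected by the validator" point can be illustrated with a toy experiment. The sketch below assumes a made-up file format (a 4-byte CRC32 followed by the payload); the format and all function names are hypothetical and stand in for any real integrity check. Blind single-bit flips never pass the check, while recomputing the checksum after mutation lets every test case through to the code behind the validator.

```python
import random
import struct
import zlib


def pack(payload: bytes) -> bytes:
    """Toy format (assumption): little-endian CRC32 header, then payload."""
    return struct.pack("<I", zlib.crc32(payload)) + payload


def validate(blob: bytes) -> bool:
    """Stand-in for the target's validator: accept only a matching CRC32."""
    if len(blob) < 4:
        return False
    (crc,) = struct.unpack("<I", blob[:4])
    return crc == zlib.crc32(blob[4:])


def flip_one_bit(blob: bytes, rng: random.Random) -> bytes:
    buf = bytearray(blob)
    pos = rng.randrange(len(buf) * 8)
    buf[pos // 8] ^= 1 << (pos % 8)
    return bytes(buf)


def count_accepted(seed_blob: bytes, trials: int, fix_checksum: bool,
                   rng_seed: int = 0) -> int:
    """Count how many mutated test cases get past the validator."""
    rng = random.Random(rng_seed)
    accepted = 0
    for _ in range(trials):
        mutated = flip_one_bit(seed_blob, rng)
        if fix_checksum:
            # Re-pack the (possibly mutated) payload with a fresh checksum.
            mutated = pack(mutated[4:])
        if validate(mutated):
            accepted += 1
    return accepted


if __name__ == "__main__":
    seed_blob = pack(b"hello fuzzing")
    print("blind:", count_accepted(seed_blob, 200, fix_checksum=False))
    print("fixed:", count_accepted(seed_blob, 200, fix_checksum=True))
```

In this toy setup the blind fuzzer wastes every one of its test cases, which is the sense in which raw speed and scale only compensate for bad inputs.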

Slide 16: Problem

No algorithm to discover “fresh” code paths

Code coverage can only measure the already reached paths

Evolutionary input generation is tiny (think Word with embeddings)

Slide 17: Where is the “new” code?

Code unobviously triggered or reached

Presumably effortful input generation

Presumably constrained exploit

Slide 18: Unobvious Examples

CVE-2013-3906: TIFF 0’day

Ogl.dll=gdiplus.dll alternative only in Office 2007

CVE-2014-0315: Insecure Library Loading with .cmd and .bat

CVE-2013-1324: Microsoft Word WPD stack based buffer overflow

Slide 19: Presumably Effortful Examples

CVE-2013-1296: MS RDP ActiveX Use-after-Free

No public ActiveX tools can target UaFs

Strict syntax-based and/or layered formats

My experience: the better the generator, and the deeper the targeted data layer, the more bugs found

Microsoft DCOM/RPC

Did you know one can send a DCOM/RPC request to the port mapper (135) to enable RDP?

Slide 20: Presumably Constrained Example

Standard ActiveX in Windows

Requires user interaction in IE

But IE is not the only widespread software capable of loading ActiveX…

Slide 26: Lessons Learnt

Research! Primary target: code bases

Not data formats or data input interfaces or fuzzing automation technology

Yes: Ancient code, hidden/unobvious functionality etc.

Bet on complex data formats

For complex data, code paths exist which are not reachable automatically, which means probably never audited code base and zero competition.

Craft complex fuzzing seeds manually

The rule of the “minimal size sample”, as stated in the book “Fuzzing: Brute Force Vulnerability Discovery”, is obsolete in 2014.

Remove 1-2 data format layers before injecting malformed data

Deep parsers are less audited (researchers are lazy?)

Deep parsers tend to contain more bugs (programmers are lazy?)

Estimate potency of a new vector by dumbest fuzzing prior to investing in smart fuzzing

Criterion: bugs crowd in the direction of the “less audited” code base

Tweak a lot to get a “feeling” for the particular target

Keep the fuzzing setting dirty

Fuzzing is dirty by design

Prettifying it into a well-designed system kills the flexibility necessary for tweaking & rapid prototyping.

Research, again
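The lesson "remove 1-2 data format layers before injecting malformed data" can be sketched as follows. This is a minimal example under a stated assumption: the outer layer is plain zlib compression, chosen only because it is easy to demonstrate. The function name `mutate_inner` is hypothetical; a real target would have its own container, encoding, or encryption layer to peel off.

```python
import random
import zlib


def mutate_inner(container: bytes, seed=None) -> bytes:
    """Peel off the outer layer, corrupt one inner byte, re-wrap.

    Assumption for illustration: the outer layer is zlib. Mutating the
    compressed bytes directly would mostly produce streams that fail to
    decompress, so the deeper parser would never see the malformed data.
    """
    inner = bytearray(zlib.decompress(container))  # remove the outer layer
    rng = random.Random(seed)
    if inner:
        pos = rng.randrange(len(inner))
        inner[pos] ^= 0xFF                         # invert one inner byte
    return zlib.compress(bytes(inner))             # restore a valid outer layer


if __name__ == "__main__":
    original = zlib.compress(b"<doc><field>value</field></doc>")
    fuzzed = mutate_inner(original, seed=1)
    print(zlib.decompress(fuzzed))
```

The outer layer stays well-formed, so the malformed bytes reach the deeper parser, which the slide argues is both less audited and more bug-prone.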
