
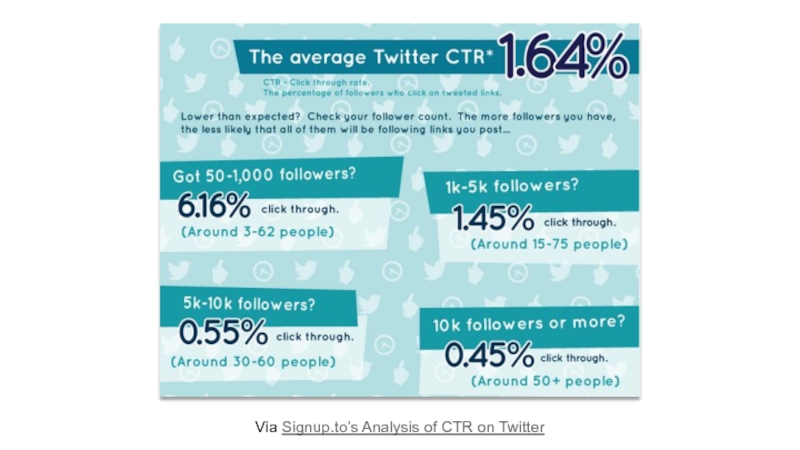

Why Great Marketers Must Be Great Skeptics (presentation)

Contents

- 1. Why Great Marketers Must Be Great Skeptics

- 2. This Presentation Is Online Here: bit.ly/mozskeptics

- 3. Great Skepticism Defining

- 4. I have some depressing news…

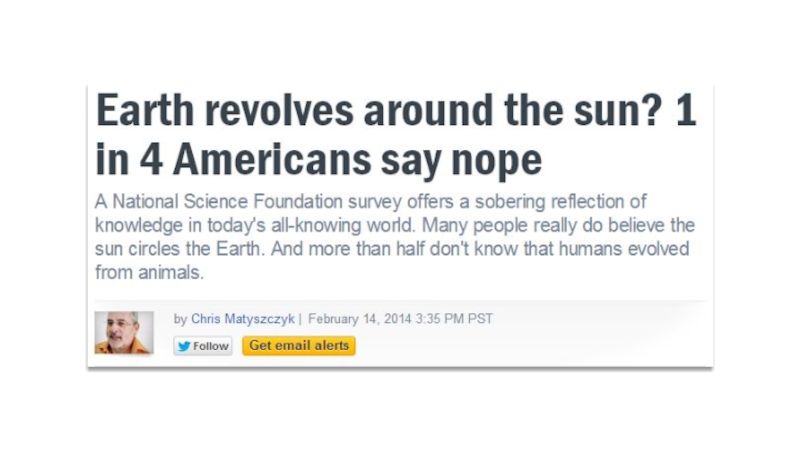

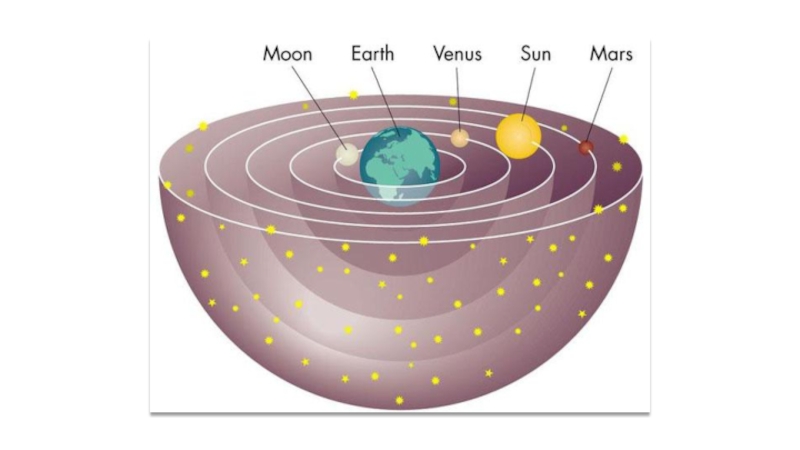

- 7. Does anyone in this room believe that the Earth doesn’t revolve around the Sun?

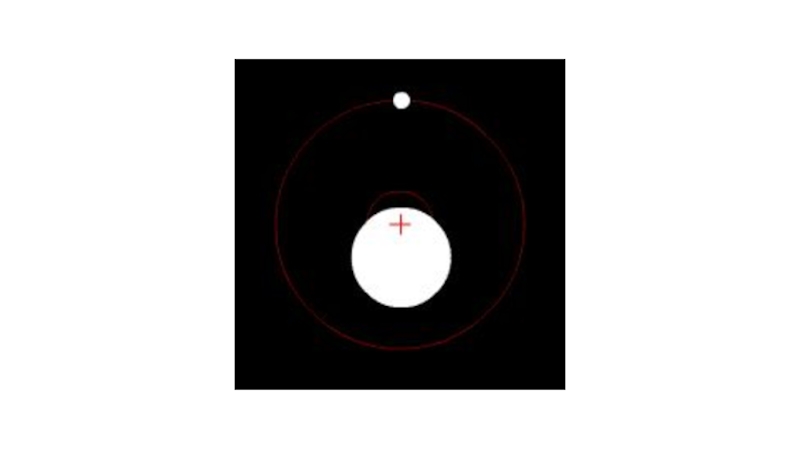

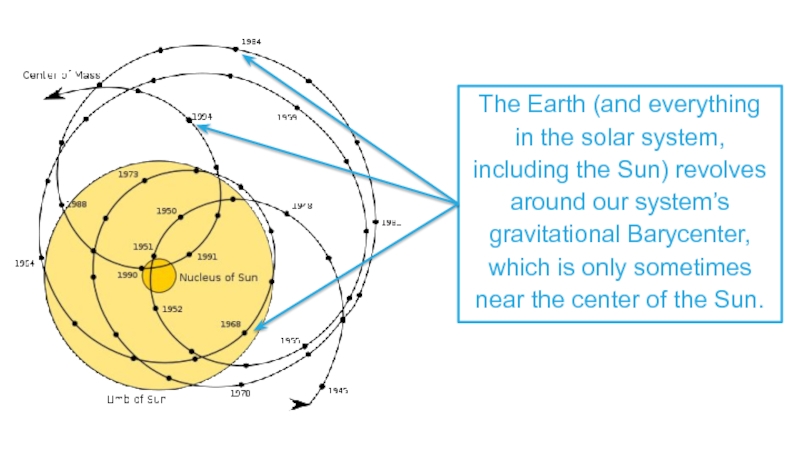

- 9. The Earth (and everything in the solar

- 10. Let’s try a more marketing-centric example...

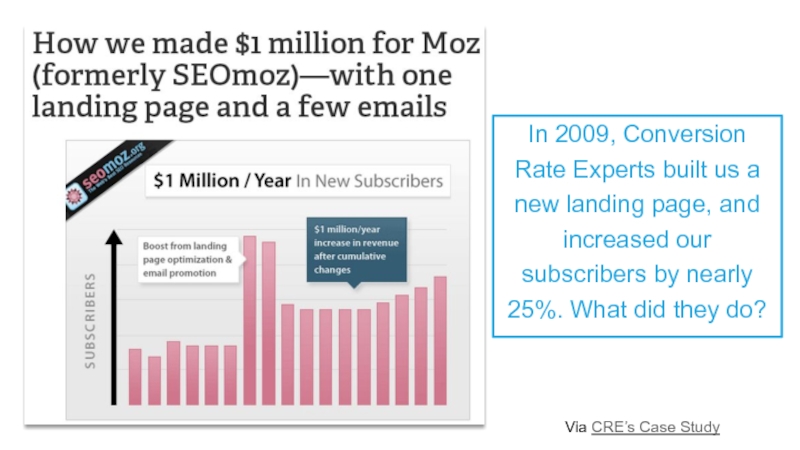

- 11. In 2009, Conversion Rate Experts built us

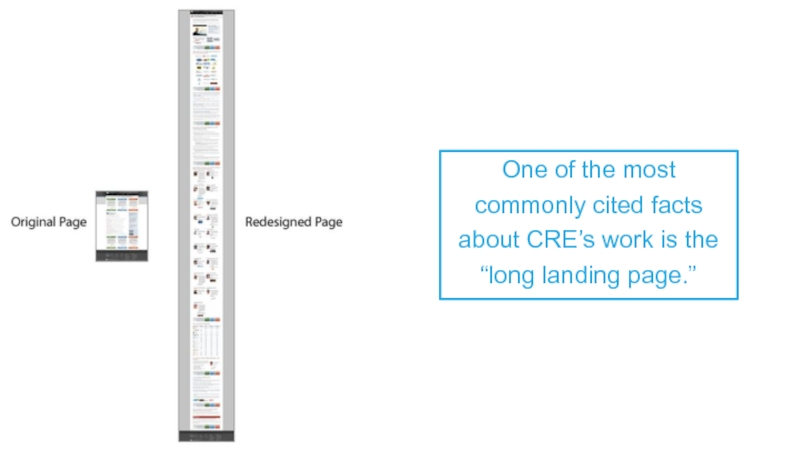

- 12. One of the most commonly cited facts about CRE’s work is the “long landing page.”

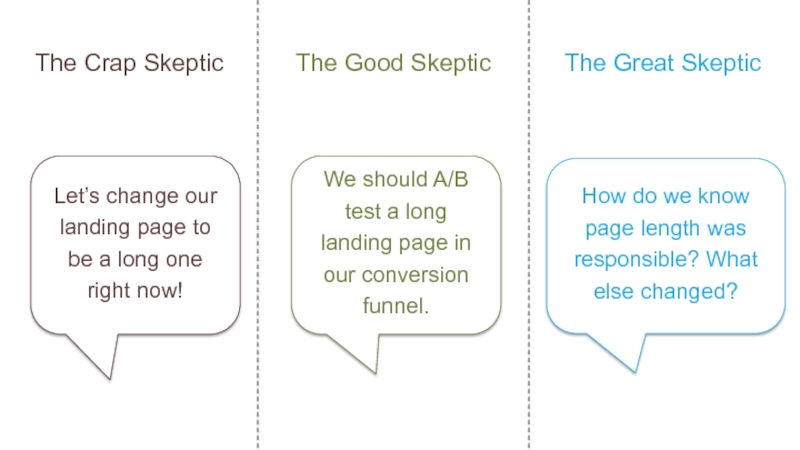

- 13. The Crap Skeptic The Good Skeptic The

- 14. The Crap Skeptic The Good Skeptic The

- 15. In fact, we’ve changed our landing pages

- 16. What separates the crap, good, & great?

- 17. Assumes one belief-reinforcing data point is

- 18. Doesn’t make assumptions about why a

- 19. Seeks to discover the reasons underlying

- 20. Will more conversion tests lead to better results? Testing

- 21. Obviously the more tests we run,

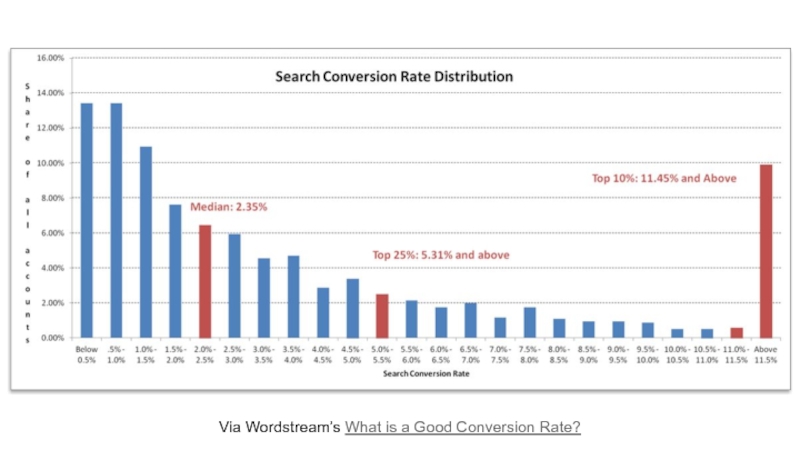

- 22. Via Wordstream’s What is a Good Conversion Rate?

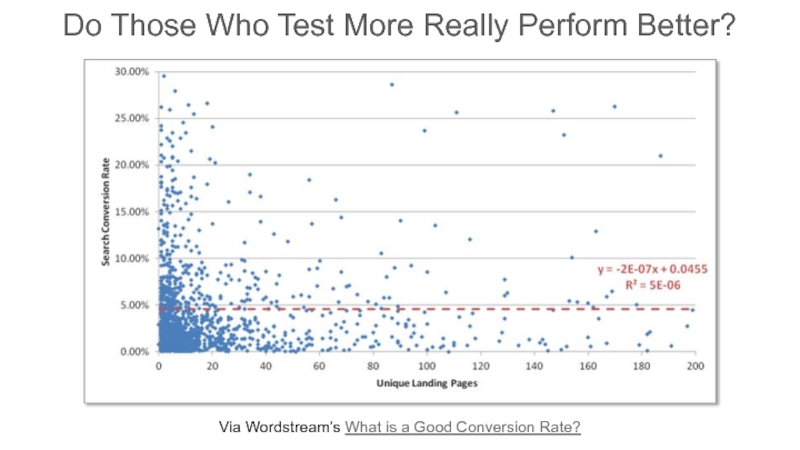

- 23. Via Wordstream’s What is a Good Conversion

- 24. Hmm… There’s no correlation between those

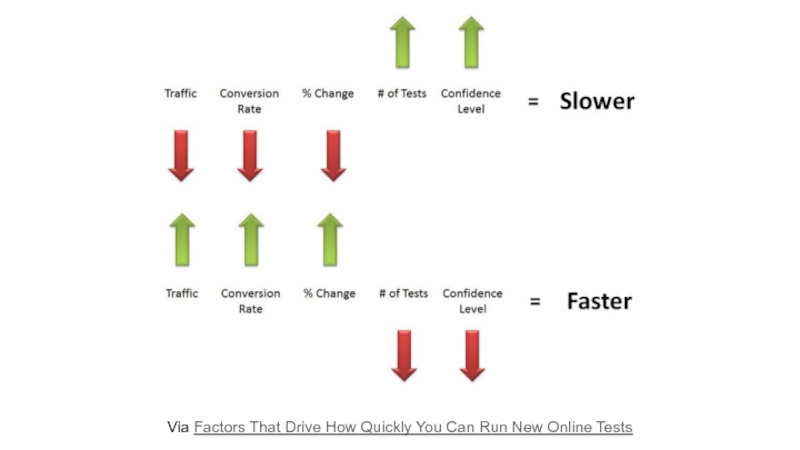

- 25. Via Factors That Drive How Quickly You Can Run New Online Tests

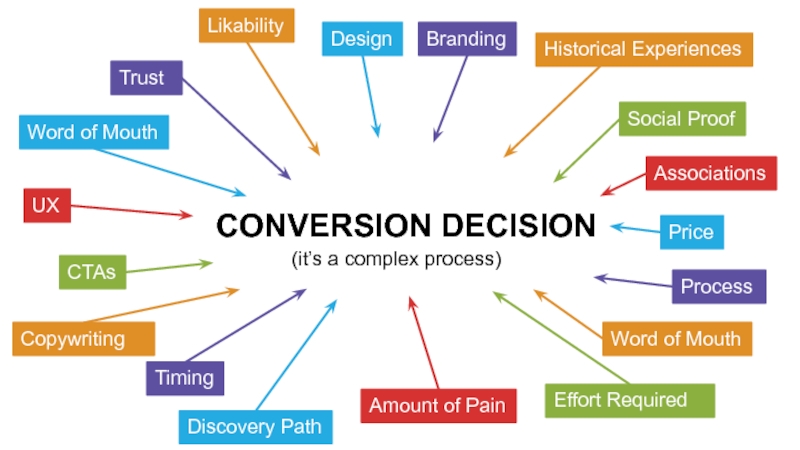

- 26. Trust Word of Mouth Likability Design Associations

- 27. How do we know where our conversion problems lie?

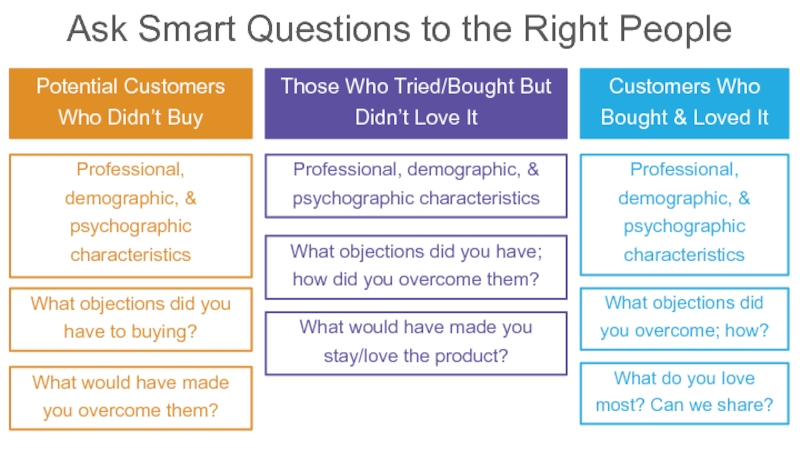

- 28. Ask Smart Questions to the Right People

- 29. We can start by targeting the

- 30. Our tests should be focused around

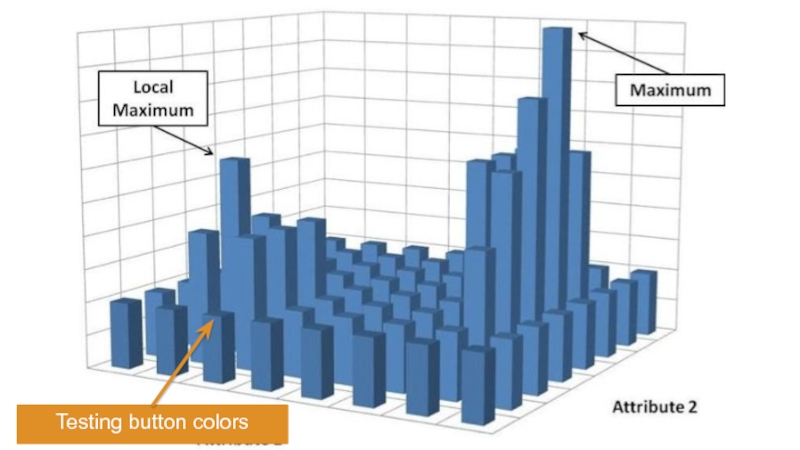

- 31. Testing button colors

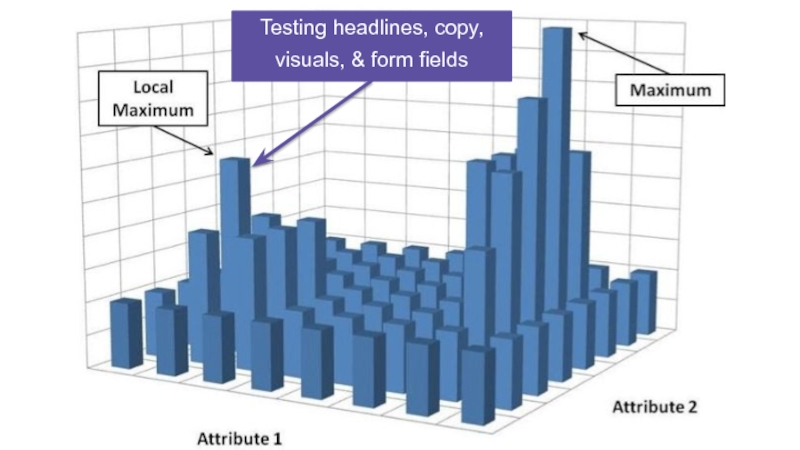

- 32. Testing headlines, copy, visuals, & form fields

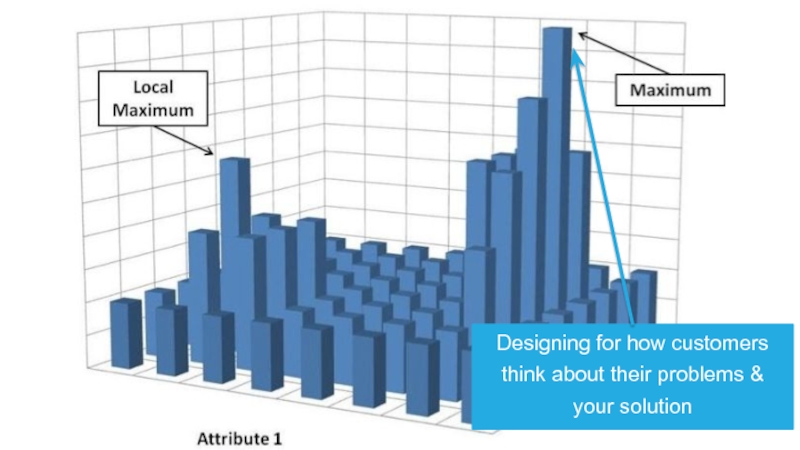

- 33. Designing for how customers think about their problems & your solution

- 34. THIS!

- 35. Does telling users we encrypt data scare them? Security

- 36. Via Visual Website Optimizer Could this actually HURT conversion?

- 37. Via Visual Website Optimizer

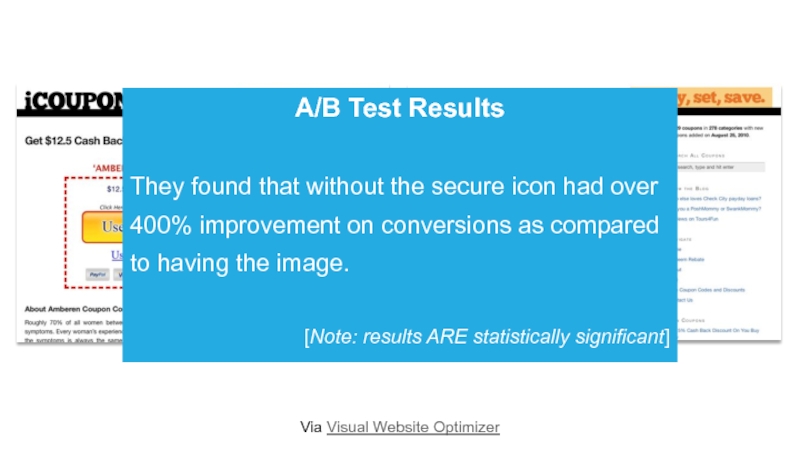

- 38. Via Visual Website Optimizer A/B Test Results

- 39. We need to remove the security messages on our site ASAP!

- 40. We should test this.

- 41. Is this the most meaningful test

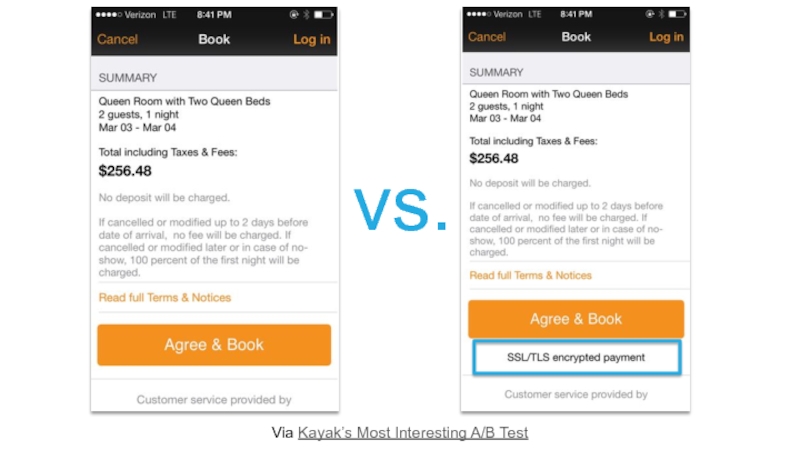

- 42. Via Kayak’s Most Interesting A/B Test vs.

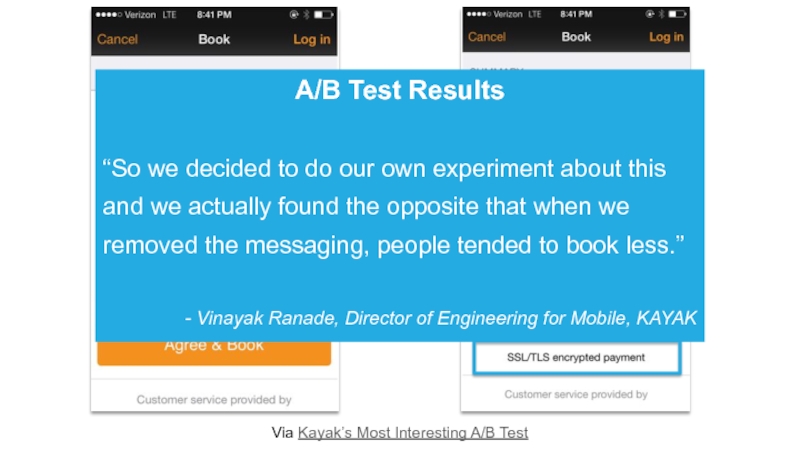

- 43. Via Kayak’s Most Interesting A/B Test

- 44. Good thing we tested! Good thing

- 45. What should we expect from sharing our content on social media? Social CTR

- 46. Just find the average social CTRs

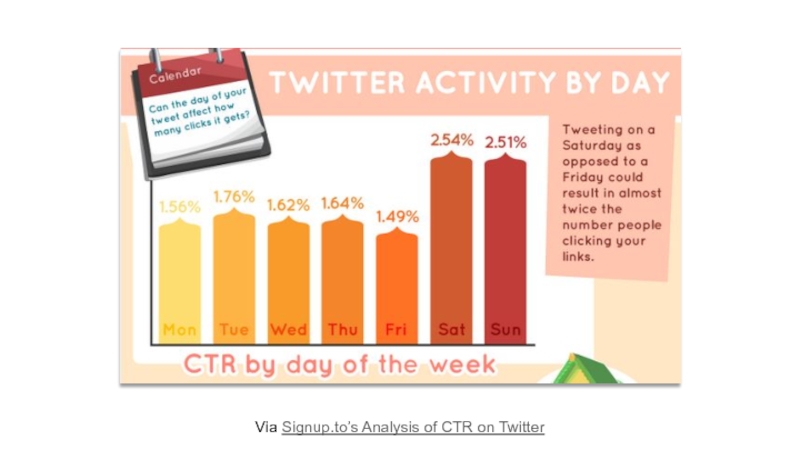

- 47. Via Signup.to’s Analysis of CTR on Twitter

- 48. Via Signup.to’s Analysis of CTR on Twitter

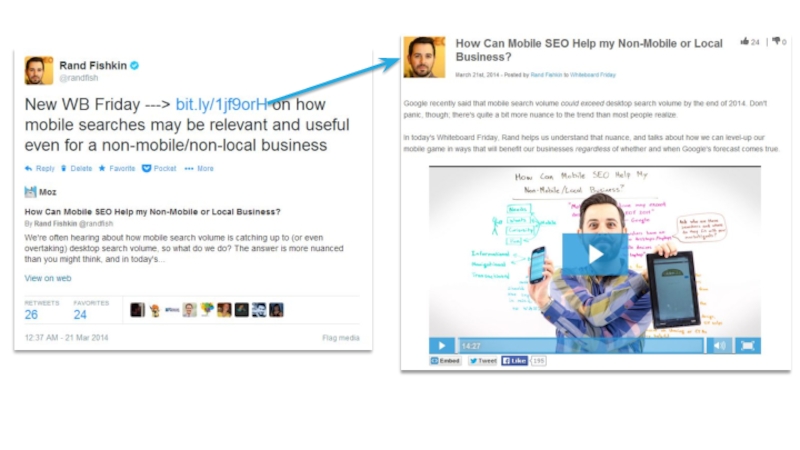

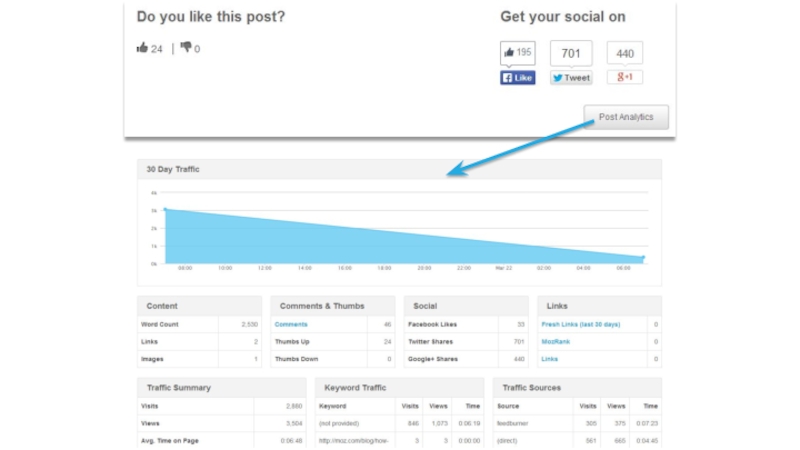

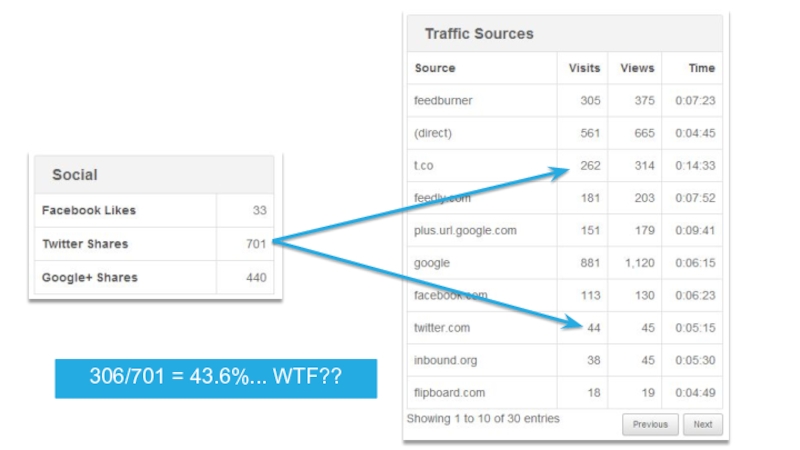

- 51. 306/701 = 43.7%... WTF??

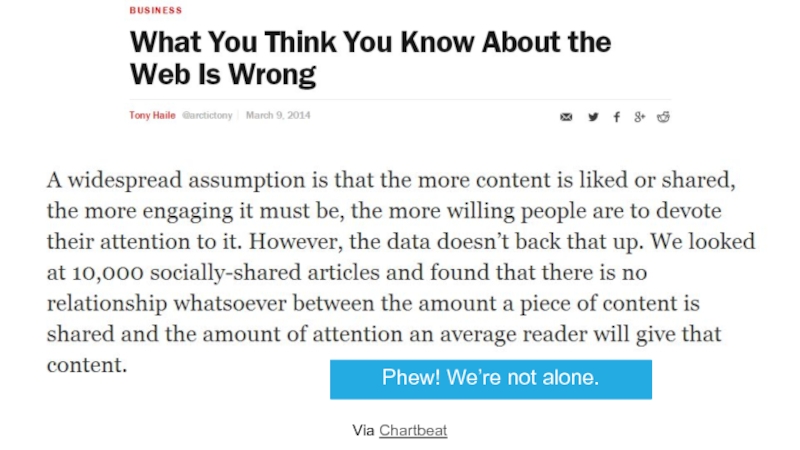

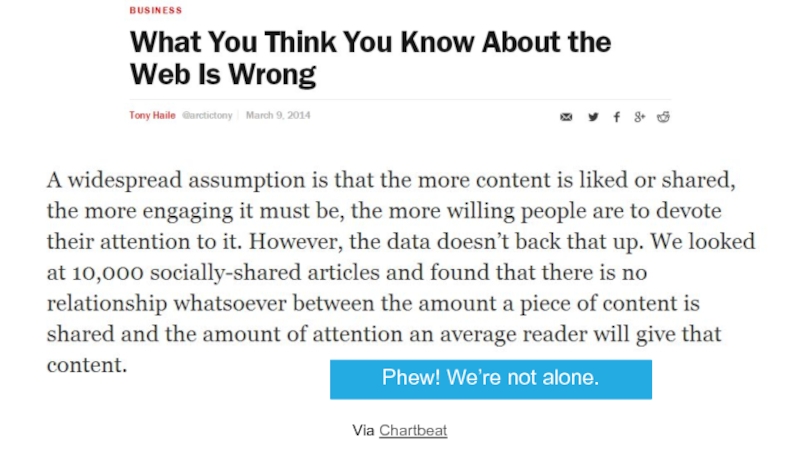

- 53. Phew! We’re not alone. Via Chartbeat

- 54. Assuming social metrics and engagement correlate

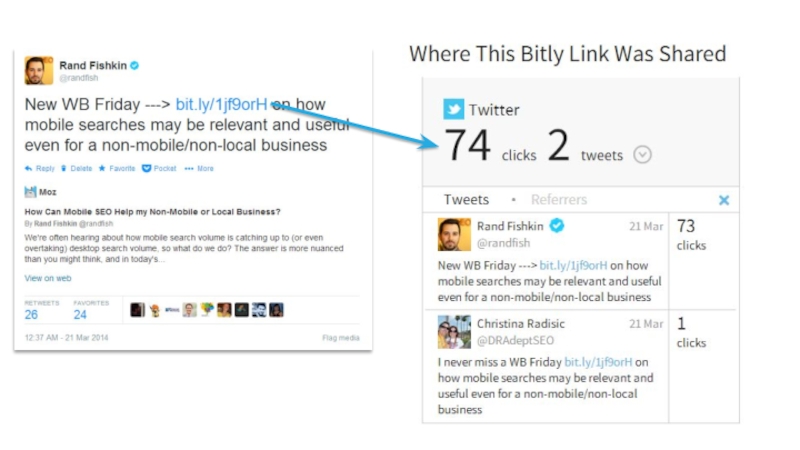

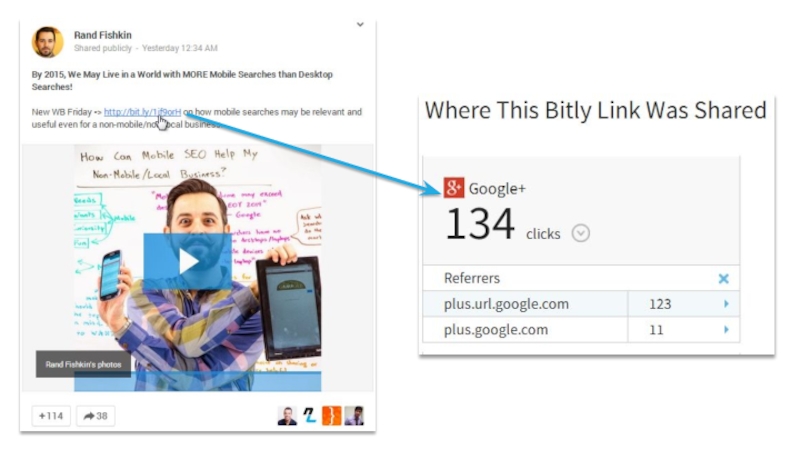

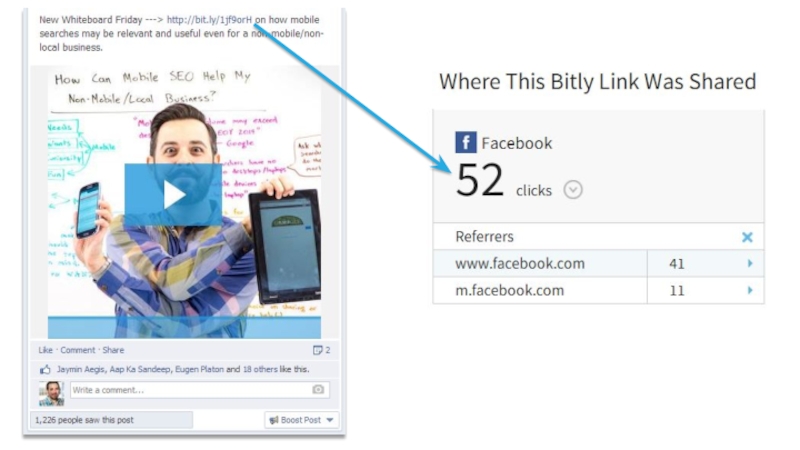

- 58. OK. We can create some benchmarks

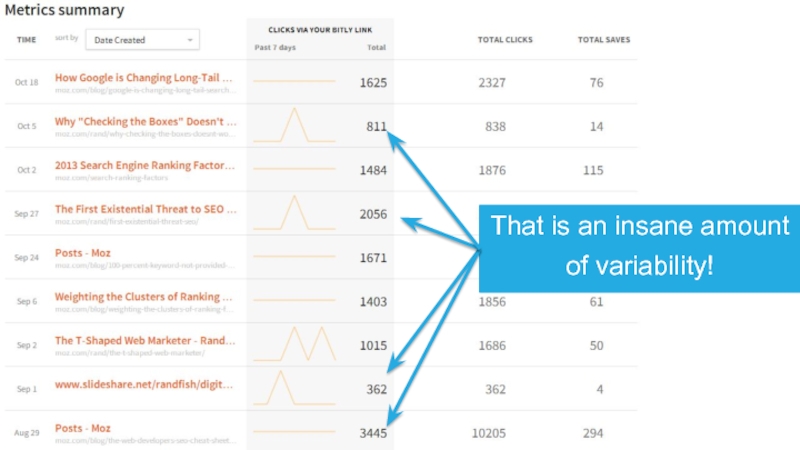

- 59. That is an insane amount of variability!

- 60. There are other factors at work

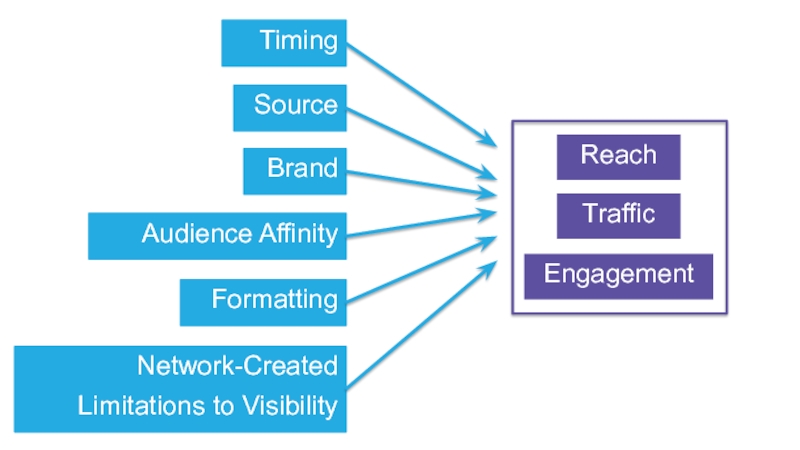

- 61. Timing Source Audience Affinity Formatting Network-Created Limitations to Visibility Brand Reach Traffic Engagement

- 62. Let’s start by examining the data and impacts of timing.

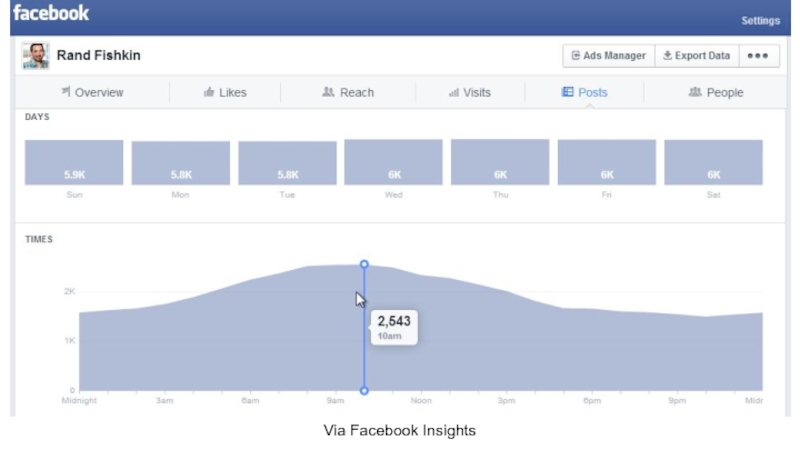

- 63. Via Facebook Insights

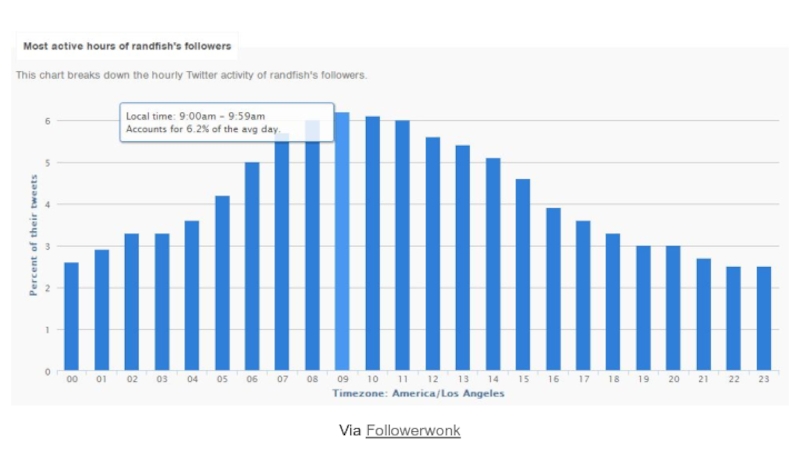

- 64. Via Followerwonk

- 65. Via Google Analytics

- 66. There’s a lot of nuance, but

- 67. Comparing a tweet or share sent

- 68. But, we now know three things:

- 69. Do they work? Can we make them more effective? Share Buttons

- 70. After relentless testing, OKTrends found that the following share buttons worked best:

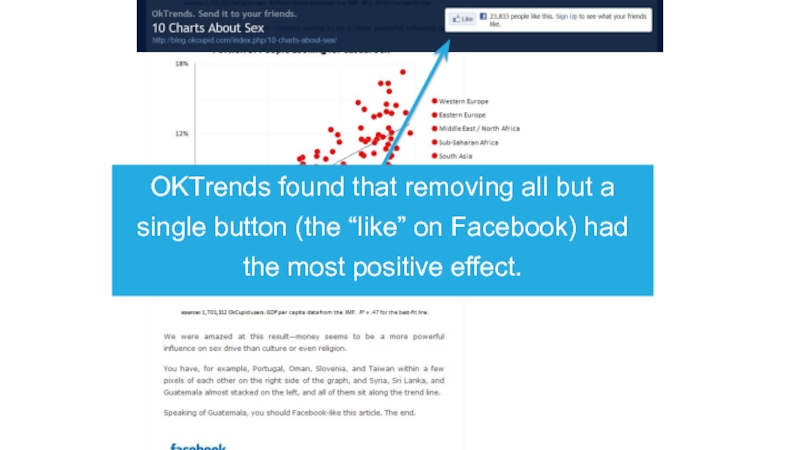

- 73. OKTrends found that removing all but a

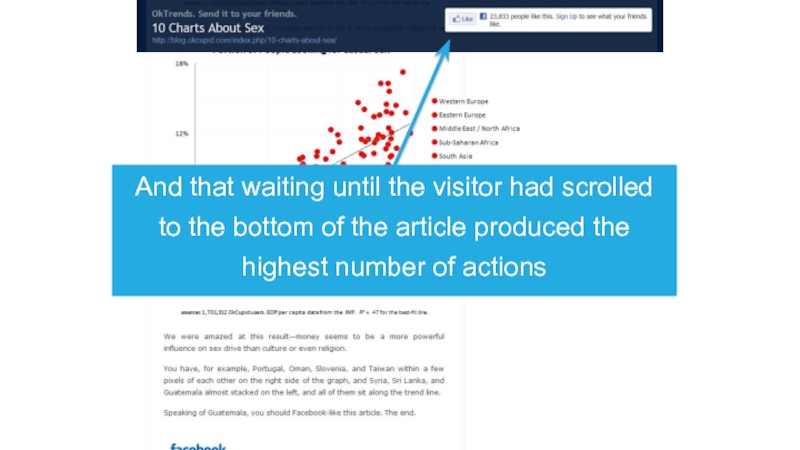

- 74. And that waiting until the visitor had

- 75. We should remove all our social

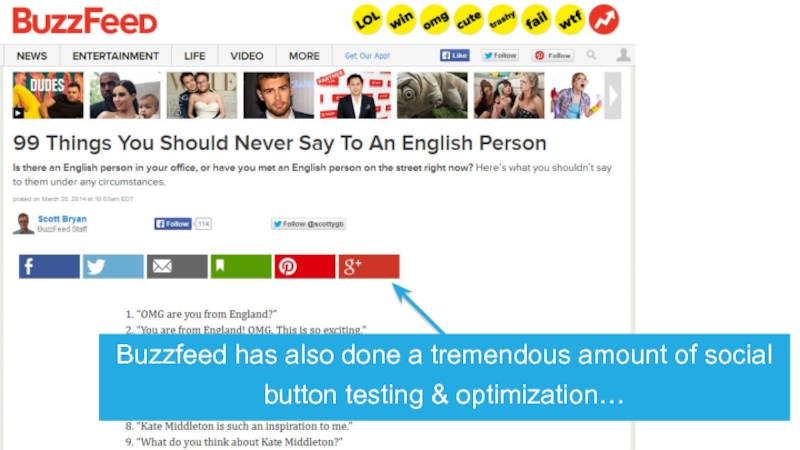

- 76. Buzzfeed has also done a tremendous amount of social button testing & optimization…

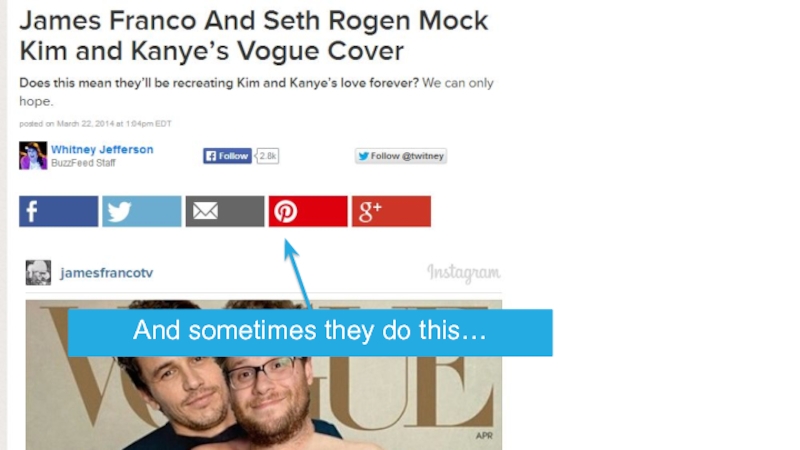

- 77. And sometimes they do this…

- 78. And sometimes this…

- 79. Is Buzzfeed still in testing mode?

- 80. Nope. They’ve found it’s best to

- 81. OK… Well, then let’s do that… Do it now!

- 82. Testing a small number of the

- 83. Buzzfeed & OKTrends share several unique

- 84. Unless we also fit a number

- 85. BTW – it is true that

- 86. Does it still work better than standard link text? Anchor Text

- 87. Psh. Anchor text links obviously work.

- 88. It has been a while since

- 89. Testing in Google is very, very

- 90. 1) Three-word, informational keyword phrase

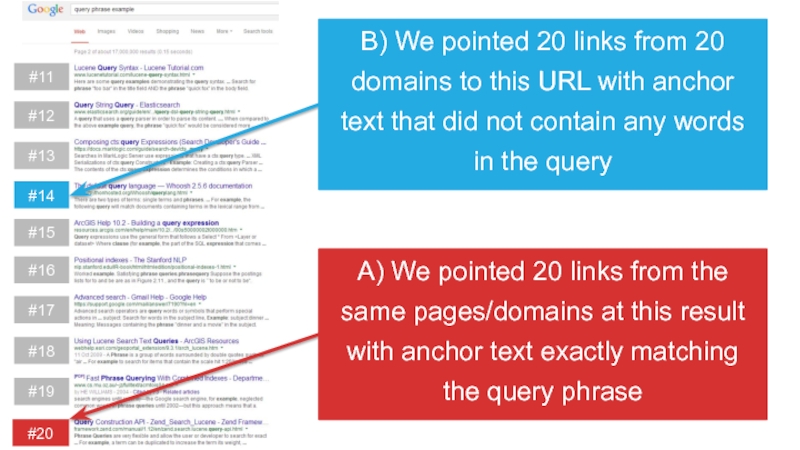

- 91. A) We pointed 20 links from 20

- 92. [Search results screenshots, positions #11 to #20 and #1 to #10]

- 93. See? Told you it works.

- 94. While both results moved up the

- 95. Princess Bubblegum and I are in

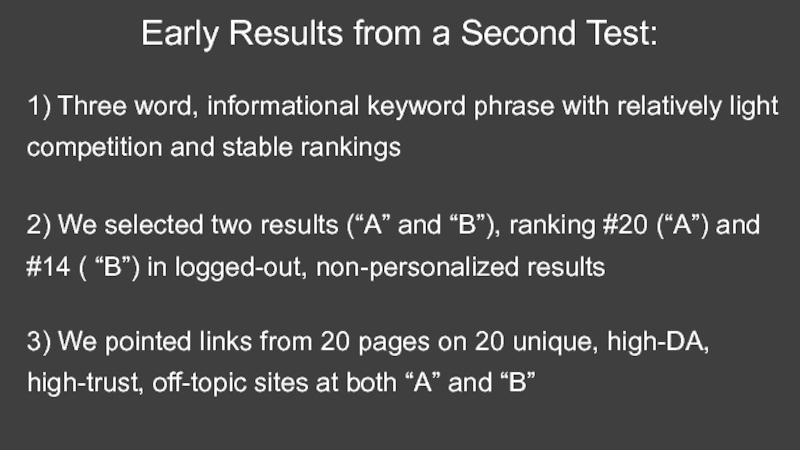

- 96. 1) Three-word, informational keyword phrase

- 97. B) We pointed 20 links from 20

- 98. [Search results screenshots, positions #11 to #20 and #1 to #10]

- 99. Good thing we tested! This is

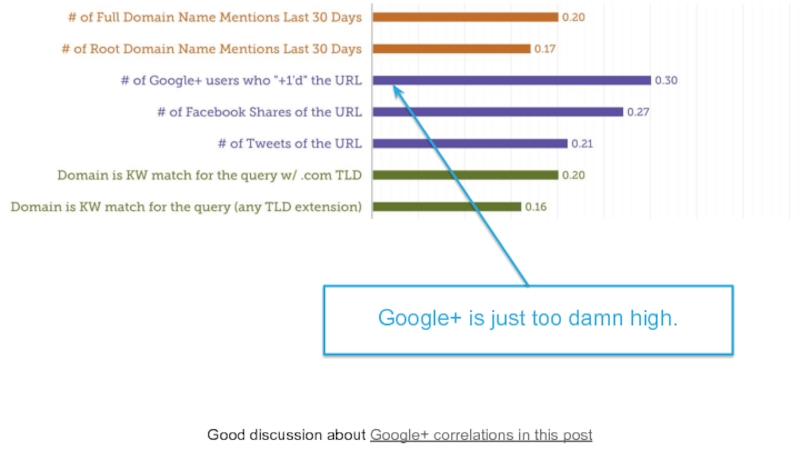

- 100. Does it influence Google’s non-personalized search rankings? Google+

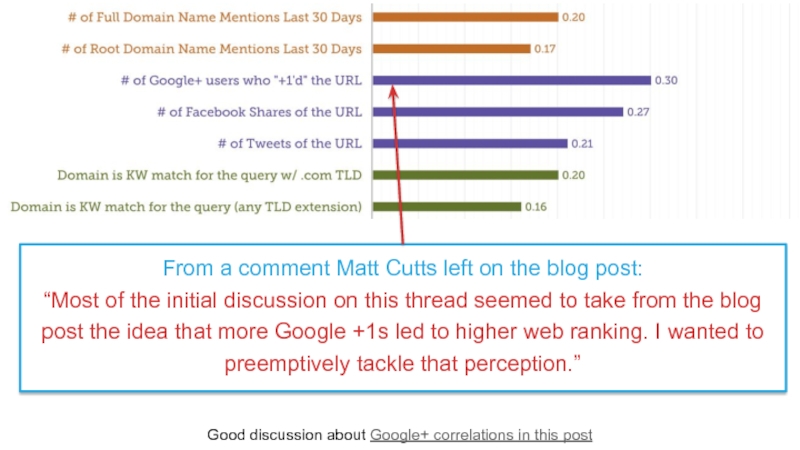

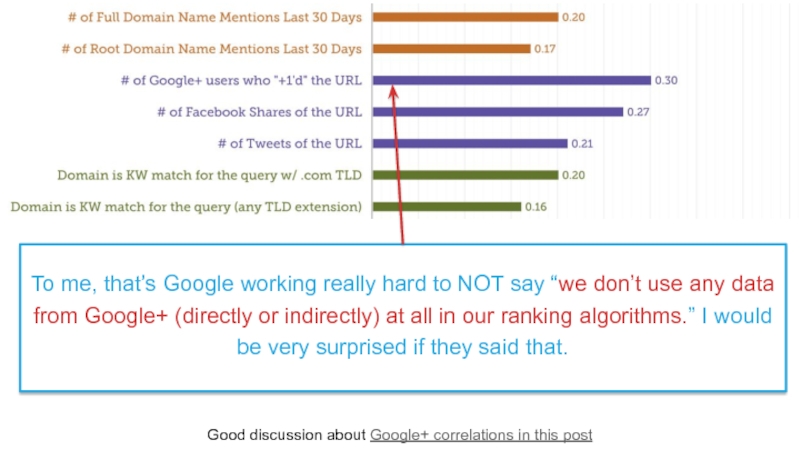

- 101. Good discussion about Google+ correlations in this post Google+ is just too damn high.

- 102. Good discussion about Google+ correlations in this

- 103. Good discussion about Google+ correlations in this

- 104. Google explicitly SAID +1s don’t affect

- 105. The correlations are surprisingly high for

- 106. First, remember how hard it is

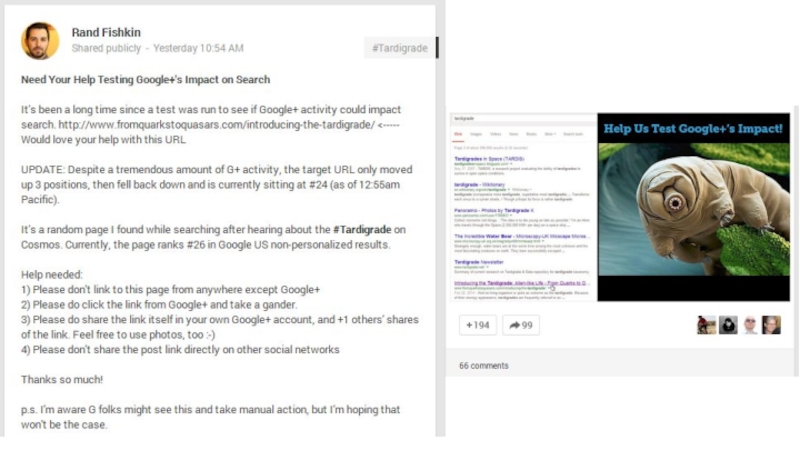

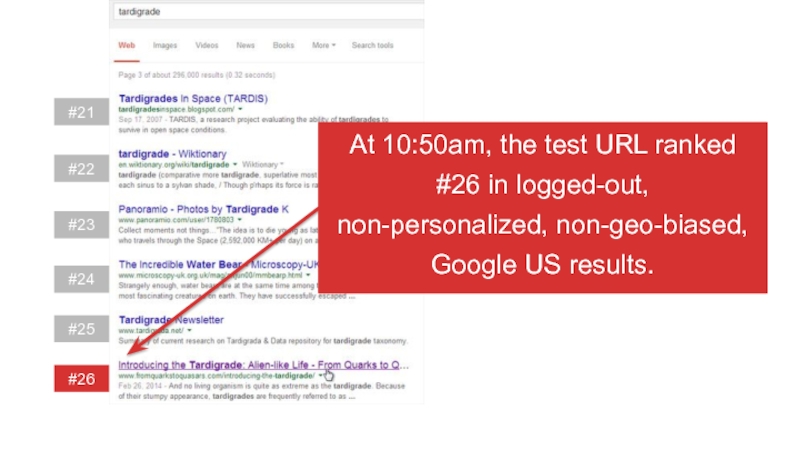

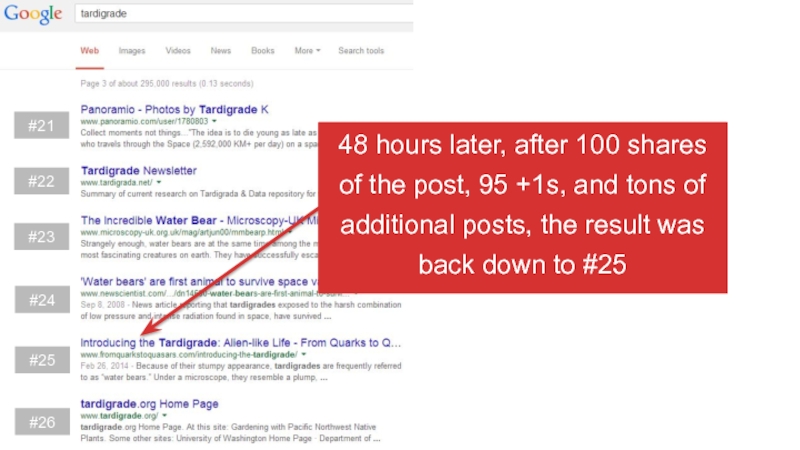

- 108. [Search results screenshot, positions #21 to #26]

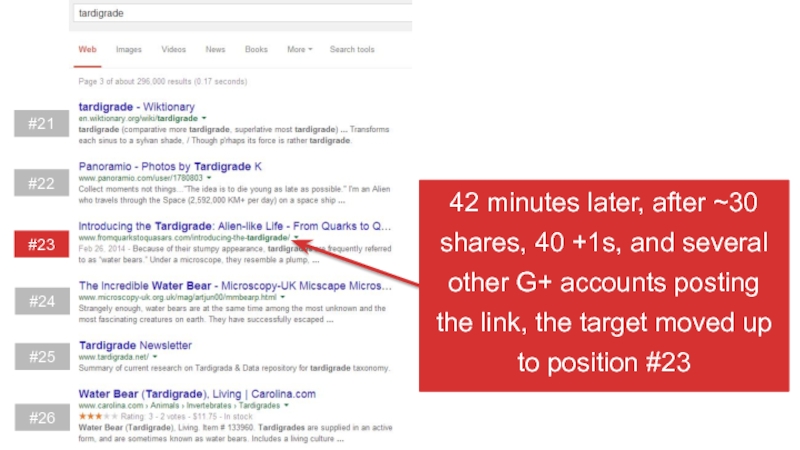

- 109. 42 minutes later, after ~30 shares, 40

- 110. [Search results screenshot, positions #21 to #26]

- 111. At least we proved one thing –

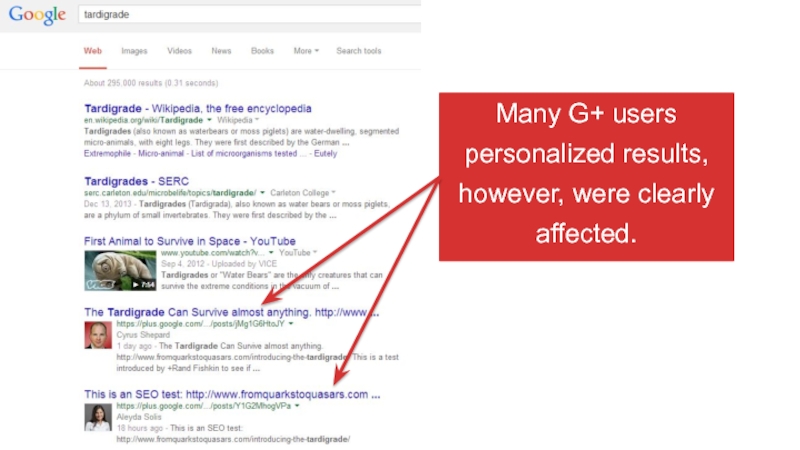

- 112. Many G+ users’ personalized results, however, were clearly affected.

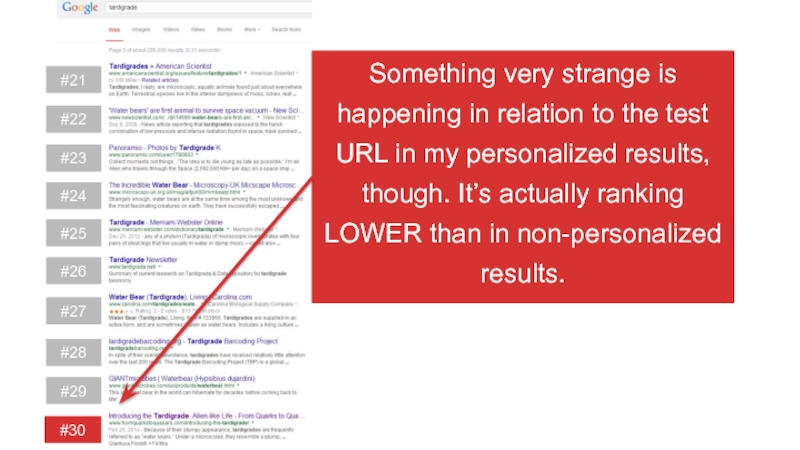

- 113. [Search results screenshot, positions #21 to #30]

- 114. Could Google be donking up the test? Sadly, it’s impossible to know.

- 115. GASP!!! The posts did move the

- 116. Sigh… It’s possible that Jenny’s right,

- 117. More testing is needed, but how

- 118. Phew! We’re not alone. Via Chartbeat

- 119. If I were Google, I wouldn’t

- 120. Ready to Be Your Own Skeptic?

- 121. Rand Fishkin, Wizard of Moz | @randfish | rand@moz.com bit.ly/mozskeptics

Slide 1

Rand Fishkin, Wizard of Moz | @randfish | rand@moz.com

Why Great Marketers Must Be Great Skeptics

Slide 9

The Earth (and everything in the solar system, including the Sun)

Slide 11

In 2009, Conversion Rate Experts built us a new landing page,

Via CRE’s Case Study

Slide 13

- The Crap Skeptic: Let’s change our landing page
- The Good Skeptic: We should A/B test a long landing page in our conversion funnel.
- The Great Skeptic: How do we know page length was responsible? What else changed?
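The good skeptic's A/B test depends on each visitor seeing the same landing page on every visit. Below is a minimal sketch that assumes visitors carry a stable ID. It uses one common approach, hashing that ID into a bucket. The experiment name `long-landing-page` and the variant labels are hypothetical:

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str = "long-landing-page",
                   variants: tuple[str, ...] = ("control", "long")) -> str:
    """Deterministically map a visitor to one variant of an experiment.

    Hashing (experiment, visitor_id) means the same visitor always gets the
    same page, while different experiments split traffic independently.
    """
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Interpret the first 8 bytes as an unsigned integer, then pick a bucket.
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]


# Hypothetical visitor IDs.
for vid in ["visitor-1", "visitor-2", "visitor-3"]:
    print(vid, assign_variant(vid))
```

Hashing removes the need to store an assignment table and keeps the split stable across visits. Assigning randomly on every page view, by contrast, could show one visitor both pages and blur the comparison.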

Slide 14

- The Crap Skeptic: “I do believe sadly it’s
- The Good Skeptic: “Listen, all magic is scientific principles presented like ‘mystical hoodoo’, which is fun, but it’s sort of irresponsible.”
- The Great Skeptic: “The good thing about science is that it’s true whether or not you believe in it.”

Slide 15

In fact, we’ve changed our landing pages numerous times to shorter

Slide 17

Assumes one belief-reinforcing data point is evidence enough

Doesn’t question what’s truly

Doesn’t seek to validate

Slide 18

Doesn’t make assumptions about why a result occurred

Knows that correlation isn’t

Validates assumptions w/ data

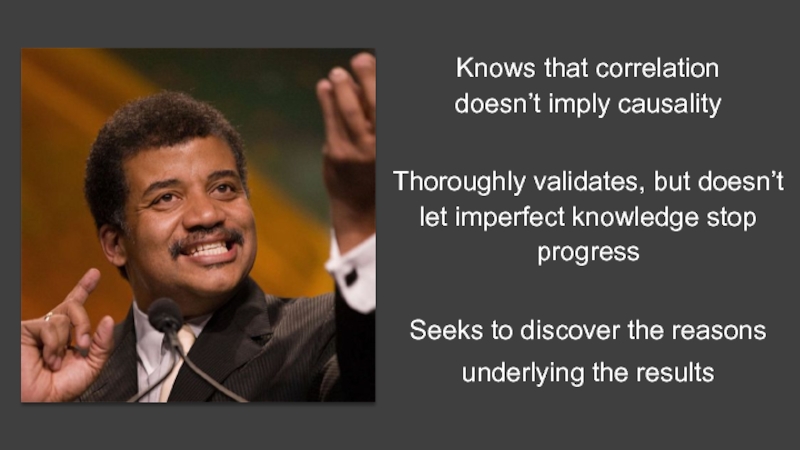

Slide 19

Seeks to discover the reasons underlying the results

Knows that correlation doesn’t imply causation

Thoroughly validates, but doesn’t let imperfect knowledge stop progress

Slide 21

Obviously the more tests we run, the better we can optimize

Slide 23

Via Wordstream’s What is a Good Conversion Rate?

Do Those Who Test

Slide 24

Hmm… There’s no correlation between those who run more tests across
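You can check a claim like this by computing a correlation coefficient between two columns of data. The sketch below uses Pearson's r. The numbers (tests run per month vs. conversion rate) are made up and stand in for the WordStream data, which is not reproduced here:

```python
import math


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length (at least 2 points)")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Unnormalized covariance and variances (the 1/n factors cancel out).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("a constant sample has no defined correlation")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical data: A/B tests run per month vs. conversion rate (%) per company.
tests_per_month = [1, 2, 3, 5, 8, 10, 12, 15]
conversion_rate = [2.1, 3.5, 1.8, 2.9, 2.2, 3.1, 2.4, 2.6]
print(f"r = {pearson_r(tests_per_month, conversion_rate):.2f}")
```

An r close to 0 suggests no linear relationship. Even a large r would only show that the two quantities move together. It would not show that more testing causes higher conversion.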

Slide 26

Trust

Word of Mouth

Likability

Design

Associations

Word of Mouth

Amount of Pain

CTAs

UX

Effort Required

Process

Historical Experiences

Social Proof

Copywriting

CONVERSION DECISION

Timing

Discovery

Branding

Price

(it’s a complex process)

Slide 28

Ask Smart Questions to the Right People

Potential Customers Who Didn’t Buy

Those

Customers Who Bought & Loved It

Professional, demographic, & psychographic characteristics

Professional, demographic, & psychographic characteristics

Professional, demographic, & psychographic characteristics

What objections did you have to buying?

What objections did you have; how did you overcome them?

What objections did you overcome; how?

What would have made you stay/love the product?

What would have made you overcome them?

What do you love most? Can we share?

Slide 29

We can start by targeting the right kinds of customers. Trying

Slide 30

Our tests should be focused around overcoming the objections of the

Slide 38

Via Visual Website Optimizer

A/B Test Results

They found that without the secure

[Note: results ARE statistically significant]
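For conversion A/B tests, "statistically significant" usually means that a test such as the two-proportion z-test judged the gap between variants unlikely to be chance. This is a minimal sketch that assumes simple conversion counts per variant. The visitor and conversion numbers are hypothetical, not Visual Website Optimizer's:

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int,
                          conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for H0: both variants convert at the same rate."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate: the best single estimate if H0 is true.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation: every visitor (or none) converted")
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts for variant A and variant B.
z, p = two_proportion_z_test(conv_a=200, n_a=5000, conv_b=260, n_b=5000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

With these made-up counts (4.0% vs. 5.2%), z comes out at about 2.86 and p at about 0.004. The test assumes independent visitors and a sample size fixed in advance. Checking the p-value repeatedly and stopping as soon as it dips below 0.05 inflates the false-positive rate.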

Slide 41

Is this the most meaningful test we can perform right now?

(I’m
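One input to "is this the most meaningful test?" is how much traffic a test needs before it can detect a change at all. This is a minimal sketch of the standard normal-approximation sample-size estimate for comparing two conversion rates. It assumes a two-sided α of 0.05 and 80% power. The baseline rate and target lift are hypothetical:

```python
import math
from statistics import NormalDist


def sample_size_per_variant(p_base: float, p_target: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed in EACH variant to detect a change from p_base to p_target.

    Normal-approximation formula for a two-sided, two-proportion test.
    """
    if not (0 < p_base < 1 and 0 < p_target < 1) or p_base == p_target:
        raise ValueError("rates must lie strictly between 0 and 1 and differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (p_base + p_target) / 2
    spread = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
              + z_beta * math.sqrt(p_base * (1 - p_base) + p_target * (1 - p_target)))
    return math.ceil(spread ** 2 / (p_target - p_base) ** 2)


# Hypothetical: 3% baseline conversion, hoping to detect a lift to 3.6%.
print(sample_size_per_variant(0.03, 0.036))
```

For these hypothetical rates the estimate is roughly 14,000 visitors per variant. On a site with modest traffic, one such test can take weeks, so a test on a low-impact element may not be worth the time.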

Slide 43

Via Kayak’s Most Interesting A/B Test

A/B Test Results

“So we decided to

– Vinayak Ranade, Director of Engineering for Mobile, KAYAK

Slide 54

Assuming social metrics and engagement correlate was a flawed assumption. We

Slide 58

OK. We can create some benchmarks based on these numbers and
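A benchmark is only as useful as the spread around it. This minimal sketch summarizes a set of per-post CTRs with the median, interquartile range, and coefficient of variation. The CTR values are hypothetical placeholders for your own share data:

```python
import statistics


def summarize_ctrs(ctrs: list[float]) -> dict[str, float]:
    """Benchmark-style summary of click-through rates given as fractions."""
    q1, _, q3 = statistics.quantiles(ctrs, n=4)  # quartile cut points
    mean = statistics.mean(ctrs)
    return {
        "median": statistics.median(ctrs),
        "iqr": q3 - q1,  # spread of the middle half of posts
        "mean": mean,
        # Coefficient of variation: sample stdev relative to the mean.
        # Values near or above 1 mean an "average CTR" is a shaky benchmark.
        "cv": statistics.stdev(ctrs) / mean,
    }


# Hypothetical per-post CTRs for one account's shares.
ctrs = [0.002, 0.004, 0.007, 0.009, 0.012, 0.030, 0.051, 0.110]
for name, value in summarize_ctrs(ctrs).items():
    print(f"{name}: {value:.4f}")
```

Reporting the median and IQR alongside the mean makes it obvious when a handful of viral posts are propping up the average.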

Slide 60

There are other factors at work here. We need to understand

Slide 61

Timing

Source

Audience Affinity

Formatting

Network-Created Limitations to Visibility

Brand

Reach

Traffic

Engagement

Slide 66

There’s a lot of nuance, but we can certainly see how

Slide 67

Comparing a tweet or share sent at 9am Pacific against tweets
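To compare send times on equal footing, you can group shares by the hour they were sent and pool clicks and impressions within each hour. This minimal sketch assumes you have exported (send time, clicks, impressions) records with timestamps already in one time zone. The record shape and sample values are hypothetical:

```python
from collections import defaultdict
from datetime import datetime


def ctr_by_hour(records: list[tuple[datetime, int, int]]) -> dict[int, float]:
    """Pooled CTR (total clicks / total impressions) for each hour of day.

    Each record is (sent_at, clicks, impressions). sent_at is assumed to be
    expressed already in the time zone being compared (e.g. US Pacific).
    """
    clicks: dict[int, int] = defaultdict(int)
    impressions: dict[int, int] = defaultdict(int)
    for sent_at, post_clicks, post_impressions in records:
        clicks[sent_at.hour] += post_clicks
        impressions[sent_at.hour] += post_impressions
    return {hour: clicks[hour] / impressions[hour]
            for hour in sorted(impressions) if impressions[hour] > 0}


# Hypothetical export: three posts at 9am, one at 3pm, one at 9pm (Pacific).
records = [
    (datetime(2014, 3, 3, 9, 0), 40, 2000),
    (datetime(2014, 3, 4, 9, 15), 30, 1800),
    (datetime(2014, 3, 5, 9, 5), 25, 1600),
    (datetime(2014, 3, 3, 15, 0), 12, 1500),
    (datetime(2014, 3, 5, 21, 30), 8, 1200),
]
for hour, ctr in ctr_by_hour(records).items():
    print(f"{hour:02d}:00  CTR {ctr:.2%}")
```

Pooling totals keeps a single low-traffic post from swinging an hour's figure. Each hour still mixes in audience, format, and network effects, so treat a gap between hours as a hypothesis to test, not a conclusion.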

Slide 68

But we now know three things:

#1 – When our audience is

#2 – Sharing just once is suboptimal

#3 – To be a great skeptic (and marketer), we should attempt to understand each of these inputs with similar rigor

Slide 73

OKTrends found that removing all but a single button (the “like”

Slide 74

And that waiting until the visitor had scrolled to the bottom

Slide 75

We should remove all our social sharing buttons and replace them

Slide 80

Nope.

They’ve found it’s best to show different buttons based on both

Slide 82

Testing a small number of the most impactful social button changes

Slide 83

Buzzfeed & OKTrends share several unique qualities:

- They have huge amounts of
- Social shares are integral to their business model
- The content they create is optimized for social sharing

Slide 84

Unless we also fit a number of these criteria, I have

Slide 85

BTW – it is true that testing social buttons can coincide

Slide 87

Psh. Anchor text links obviously work. Otherwise Google wouldn’t be penalizing

Slide 88

It has been a while since we’ve seen a public test

Slide 89

Testing in Google is very, very hard. There are so many confounding

Slide 90

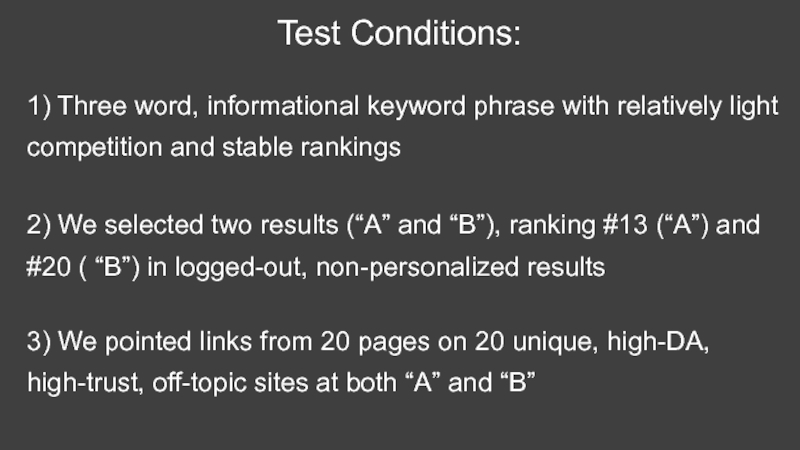

Test Conditions:

1) Three-word, informational keyword phrase with relatively light competition and

2) We selected two results (“A” and “B”), ranking #13 (“A”) and #20 (“B”) in logged-out, non-personalized results

3) We pointed links from 20 pages on 20 unique, high-DA, high-trust, off-topic sites at both “A” and “B”

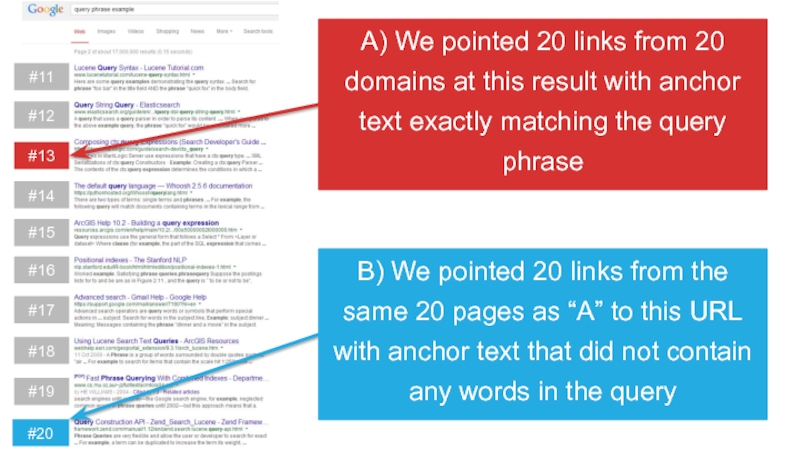

Slide 91

A) We pointed 20 links from 20 domains at this result

[Search results screenshot, positions #11 to #20]

B) We pointed 20 links from the same 20 pages as “A” to this URL with anchor text that did not contain any words in the query

Slide 92

[Search results screenshots, positions #11 to #20 and #1 to #10]

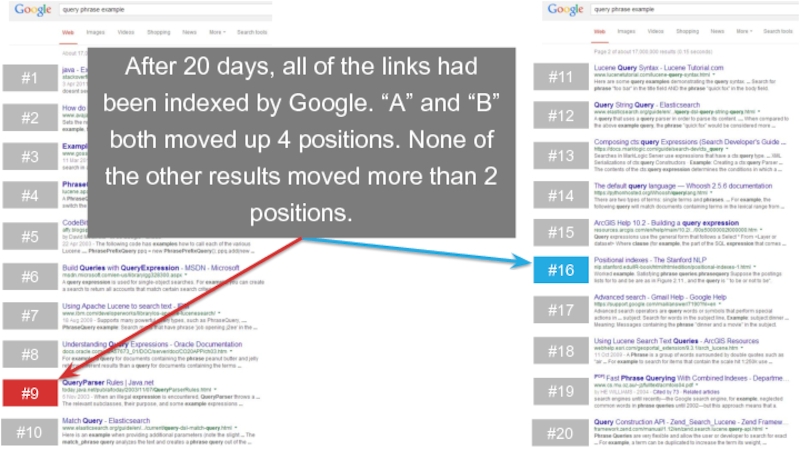

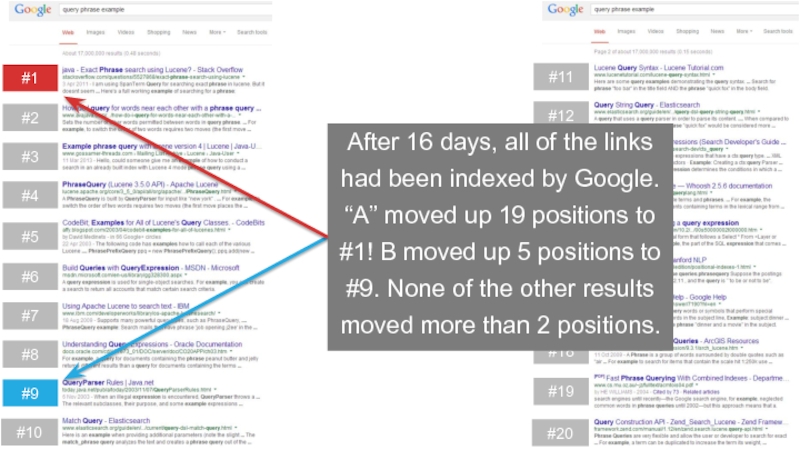

After 20 days, all of the links had been indexed by

Slide 94

While both results moved up the same number of positions, it’s

Slide 95

Princess Bubblegum and I are in agreement. We should do the

Slide 96

Early Results from a Second Test:

1) Three-word, informational keyword phrase with relatively light competition and

2) We selected two results (“A” and “B”), ranking #20 (“A”) and #14 (“B”) in logged-out, non-personalized results

3) We pointed links from 20 pages on 20 unique, high-DA, high-trust, off-topic sites at both “A” and “B”

Slide 97

B) We pointed 20 links from 20 domains to this URL

[Search results screenshot, positions #11 to #20]

A) We pointed 20 links from the same pages/domains at this result with anchor text exactly matching the query phrase

Slide 98

[Search results screenshots, positions #11 to #20 and #1 to #10]

After 16 days, all of the links had been indexed by

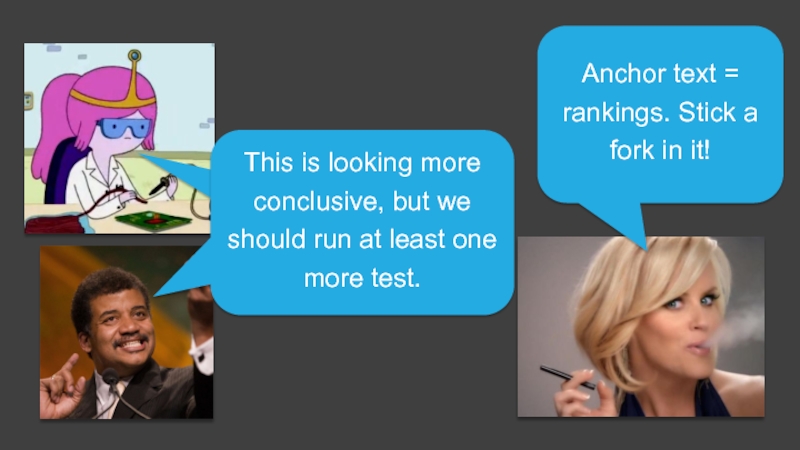

Slide 99

Good thing we tested!

This is looking more conclusive, but we should

Anchor text = rankings. Stick a fork in it!

Slide 102

Good discussion about Google+ correlations in this post

From a comment Matt

“Most of the initial discussion on this thread seemed to take from the blog post the idea that more Google +1s led to higher web ranking. I wanted to preemptively tackle that perception.”

Slide 103

Good discussion about Google+ correlations in this post

To me, that’s Google

Slide 104

Google explicitly SAID +1s don’t affect rankings. You think they’d lie

Slide 105

The correlations are surprisingly high for something with no connection. There

Slide 106

First, remember how hard it is to prove causality with a
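Correlation studies of ranking factors often use Spearman's rank correlation between a metric (here, Google +1 count) and search position. This minimal sketch shows how such a coefficient is computed, using hypothetical numbers:

```python
import math


def average_ranks(values: list[float]) -> list[float]:
    """1-based ranks; tied values share the average of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman_rho(xs: list[float], ys: list[float]) -> float:
    """Spearman's rho: the Pearson correlation of the rank-transformed data."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length (at least 2 points)")
    rx, ry = average_ranks(xs), average_ranks(ys)
    n = len(rx)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        raise ValueError("a constant sample has no defined correlation")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical results page: positions 1-10 and the +1 count of each result.
positions = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
plus_ones = [120, 95, 80, 85, 40, 30, 33, 12, 10, 5]
print(f"rho = {spearman_rho(positions, plus_ones):.2f}")
```

Position 1 is the best, so a strongly negative rho (about -0.98 for these made-up numbers) means more +1s go with better rankings. The coefficient alone cannot distinguish "+1s lift rankings" from "well-ranked pages attract +1s". Nor can it rule out a shared cause such as content quality or audience size.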

Slide 108

[Search results screenshot, positions #21 to #26]

At 10:50am, the test URL ranked #26 in logged-out, non-personalized, non-geo-biased,

Slide 109

42 minutes later, after ~30 shares, 40 +1s, and several other

[Search results screenshot, positions #21 to #26]

Slide 110

[Search results screenshot, positions #21 to #26]

48 hours later, after 100 shares of the post, 95 +1s,

Slide 111

At least we proved one thing – the Google+ community is

Slide 113

[Search results screenshot, positions #21 to #30]

Something very strange is happening in relation to the test URL

Slide 115

GASP!!! The posts did move the result up, then someone from

Slide 116

Sigh… It’s possible that Jenny’s right, but impossible to prove. We

Slide 117

More testing is needed, but how you do it without any

That said, remember this: